Create an Autonomous Exadata VM Cluster

You can create an Autonomous Exadata VM cluster from the Oracle Cloud Infrastructure console.

Note:

You can only create a Multiple VM - Autonomous VM Cluster (AVMC) on Exadata Infrastructure resources created in Oracle Cloud after the launch of the multiple VM Autonomous Databases feature. Please create a service request in My Oracle Support if you need to address this limitation and want to add an Autonomous VM Cluster to older Exadata Infrastructure resources. For help with filing a support request, see Create a Service Request in My Oracle Support.

Note:

23ai databases can be created only on ECPU-based Autonomous Exadata VM Clusters (AVMCs) provisioned with the required tags. For the tag requirements, see 23ai Database Software Version Tag Requirements.

Prerequisites

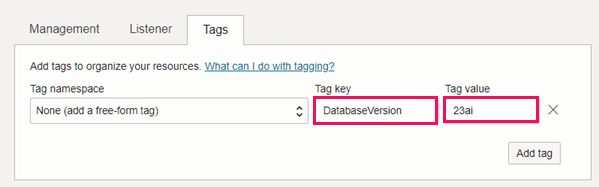

23ai Database Software Version Tag Requirements

- Tag namespace: None (Add a free-form tag)

- Tag key: DatabaseVersion

- Tag value: 23ai

An ECPU-based AVMC created with the 23ai tag supports only Autonomous Container Databases (ACDs) with Oracle Database software version 23ai; you cannot create 19c ACDs inside this AVMC. ACDs with Oracle Database software version 19c can only be provisioned in an AVMC that is not created with the 23ai tag, so 19c and 23ai ACDs cannot coexist in the same AVMC. See Database Features Managed from Autonomous Container Database for details.
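The tag rule above can be read as a small decision function. The Python sketch below is a minimal illustration, not an OCI SDK call: `allowed_acd_versions` is a hypothetical helper, it assumes the cluster's compute model and free-form tags are available as a string and a dictionary, and it matches the tag key and value exactly because this section does not say whether matching is case-sensitive.

```python
from typing import Dict, FrozenSet

# Free-form tag that marks an AVMC for 23ai (from the tag requirements above).
TAG_KEY = "DatabaseVersion"
TAG_VALUE = "23ai"


def allowed_acd_versions(compute_model: str, freeform_tags: Dict[str, str]) -> FrozenSet[str]:
    """Return the ACD software versions an AVMC could host (hypothetical helper).

    A cluster carrying the 23ai tag hosts 23ai ACDs only; any other cluster
    hosts 19c ACDs only. The two versions never share one AVMC.
    """
    if freeform_tags.get(TAG_KEY) == TAG_VALUE:
        # Assumption: a 23ai tag on a non-ECPU cluster is treated as a
        # configuration error, because 23ai requires the ECPU compute model.
        if compute_model.upper() != "ECPU":
            raise ValueError("23ai ACDs require an ECPU-based AVMC")
        return frozenset({"23ai"})
    return frozenset({"19c"})


if __name__ == "__main__":
    print(sorted(allowed_acd_versions("ECPU", {"DatabaseVersion": "23ai"})))  # ['23ai']
    print(sorted(allowed_acd_versions("OCPU", {})))                           # ['19c']
```

Returning a single version per cluster mirrors the rule that 19c and 23ai ACDs never share an AVMC.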

IAM Policy Requirements

| Deployment Choice | IAM Policies |
|---|---|
| Oracle Public Cloud | |
| Exadata Cloud@Customer | |

Zero Trust Packet Routing (ZPR) Policy Requirements

APPLIES TO: Oracle Public Cloud only

- IAM policies required to add Zero Trust Packet Routing (ZPR) security attributes while provisioning an AVMC:

allow group <group_name> to { ZPR_TAG_NAMESPACE_USE, SECURITY_ATTRIBUTE_NAMESPACE_USE } in tenancy
allow group <group_name> to manage autonomous-database-family in tenancy
allow group <group_name> to read security-attribute-namespaces in tenancy

- To provision an AVMC with security attributes, you must also enable the corresponding ZPR policies to allow traffic.

For example, to provision an AVMC with the following security attributes, you must define the sample ZPR policies shown below:

- VCN network: ADBD

- Database client: ADBDClient

- Database server: ADBDServer

Sample ZPR policies to provision the AVMC successfully:

in VCN-Network:ADBD VCN allow all-endpoints to connect to Db-Server:ADBDServer endpoints with protocol='tcp/2484'
in VCN-Network:ADBD VCN allow all-endpoints to connect to Db-Server:ADBDServer endpoints with protocol='tcp/22'
in VCN-Network:ADBD VCN allow all-endpoints to connect to Db-Server:ADBDServer endpoints with protocol='tcp/1521'
in VCN-Network:ADBD VCN allow Db-Server:ADBDServer endpoints to connect to all-endpoints
in VCN-Network:ADBD VCN allow all-endpoints to connect to Db-Server:ADBDServer endpoints with protocol='icmp'
in VCN-Network:ADBD VCN allow Db-Server:ADBDServer endpoints to connect to 'osn-services-ip-addresses' with protocol='tcp/443'

Sample ZPR policies for successful customer connectivity:

in VCN-Network:ADBD VCN allow all-endpoints to connect to Db-Client:ADBDClient endpoints with protocol='tcp/22'
in VCN-Network:ADBD VCN allow Db-Client:ADBDClient endpoints to connect to Db-Server:ADBDServer endpoints with protocol='tcp/1521'
in VCN-Network:ADBD VCN allow all-endpoints to connect to Db-Server:ADBDServer endpoints with protocol='tcp/2484'
in VCN-Network:ADBD VCN allow all-endpoints to connect to Db-Server:ADBDServer endpoints with protocol='tcp/22'
in VCN-Network:ADBD VCN allow all-endpoints to connect to Db-Server:ADBDServer endpoints with protocol='tcp/1521'
in VCN-Network:ADBD VCN allow Db-Server:ADBDServer endpoints to connect to all-endpoints
in VCN-Network:ADBD VCN allow all-endpoints to connect to Db-Server:ADBDServer endpoints with protocol='icmp'
in VCN-Network:ADBD VCN allow Db-Server:ADBDServer endpoints to connect to 'osn-services-ip-addresses' with protocol='tcp/443'
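Because the sample statements differ only in attribute values and protocols, it can help to generate them from one template when your attribute values are not ADBD, ADBDClient, and ADBDServer. The Python sketch below reproduces the two sample sets verbatim for the default values; the function names are hypothetical, and the prefixes (VCN-Network, Db-Server, Db-Client) are copied from the samples rather than derived from any ZPR grammar.

```python
from typing import List


def provisioning_zpr_policies(vcn: str = "ADBD", server: str = "ADBDServer") -> List[str]:
    """Rebuild the sample provisioning ZPR statements for the given attribute values."""
    scope = f"in VCN-Network:{vcn} VCN"
    srv = f"Db-Server:{server} endpoints"
    # Inbound TCP ports to the database server, in the order of the sample.
    statements = [
        f"{scope} allow all-endpoints to connect to {srv} with protocol='{proto}'"
        for proto in ("tcp/2484", "tcp/22", "tcp/1521")
    ]
    statements += [
        f"{scope} allow {srv} to connect to all-endpoints",
        f"{scope} allow all-endpoints to connect to {srv} with protocol='icmp'",
        f"{scope} allow {srv} to connect to 'osn-services-ip-addresses' with protocol='tcp/443'",
    ]
    return statements


def client_zpr_policies(vcn: str = "ADBD", server: str = "ADBDServer",
                        client: str = "ADBDClient") -> List[str]:
    """Client-connectivity statements: two client rules plus the provisioning set."""
    scope = f"in VCN-Network:{vcn} VCN"
    cli = f"Db-Client:{client} endpoints"
    srv = f"Db-Server:{server} endpoints"
    return [
        f"{scope} allow all-endpoints to connect to {cli} with protocol='tcp/22'",
        f"{scope} allow {cli} to connect to {srv} with protocol='tcp/1521'",
    ] + provisioning_zpr_policies(vcn, server)


if __name__ == "__main__":
    for line in client_zpr_policies():
        print(line)
```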

Resource Requirements

- 10 OCPUs or 40 ECPUs per node

- 120GB memory per node

- 338.5GB local storage per node

- 6.61TB Exadata storage

Network Requirements

You can provision new VM Clusters with IPv4/IPv6 dual-stack networking, enabling both IPv4 and IPv6 addresses. You enable this option when you create the VCN and when you create the subnet.

- Oracle Public Cloud Deployments:

If you are provisioning an Autonomous Exadata VM Cluster resource and setting up disaster recovery with Autonomous Data Guard, make sure the IP address space of the Virtual Cloud Networks (VCNs) does not overlap.

The following table lists the minimum required subnet sizes for Autonomous Database deployments on Oracle Public Cloud.

Tip:

The Networking service reserves three IP addresses in each subnet. Allocating a larger space for the subnet than the minimum required (for example, at least /25 instead of /28) can reduce the relative impact of those reserved addresses on the subnet's available space.

| Rack Size | Client Subnet: # Required IP Addresses | Client Subnet: Minimum Size |
|---|---|---|
| Base System or Quarter Rack | (4 addresses * 2 nodes) + 3 for SCANs + 3 reserved in subnet = 14 | /28 (16 IP addresses) |
| Half Rack | (4 * 4 nodes) + 3 + 3 = 22 | /27 (32 IP addresses) |
| Full Rack | (4 * 8 nodes) + 3 + 3 = 38 | /26 (64 IP addresses) |
| Flexible infrastructure systems (X8M and higher) | 4 addresses per database node (minimum of 2 nodes) + 3 for SCANs + 3 reserved in subnet | /28 is the minimum subnet size, but each additional node increases the required subnet size correspondingly. |

Tip:

Ensure that your subnets have enough available IP addresses to create multiple Autonomous Exadata VM Clusters. For example, a single VM cluster on a Base System or Quarter Rack requires at least 14 IP addresses. If you plan to create 2 VM clusters, define your subnet at /27 or create two subnets at /28.

- Exadata Cloud@Customer Deployments:

For Exadata Cloud@Customer IP requirements, refer to IP Addresses and Subnets for Exadata Cloud at Customer.
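For Oracle Public Cloud deployments, the arithmetic behind the subnet-size table can be sketched in Python as follows. This is a minimal sketch under stated assumptions: the helper names are hypothetical, and it assumes the 3 SCAN addresses are needed per VM cluster while the 3 reserved addresses are counted once per subnet, which is how the Tip about reserved addresses reads.

```python
# Constants from the Oracle Public Cloud subnet-size table and tips above.
IPS_PER_NODE = 4          # client-subnet addresses per database node
SCAN_IPS_PER_CLUSTER = 3  # SCAN addresses per VM cluster
RESERVED_PER_SUBNET = 3   # reserved by the Networking service in every subnet
MIN_NODES = 2
LARGEST_PREFIX = 28       # /28 is the smallest subnet the table allows


def required_ips(nodes_per_cluster: int, cluster_count: int = 1) -> int:
    """Client-subnet addresses needed for cluster_count clusters sharing one subnet."""
    if nodes_per_cluster < MIN_NODES:
        raise ValueError("an AVMC needs at least 2 database nodes")
    per_cluster = IPS_PER_NODE * nodes_per_cluster + SCAN_IPS_PER_CLUSTER
    # Assumption: the reserved addresses are counted once per subnet.
    return per_cluster * cluster_count + RESERVED_PER_SUBNET


def minimum_prefix(ip_count: int) -> int:
    """Longest IPv4 prefix whose block holds ip_count addresses, capped at /28."""
    host_bits = (ip_count - 1).bit_length()  # smallest n with 2**n >= ip_count
    return min(LARGEST_PREFIX, 32 - host_bits)


if __name__ == "__main__":
    for label, nodes in (("Quarter rack", 2), ("Half rack", 4), ("Full rack", 8)):
        n = required_ips(nodes)
        print(f"{label}: {n} addresses -> /{minimum_prefix(n)}")
    print(f"Two quarter-rack clusters: /{minimum_prefix(required_ips(2, 2))}")
```

For 2, 4, and 8 nodes it yields 14, 22, and 38 addresses (/28, /27, and /26), matching the table. Two quarter-rack clusters sharing one subnet need 25 addresses, which also lands on /27, consistent with the tip about multiple VM clusters.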

- Oracle Public Cloud Deployments:

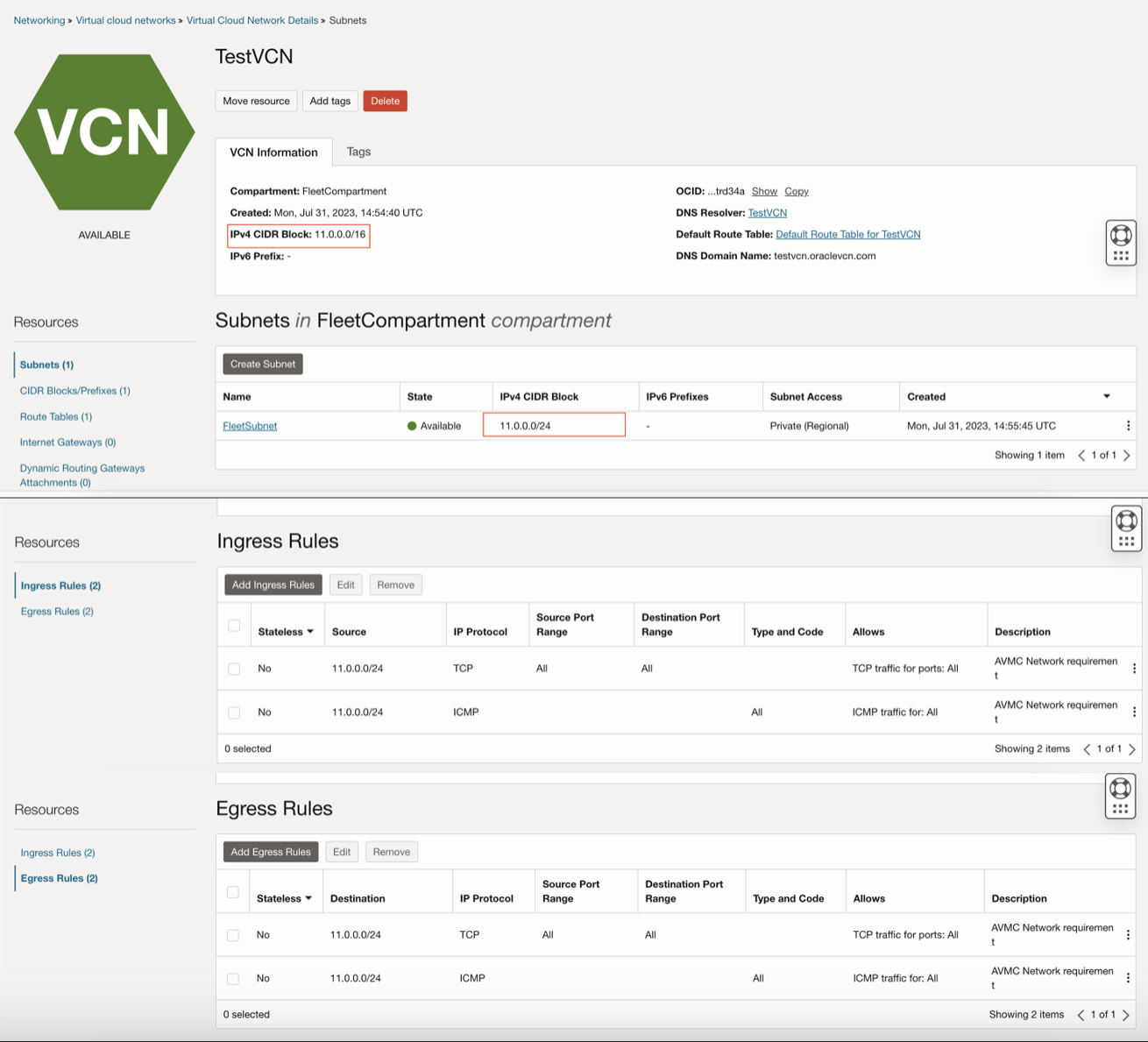

- To meet the minimum Security List requirements for provisioning an Autonomous VM Cluster:

- Open TCP on all ports for ingress and egress for the subnet CIDR.

- Open all ICMP types and codes for ingress and egress for the subnet CIDR.

- Ensure that ingress and egress rules have the same type: either both are Stateless or both are Stateful.

For example, in the screenshot, TestVCN has an IPv4 CIDR Block of 11.0.0.0/16 and FleetSubnet (the subnet used to provision the AVMC) has an IPv4 CIDR Block of 11.0.0.0/24.

- Depending on your requirements, you may also need to open the following ports to connect to and operate Autonomous Databases created in this AVMC.

| Destination Port | IP Protocol | Purpose |
|---|---|---|
| 1521 | TCP | SQL*Net traffic |
| 2484 | TCP | Transport Layer Security (TLS) from the Application Subnets |
| 6200 | TCP | Oracle Notification Service (ONS) traffic; Fast Application Notification (FAN) traffic |
| 443 | TCP | Oracle Application Express; Oracle Database Actions |
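The minimum Security List requirements can be checked mechanically before provisioning. The Python sketch below uses a hypothetical, simplified `Rule` record rather than the OCI SDK's security-list types, and it treats a rule whose CIDR contains the subnet CIDR (for example, the VCN's 11.0.0.0/16) as covering the subnet, which is an assumption on top of the stated requirement.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


@dataclass(frozen=True)
class Rule:
    """Hypothetical, simplified security-list rule (not an OCI SDK type)."""
    direction: str          # "ingress" or "egress"
    protocol: str           # "tcp" or "icmp" (ICMP rules assumed to allow all types/codes)
    cidr: str               # source CIDR for ingress, destination CIDR for egress
    stateless: bool
    all_ports: bool = True  # TCP only: True means every port is open


def _covers(rule: Rule, direction: str, protocol: str, subnet: Network) -> bool:
    net = ipaddress.ip_network(rule.cidr)
    return (
        rule.direction == direction
        and rule.protocol == protocol
        and net.version == subnet.version
        and subnet.subnet_of(net)  # the rule's CIDR contains the subnet CIDR
        and (protocol != "tcp" or rule.all_ports)
    )


def check_minimum_rules(rules: List[Rule], subnet_cidr: str) -> List[str]:
    """Return problems found; an empty list means the minimum rules are present."""
    subnet = ipaddress.ip_network(subnet_cidr)
    problems: List[str] = []
    matched: List[Rule] = []
    for direction in ("ingress", "egress"):
        for protocol in ("tcp", "icmp"):
            rule = next((r for r in rules if _covers(r, direction, protocol, subnet)), None)
            if rule is None:
                problems.append(f"missing {direction} {protocol} rule for {subnet}")
            else:
                matched.append(rule)
    # Ingress and egress must be all stateless or all stateful.
    if len({r.stateless for r in matched}) > 1:
        problems.append("ingress and egress rules mix stateless and stateful")
    return problems


if __name__ == "__main__":
    cidr = "11.0.0.0/24"  # FleetSubnet in the example above
    rules = [Rule(d, p, cidr, stateless=False) for d in ("ingress", "egress") for p in ("tcp", "icmp")]
    print(check_minimum_rules(rules, cidr))  # []
```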


- Exadata Cloud@Customer Deployments:

- Before provisioning an AVMC on Exadata Cloud@Customer, you must set up the network rules listed in Network Requirements for Oracle Exadata Database Service on Cloud@Customer in Preparing for Exadata Database Service on Cloud@Customer.

- Additionally, you need to open port 1522 to:

- Allow TCP traffic between primary and standby databases in an Autonomous Data Guard setup.

- Support database cloning.

- If you plan to connect to the following commonly used applications, you may also need to open the following ports.

| Destination Port | IP Protocol | Purpose |
|---|---|---|
| 443 (Egress) | TCP | Key Management Service (KMS) |
| 1521 (Ingress) | TCP | Oracle Enterprise Manager (OEM) |
| 1521 | TCP | SQL*Net traffic |
| 2484 | TCP | Transport Layer Security (TLS) from the Application Subnets |
| 6200 | TCP | Oracle Notification Service (ONS) traffic; Fast Application Notification (FAN) traffic |
| 443 | TCP | Oracle Application Express; Oracle Database Actions |

Procedure

Tip:

For "try it out" alternatives that demonstrate these instructions, see the following labs in Oracle Autonomous Database Dedicated for Fleet Administrators Workshop:

- Lab 3: Provisioning a Cloud Autonomous Exadata VM Cluster for Autonomous Database on Dedicated Infrastructure

- Lab 5: Provisioning an Autonomous VM Cluster on Exadata Cloud@Customer

- Go to Autonomous Database in the Oracle Cloud Infrastructure Console.

For instructions, see Access Autonomous Database in the Oracle Cloud Infrastructure Console.

- In the side menu’s list of Autonomous Database resource types, click Autonomous Exadata VM Cluster.

The list of Autonomous Exadata VM Clusters in your current Compartment is displayed.

- Choose the Compartment where you want to create an Autonomous Exadata VM Cluster.

The list of Autonomous Exadata VM Clusters refreshes to show those in the selected Compartment.

- Click Create Autonomous Exadata VM Cluster.

- Fill out the Create Autonomous Exadata VM Cluster page with the following information:

Basic Information

Compartment: Select a compartment to host the Autonomous Exadata VM Cluster.

Display Name: Enter a user-friendly description or other information that helps you easily identify the infrastructure resource.

The display name does not have to be unique. Avoid entering confidential information.

Exadata Infrastructure

The Exadata Infrastructure to host the new Autonomous Exadata VM Cluster.

Autonomous VM Cluster resources: Compute Model

Compute Model: Denotes the compute model for your Autonomous Exadata VM Cluster resource. The default model is ECPU, which is based on the number of cores elastically allocated from a pool of compute and storage servers.

Click Change compute model if you want to select OCPU. The OCPU compute model is based on the physical core of a processor with hyper-threading enabled. The compute model chosen here applies to all the Autonomous Container Databases and Autonomous Databases created in this Autonomous Exadata VM Cluster resource. Refer to Compute Models.

To be able to create 23ai databases, you must provision the AVMC with the ECPU compute model.

Autonomous VM Cluster resources: DB Server Selection

Lists the DB Servers (VMs) used to deploy the new Autonomous Exadata VM Cluster (AVMC) resource.

The maximum resources (CPUs, memory, and local storage) available per VM are also displayed.

Optionally, you can add or remove VMs by clicking Edit DB Server Selection. This launches the Change DB Servers dialog, which lists all the available DB Servers with the following details for each one:

- Available CPUs

- Available memory (GB)

- Available local storage (GB)

- Number of VM Clusters and Autonomous VM Clusters in it.

By default, all the DB Servers that have the minimum resources needed to create an Autonomous VM Cluster are selected. To remove a DB Server, clear its check box in the list. The dialog then displays the maximum resources available per VM and the resources allocated per VM for your selection.

After selecting the DB Servers, click Save Changes.

You must select at least 2 DB Servers to deploy an AVMC resource; a minimum of 2 DB Servers is needed for a high availability (HA) configuration.
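The default DB Server selection behaves like a filter followed by a count check. In this minimal Python sketch, `DbServer` and `default_selection` are hypothetical, and the per-node thresholds are assumed to be the ECPU figures from Resource Requirements; this section does not spell out which minimums the dialog applies.

```python
from dataclasses import dataclass
from typing import List

# Assumed per-node minimums, taken from the Resource Requirements list (ECPU model).
MIN_ECPUS = 40
MIN_MEMORY_GB = 120.0
MIN_LOCAL_STORAGE_GB = 338.5
MIN_DB_SERVERS = 2  # needed for a high availability (HA) AVMC


@dataclass(frozen=True)
class DbServer:
    """Hypothetical view of one row in the Change DB Servers dialog."""
    name: str
    available_ecpus: int
    available_memory_gb: float
    available_local_storage_gb: float


def default_selection(servers: List[DbServer]) -> List[DbServer]:
    """Pre-select every DB Server that meets the per-node minimums."""
    eligible = [
        s for s in servers
        if s.available_ecpus >= MIN_ECPUS
        and s.available_memory_gb >= MIN_MEMORY_GB
        and s.available_local_storage_gb >= MIN_LOCAL_STORAGE_GB
    ]
    if len(eligible) < MIN_DB_SERVERS:
        raise ValueError(f"need at least {MIN_DB_SERVERS} eligible DB Servers, found {len(eligible)}")
    return eligible


if __name__ == "__main__":
    servers = [
        DbServer("dbserver-1", 96, 1000.0, 900.0),
        DbServer("dbserver-2", 96, 1000.0, 900.0),
        DbServer("dbserver-3", 96, 100.0, 900.0),  # too little free memory
    ]
    print([s.name for s in default_selection(servers)])  # ['dbserver-1', 'dbserver-2']
```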

Note:

You cannot add or remove DB Servers after provisioning an AVMC.

Autonomous VM Cluster resources

VM count or Node Count: Denotes the number of database servers used by the AVMC. This read-only value depends on the number of DB Servers selected for the AVMC.

Maximum number of Autonomous Container Databases: The number of ACDs specified represents the upper limit on ACDs. These ACDs must be created separately as needed. ACD creation also requires 8 available ECPUs or 2 available OCPUs per node.

CPU count per VM or node: Specify the CPU count for each individual VM. The minimum value is 40 ECPUs or 10 OCPUs per VM.

Database memory per CPU (GB): The memory per CPU allocated for the Autonomous Databases in the Autonomous VM Cluster.

Allocate Storage for Local Backups: For Exadata Cloud@Customer, you can check this option to configure the Exadata storage to enable local database backups.

Database storage (TB): Data storage allocated for Autonomous Database creation in the Autonomous VM Cluster. The minimum value is 5TB.

The terms VM and node are used interchangeably across Oracle Exadata Cloud@Customer and Oracle Public Cloud deployments.
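The stated minimums for these fields can be checked before you submit the page. The following Python sketch is illustrative only: the function names are hypothetical, and it encodes just the numbers given above (40 ECPUs or 10 OCPUs per VM, 5 TB of database storage, and 8 ECPUs or 2 OCPUs free per node for each new ACD).

```python
from typing import List

# Minimums stated for the resource fields above.
MIN_CPU_PER_NODE = {"ECPU": 40, "OCPU": 10}
ACD_CPU_PER_NODE = {"ECPU": 8, "OCPU": 2}  # free CPUs per node needed to create one ACD
MIN_DATABASE_STORAGE_TB = 5


def validate_request(compute_model: str, cpu_per_node: int, database_storage_tb: float) -> List[str]:
    """Check the CPU and storage fields against the documented minimums."""
    model = compute_model.upper()
    if model not in MIN_CPU_PER_NODE:
        return [f"unknown compute model {compute_model!r}"]
    errors: List[str] = []
    if cpu_per_node < MIN_CPU_PER_NODE[model]:
        errors.append(f"CPU count per node must be at least {MIN_CPU_PER_NODE[model]} {model}s")
    if database_storage_tb < MIN_DATABASE_STORAGE_TB:
        errors.append(f"database storage must be at least {MIN_DATABASE_STORAGE_TB} TB")
    return errors


def can_create_acd(compute_model: str, available_cpu_per_node: int) -> bool:
    """True if each node still has enough free CPUs for one more ACD."""
    return available_cpu_per_node >= ACD_CPU_PER_NODE[compute_model.upper()]


if __name__ == "__main__":
    print(validate_request("OCPU", 8, 5))  # ['CPU count per node must be at least 10 OCPUs']
```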

As you configure the resource parameters, the aggregate resources needed to create the Autonomous Exadata VM Cluster and the formulae used to calculate these values are displayed on the right side of the resource configuration section.

The total resources requested for the Autonomous Exadata VM Cluster are calculated using the following formulas:

CPU Count = CPU Count per Node x Node Count

Memory = [CPU Count per Node x Database Memory per CPU + Internal Database Service Memory: (40GB)] x Node Count

Local Storage = Internal Local Service Storage: ([Autonomous VM Cluster storage: xxGB] + [Autonomous Container Database storage: 100GB + 50GB x ACD Count] x 1.03) x Node Count

In the above formula, Autonomous VM Cluster storage value depends on the Exadata system model used, as follows:

- X7-2 and X8-2 systems: 137GB

- X8M-2 & X9M-2 systems: 184GB

Exadata Storage = [User Data Storage + Internal Database Service Storage: ( 200GB + 50GB x ACD Count x Node Count )] x 1.25
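The formulas above translate directly into code. The Python sketch below implements them as written, with hypothetical function names. Following the bracket structure of the Local Storage formula, the 1.03 factor multiplies only the ACD storage term. The example assumes 1 TB = 1024 GB and a hypothetical 2 GB of database memory per ECPU.

```python
# Autonomous VM Cluster storage per node by Exadata system model, in GB.
AVMC_STORAGE_GB = {"X7-2": 137, "X8-2": 137, "X8M-2": 184, "X9M-2": 184}
INTERNAL_DB_SERVICE_MEMORY_GB = 40


def cpu_count(cpu_per_node: int, node_count: int) -> int:
    return cpu_per_node * node_count


def memory_gb(cpu_per_node: int, memory_per_cpu_gb: float, node_count: int) -> float:
    return (cpu_per_node * memory_per_cpu_gb + INTERNAL_DB_SERVICE_MEMORY_GB) * node_count


def local_storage_gb(system_model: str, acd_count: int, node_count: int) -> float:
    # As written, the 1.03 factor applies to the ACD storage term only.
    acd_storage = 100 + 50 * acd_count
    return (AVMC_STORAGE_GB[system_model] + acd_storage * 1.03) * node_count


def exadata_storage_gb(user_data_gb: float, acd_count: int, node_count: int) -> float:
    internal = 200 + 50 * acd_count * node_count
    return (user_data_gb + internal) * 1.25


if __name__ == "__main__":
    nodes, acds = 2, 1
    print(cpu_count(40, nodes))                       # 80
    print(memory_gb(40, 2, nodes))                    # 240
    print(local_storage_gb("X8M-2", acds, nodes))     # 677.0
    print(exadata_storage_gb(5 * 1024, acds, nodes))  # 6775.0
```

With 2 nodes and 1 ACD, the per-node results are 120 GB of memory and 338.5 GB of local storage on X8M-2, and the Exadata storage total is 6775 GB (about 6.6 TB), which lines up with the minimums listed under Resource Requirements.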

Network Settings

Virtual cloud network: The virtual cloud network (VCN) in which you want to create the new Autonomous Exadata VM Cluster.

Subnet: A subnet within the above selected VCN for the new Autonomous Exadata VM Cluster.

Optionally, you can use network security groups to control traffic. To do so, select the corresponding check box and choose a Network Security Group from the list.

Click + Another Network Security Group to add more network security groups.

You can use IPv4/IPv6 dual-stack networking, enabling both IPv4 and IPv6 addresses. You can enable this option when creating the VCN and the subnet, and then use that subnet (which has both IPv4 and IPv6 addresses) while provisioning an AVMC.

APPLIES TO: Oracle Public Cloud only

VM cluster network

The VM cluster network in which to create the new Autonomous Exadata VM Cluster.

APPLIES TO: Exadata Cloud@Customer only

Automatic maintenance

Optionally, you can configure the automatic maintenance schedule. On Oracle Public Cloud, click Modify maintenance. On Exadata Cloud@Customer, click Modify maintenance schedule.

You can then change the maintenance schedule from the default (No preference, which permits Oracle to schedule maintenance as needed) by selecting Specify a schedule and then selecting the months, weeks, days, and hours for the schedule. You can also set a lead time for receiving a notification message from Oracle about upcoming maintenance.

When you are finished, save your changes.

For guidance on choosing a custom schedule, see Settings in Maintenance Schedule that are Customizable.

License Type

The license type you wish to use for the new Autonomous Exadata VM Cluster.

You have the following options:

Bring your own license: If you choose this option, make sure you have the proper entitlements for the new service instances that you create.

License included: With this choice, the cost of the cloud service includes a license for the Database service.

Your choice for the license type affects metering for billing.

Show/hide advanced options

By default, advanced options are hidden. Click Show advanced options to display them.

Advanced options: Management

If you want to set a time zone other than UTC or the browser-detected time zone, then select the Select another time zone option, select a Region or country, and select the corresponding Time zone. If you do not see the region or country you want, select Miscellaneous and choose an appropriate Time zone.

The default time zone for the Autonomous Exadata VM Cluster is UTC, but you can specify a different time zone. The time zone options are those supported in both the java.util.TimeZone class and the Oracle Linux operating system.

You cannot modify the time zone settings of an Autonomous Exadata VM Cluster that is already provisioned. If needed, you can create a service request in My Oracle Support. For help with filing a support request, see Create a Service Request in My Oracle Support.

Advanced options: Listener

If you want to choose a Single Client Access Name (SCAN) listener port for Transport Layer Security (TLS) and non-TLS from a range of available ports, enter a port number for Non-TLS port or TLS port in the permissible range (1024 - 8999).

You can also choose mutual TLS (mTLS) authentication by selecting the Enable mutual TLS (mTLS) authentication check-box.

The default port number is 2484 for TLS and 1521 for non-TLS authentication modes.

The port numbers 1522, 1525, 5000, 6100, 6200, 7060, 7070, 7879, 8080, 8181, 8888, and 8895 are reserved for special purposes and cannot be used as Non-TLS or TLS port numbers.

The SCAN listener ports cannot be changed after provisioning the AVMC resource.

As ORDS certificates are one-way TLS certificates, choosing between one-way TLS and mutual TLS (mTLS) is applicable only to database TLS certificates.
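Because the SCAN listener ports cannot be changed after provisioning, it can be worth validating them beforehand. This Python sketch encodes the permissible range, the reserved ports, and the defaults listed above; `validate_scan_ports` is a hypothetical helper, and the check that the TLS and Non-TLS ports differ is an assumption not stated in this section.

```python
from typing import List

# Values from the Listener options described above.
RESERVED_PORTS = frozenset({1522, 1525, 5000, 6100, 6200, 7060, 7070, 7879, 8080, 8181, 8888, 8895})
PORT_RANGE = range(1024, 9000)  # permissible range 1024-8999, inclusive
DEFAULT_TLS_PORT = 2484
DEFAULT_NON_TLS_PORT = 1521


def validate_scan_ports(tls_port: int = DEFAULT_TLS_PORT,
                        non_tls_port: int = DEFAULT_NON_TLS_PORT) -> List[str]:
    """Return a list of problems with the chosen SCAN listener ports."""
    errors: List[str] = []
    for label, port in (("TLS", tls_port), ("Non-TLS", non_tls_port)):
        if port not in PORT_RANGE:
            errors.append(f"{label} port {port} is outside 1024-8999")
        elif port in RESERVED_PORTS:
            errors.append(f"{label} port {port} is reserved")
    # Assumption: a single port cannot serve both listeners.
    if tls_port == non_tls_port:
        errors.append("TLS and Non-TLS ports must differ")
    return errors


if __name__ == "__main__":
    print(validate_scan_ports())               # []
    print(validate_scan_ports(tls_port=6200))  # ['TLS port 6200 is reserved']
```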

Advanced options: Security attributes

Add a security attribute to control access for your AVMC resource using Zero Trust Packet Routing (ZPR) policies. To add a security attribute, select a Namespace, and provide the Key and Value for the security attribute.

Click Add security attribute to add additional security attributes.

To select a security attribute at this step, you must already have set up security attributes with Zero Trust Packet Routing. For more information, see About Zero Trust Packet Routing.

You can also add security attributes to existing AVMCs. See Configure Zero Trust Packet Routing (ZPR) for an AVMC for details.

Advanced options: Tags

If you want to use tags, add tags by selecting a Tag Namespace, Tag Key, and Tag Value.

Tagging is a metadata system that allows you to organize and track resources within your tenancy. See Tagging Dedicated Autonomous Database Cloud Resources.

To be able to create 23ai databases, you must provision the AVMC with appropriate tags. See 23ai Database Software Version Tag Requirements for details.

- Optionally, you can save the resource configuration as a stack by clicking Save as Stack. You can then use the stack to create the resource through the Resource Manager service.

Enter the following details on the Save as Stack dialog, and click Save.

- Name: Optionally, enter a name for the stack.

- Description: Optionally, enter a description for this stack.

- Save in compartment: Select a compartment where this Stack will reside.

- Tag namespace, Tag key, and Tag value: Optionally, apply tags to the stack.

For requirements and recommendations for Terraform configurations used with Resource Manager, see Terraform Configurations for Resource Manager. To provision the resources defined in your stack, apply the configuration.

- Submit your details to create the Autonomous Exadata VM Cluster.