Security

Find out about application and run security in Data Flow.

Before you can create, manage, and run applications in Data Flow, the tenancy administrator (or any user with the privileges to create buckets and modify IAM policies) must create specific storage buckets and the associated IAM policies. Data Flow can't function until these setup steps are completed in Object Storage and IAM.
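As a rough illustration of the IAM half of this setup, the Python sketch below renders policy statements in the general OCI form `Allow <subject> to <verb> <resource-type> in <location> [where <condition>]`. The group names (`dataflow-admins`, `dataflow-users`), the bucket name `dataflow-logs`, and the particular set of statements are assumptions chosen for illustration. Check the `dataflow-family` resource type and the `target.bucket.name` condition variable against the Data Flow and Object Storage policy references before applying anything.

```python
from dataclasses import dataclass

# Hypothetical names used for illustration only; substitute your own.
ADMIN_GROUP = "dataflow-admins"
USER_GROUP = "dataflow-users"
LOG_BUCKET = "dataflow-logs"

# The four IAM verbs, from least to most privileged.
VERBS = ("inspect", "read", "use", "manage")


@dataclass(frozen=True)
class PolicyStatement:
    """One statement: Allow <subject> to <verb> <resource> in <location> [where <condition>]."""
    subject: str
    verb: str
    resource: str
    location: str = "tenancy"
    condition: str = ""

    def __post_init__(self) -> None:
        # Reject a mistyped verb before the statement is ever submitted.
        if self.verb not in VERBS:
            raise ValueError(f"unknown IAM verb: {self.verb!r}")

    def render(self) -> str:
        text = f"Allow {self.subject} to {self.verb} {self.resource} in {self.location}"
        if self.condition:
            text += f" where {self.condition}"
        return text


def dataflow_setup_statements() -> list:
    """Illustrative statements an administrator might create before Data Flow is used."""
    log_bucket_only = f"target.bucket.name='{LOG_BUCKET}'"
    statements = [
        # Administrators: full control of Data Flow resources, plus the log bucket's objects.
        PolicyStatement(f"group {ADMIN_GROUP}", "manage", "dataflow-family"),
        PolicyStatement(f"group {ADMIN_GROUP}", "read", "buckets"),
        PolicyStatement(f"group {ADMIN_GROUP}", "manage", "objects", condition=log_bucket_only),
        # Users: a narrower verb on Data Flow resources and read-only access to the logs.
        PolicyStatement(f"group {USER_GROUP}", "use", "dataflow-family"),
        PolicyStatement(f"group {USER_GROUP}", "read", "buckets"),
        PolicyStatement(f"group {USER_GROUP}", "read", "objects", condition=log_bucket_only),
    ]
    return [s.render() for s in statements]


if __name__ == "__main__":
    for line in dataflow_setup_statements():
        print(line)
```

Running the script prints one statement per line, ready to review before pasting into a policy. Modelling statements as data rather than raw strings makes it easy to catch a mistyped verb early; what each verb actually permits for Data Flow resources is defined by the Data Flow policy reference, not by this sketch.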

Run Security


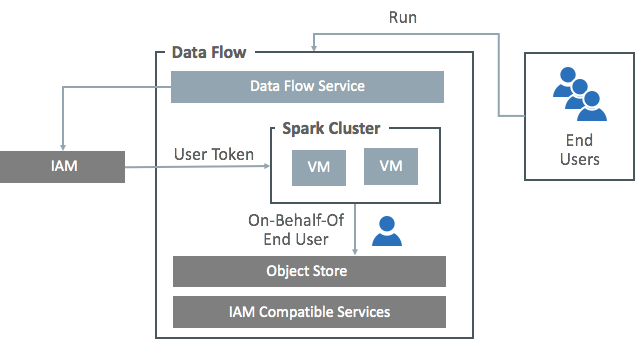

Spark applications that run in Data Flow use the same IAM permissions as the user who starts the run. The Data Flow service creates a security token in the Spark cluster that lets the application assume the identity of that user. As a result, the Spark application can access data transparently, based on the end user's IAM permissions, and you don't need to hard-code credentials in your Spark application when it accesses IAM-compatible systems.
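The practical effect of run security is that the Spark code carries no credentials at all. The PySpark-style sketch below reads a CSV file from Object Storage through an `oci://<bucket>@<namespace>/<path>` URI. The bucket, namespace, and object path are hypothetical, and the function accepts any SparkSession-like object, so the logic can be exercised without a cluster.

```python
def object_storage_uri(bucket: str, namespace: str, path: str) -> str:
    """Build an Object Storage URI in the oci://<bucket>@<namespace>/<path> form."""
    if not bucket or not namespace:
        raise ValueError("bucket and namespace must be non-empty")
    return f"oci://{bucket}@{namespace}/{path.lstrip('/')}"


def read_csv_as_run_user(spark, bucket: str, namespace: str, path: str):
    """Read a CSV file using only the identity of the user who started the run.

    There is deliberately no API key, auth token, or config file here: in Data Flow,
    the security token the service places in the Spark cluster authorizes the read.
    """
    uri = object_storage_uri(bucket, namespace, path)
    return spark.read.option("header", "true").csv(uri)


# Usage inside a Data Flow PySpark application (hypothetical names):
#   df = read_csv_as_run_user(spark, "example-bucket", "example-namespace", "input/sales.csv")
#   df.show(5)
```

Because nothing in the code carries credentials, whether the read succeeds is decided entirely by the IAM permissions of the user who started the run.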

If the service you're contacting isn't IAM-compatible, use a credential management or key management solution, such as Oracle Cloud Infrastructure Key Management.
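For a system outside IAM, such as a database that expects a user name and password, the credential still shouldn't live in the application source. The sketch below assumes a hypothetical `fetch` callable that returns a secret's Base64-encoded content from a secret store. With OCI Vault, such a fetcher would presumably wrap the Secrets service's get-secret-bundle call; verify that in the SDK reference. The secret identifier and user name are placeholders.

```python
import base64
from typing import Callable, Dict

# Hypothetical interface: given a secret identifier, return the secret's
# Base64-encoded content from a secret store (for example, a vault).
SecretFetcher = Callable[[str], str]


def resolve_secret(fetch: SecretFetcher, secret_id: str) -> str:
    """Fetch a credential at run time and decode it to text."""
    encoded = fetch(secret_id)
    return base64.b64decode(encoded).decode("utf-8")


def jdbc_properties(user: str, fetch: SecretFetcher, secret_id: str) -> Dict[str, str]:
    """Build connection properties for a database that isn't IAM-compatible.

    The password is looked up when the run executes, so it never appears in the
    application source or in version control; only the secret identifier does.
    """
    return {"user": user, "password": resolve_secret(fetch, secret_id)}


def in_memory_fetcher(store: Dict[str, str]) -> SecretFetcher:
    """A stand-in fetcher for local testing only; real runs should use a secret store."""
    def fetch(secret_id: str) -> str:
        if secret_id not in store:
            raise KeyError(f"no such secret: {secret_id}")
        return store[secret_id]
    return fetch
```

The resulting dictionary has the shape PySpark's `DataFrameReader.jdbc` expects for its `properties` argument, for example `spark.read.jdbc(url, "sales", properties=props)`. Keeping the fetcher injectable lets you swap the real secret store for an in-memory one in tests.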

For more information, see Oracle Cloud Infrastructure IAM in the IAM documentation.