Enterprise Manager Warehouse

The Enterprise Manager (EM) repository contains critical information such as operational, performance and configuration metrics, and target inventory data for the targets monitored by Enterprise Manager. EM Warehouse provides a convenient way to access and analyze this data using cloud-based tools and services.

EM Warehouse Deployment Options

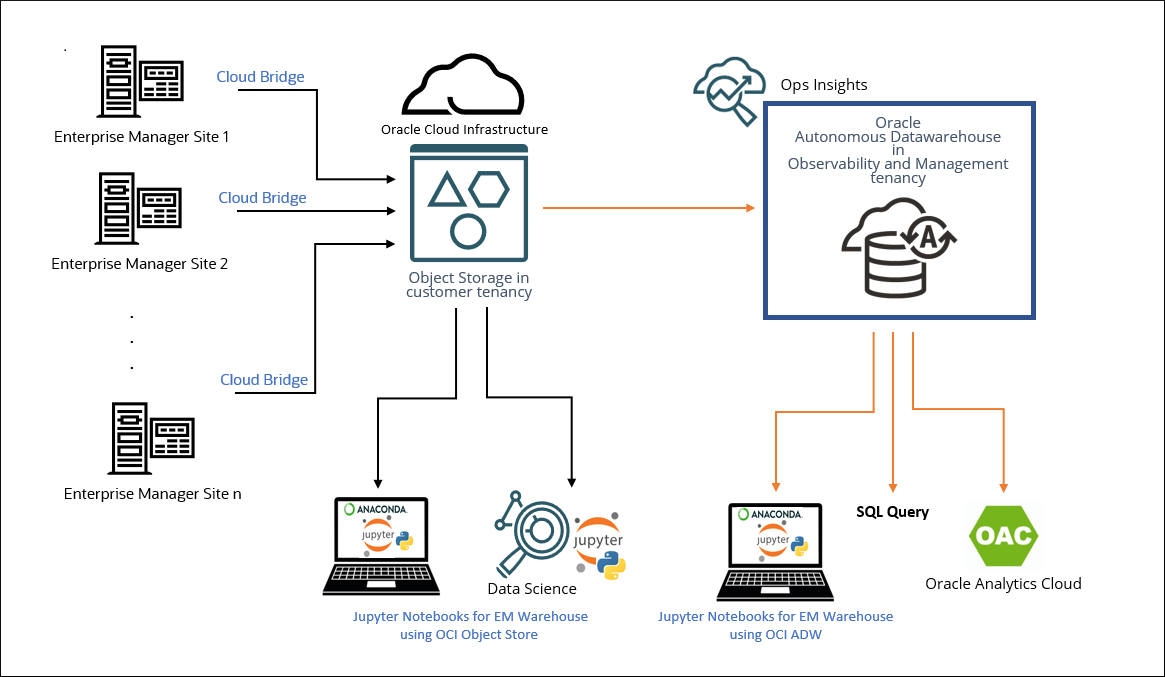

There are two options for deploying EM Warehouse that take different approaches to storing EM data and that provide you with different data analysis options.

- Option 1

Enterprise Manager data is loaded into OCI Object Store. With this option, you can use the published Jupyter Notebooks or build your own analytics on top of the data in OCI Object Store.

- Option 2

Enterprise Manager data is loaded into an Autonomous Data Warehouse (ADW) in OCI's Ops Insights service. With this option, you can write your own SQL scripts, applications, or Jupyter Notebooks, or use Oracle Analytics Cloud for further analysis of the data.
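For Option 1, building your own analytics usually starts with finding out which objects have landed in the bucket. The following is a minimal sketch, not an official procedure: it assumes a client that behaves like the OCI Python SDK's `ObjectStorageClient` (its `list_objects` call and the `next_start_with` paging field), and the helper name `list_object_names` as well as any bucket name or prefix you pass in are hypothetical. The layout and format of the EM data inside the bucket are not described here, so the sketch only lists object names.

```python
from typing import Any, List


def list_object_names(client: Any, namespace: str, bucket: str,
                      prefix: str = "") -> List[str]:
    """Return the names of all objects in a bucket, following pagination.

    `client` is assumed to expose list_objects(namespace, bucket, **kwargs)
    in the style of oci.object_storage.ObjectStorageClient.
    """
    names: List[str] = []
    start = None
    while True:
        kwargs = {}
        if prefix:
            kwargs["prefix"] = prefix  # restrict the listing to one "folder"
        if start:
            kwargs["start"] = start    # resume where the previous page ended
        response = client.list_objects(namespace, bucket, **kwargs)
        names.extend(obj.name for obj in response.data.objects)
        start = response.data.next_start_with
        if not start:
            return names


# Possible usage with the OCI Python SDK (requires `pip install oci` and a
# configured ~/.oci/config; the bucket name below is a placeholder):
#
#   import oci
#   config = oci.config.from_file()
#   client = oci.object_storage.ObjectStorageClient(config)
#   namespace = client.get_namespace().data
#   print(list_object_names(client, namespace, "my-em-warehouse-bucket"))
```

Passing the client in as a parameter keeps the helper independent of how you authenticate, and makes it easy to exercise with a stand-in client before pointing it at a real tenancy.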

EM Warehouse continuously ingests Enterprise Manager repository infrastructure monitoring and configuration metric data (from one or more repositories) and stores it in an ADW or OCI Object Store. With direct access to raw observability and manageability data, you can gain insight into the current and future state of targets, address use cases such as finding noisy neighbors across targets, and run statistical models to forecast the behavior of EM-managed targets.

EM Warehouse retains operational data from Enterprise Manager Cloud Control instances for the most recent 25 months, counted back from the current date.
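To see what the 25-month window means for a given query, you can compute the earliest date that should still be covered. This is a rough sketch under one assumption: that the window is counted in calendar months back from today. The exact boundary the service applies is not specified here, and the function name `retention_cutoff` is illustrative only.

```python
import calendar
from datetime import date

RETENTION_MONTHS = 25  # retention period stated in the text


def retention_cutoff(today: date, months: int = RETENTION_MONTHS) -> date:
    """Return the date `months` calendar months before `today`.

    Assumption: retention is counted in whole calendar months.
    """
    # Work in "months since year 0" so year boundaries roll over cleanly.
    total = today.year * 12 + (today.month - 1) - months
    year, month_index = divmod(total, 12)
    month = month_index + 1
    # Clamp the day for shorter months (for example, the 31st in February).
    day = min(today.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


if __name__ == "__main__":
    print("Earliest retained date:", retention_cutoff(date.today()))
```

Data older than the returned date should be treated as unavailable when you plan long-range trend or forecasting analyses.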

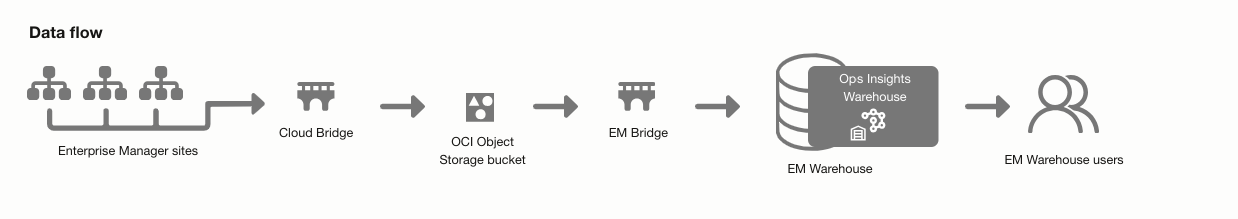

As the graphic shows, EM repository data is uploaded via Cloud Bridge to an OCI Object Storage bucket in your tenancy. From there, data is transferred via EM Bridge to an Ops Insights Warehouse. EM Warehouse is a schema within the Ops Insights Autonomous Data Warehouse.

EM Warehouse Licensing

The use of Enterprise Manager Warehouse (EM Warehouse) features requires an OCI Ops Insights Service subscription.

Pricing

The use of Enterprise Manager Warehouse (EM Warehouse) features requires the following OCI Ops Insights Service subscriptions:

- Oracle Cloud Infrastructure Ops Insights for Warehouse - Extract - Gigabyte Per Month

- Oracle Cloud Infrastructure Ops Insights for Warehouse - Instance - OCPU Per Hour
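The two metered items combine into a monthly estimate in a straightforward way: extracted gigabytes times a per-gigabyte rate, plus OCPU-hours times a per-OCPU-hour rate. The sketch below assumes simple linear billing, which may not match your contract. It contains no real prices: every rate is a parameter you must take from your own price list, and the function name `estimate_monthly_cost` is hypothetical.

```python
def estimate_monthly_cost(extract_gb: float,
                          ocpus: float,
                          hours_in_month: float,
                          rate_per_gb_month: float,
                          rate_per_ocpu_hour: float) -> float:
    """Estimate a monthly EM Warehouse charge from the two metered items.

    Assumption: both items are billed linearly, with no tiers or discounts.
    """
    extract_cost = extract_gb * rate_per_gb_month
    instance_cost = ocpus * hours_in_month * rate_per_ocpu_hour
    return extract_cost + instance_cost


if __name__ == "__main__":
    # Placeholder figures only; substitute the rates from your price list.
    total = estimate_monthly_cost(
        extract_gb=100, ocpus=2, hours_in_month=744,
        rate_per_gb_month=0.5, rate_per_ocpu_hour=0.25,
    )
    print(f"Estimated monthly cost: {total:.2f}")
```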