Linear Regression

Averages and maximums are computed over either hourly or daily aggregates of the raw data, depending on the global time context: hourly aggregates are used when the time period falls within the last seven days; otherwise, daily aggregates are used.
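The selection rule above can be sketched as a small function. This is an illustration only, not the product's implementation: the function name, the constant, and the treatment of a period that starts exactly seven days ago are all assumptions.

```python
from datetime import datetime, timedelta

# From the text: hourly aggregates apply within the last seven days.
HOURLY_WINDOW = timedelta(days=7)


def aggregation_granularity(period_start: datetime, now: datetime) -> str:
    """Return 'hourly' if the period starts within the last seven days, else 'daily'."""
    # Assumption: a period starting exactly seven days ago still counts as "within".
    return "hourly" if now - period_start <= HOURLY_WINDOW else "daily"
```

For example, with `now` set to 31 January, a period starting on 28 January would use hourly aggregates, while a period starting on 1 January would use daily aggregates.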

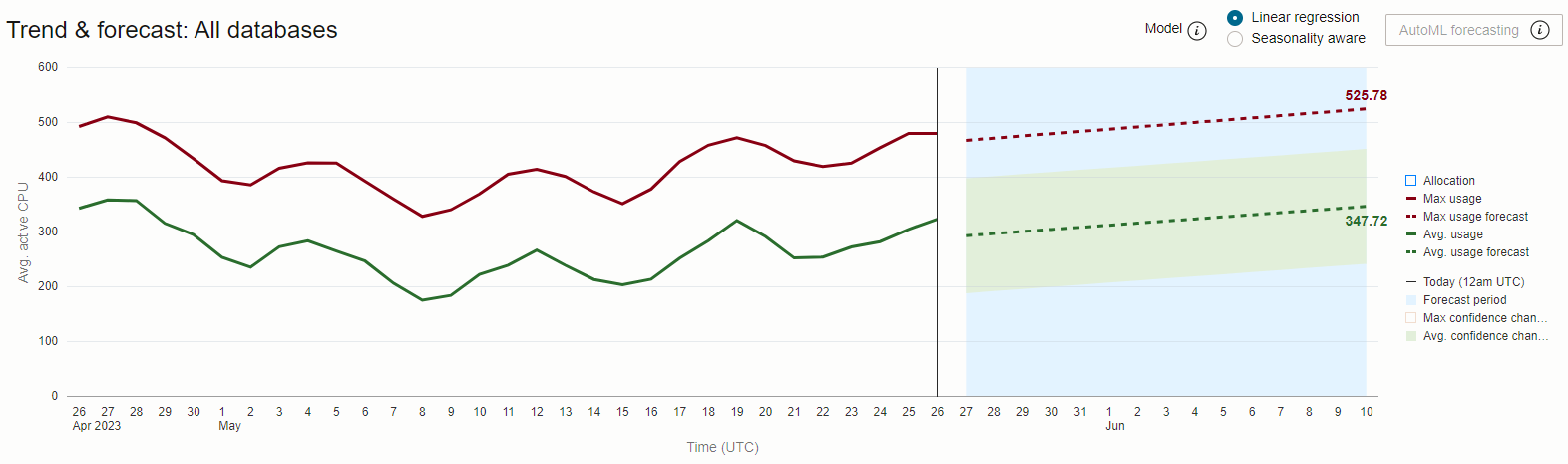

Forecast periods are set to half the length of the trending time period. For example, 30-day trends are projected 15 days forward, and 90-day trends are projected 45 days forward.
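As a rough illustration of how such a trend and forecast could be produced, the sketch below fits an ordinary least-squares line to evenly spaced observations and projects it forward by half the trend length. The function names are hypothetical, and the fitting details (evenly spaced points, integer division for odd lengths) are assumptions rather than a description of the product's internals.

```python
from typing import List, Tuple


def fit_line(values: List[float]) -> Tuple[float, float]:
    """Least-squares fit of y = slope * x + intercept, with x = 0, 1, ..., n - 1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    sxx = sum((x - mean_x) ** 2 for x in range(n))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def forecast(values: List[float]) -> List[float]:
    """Project the fitted trend forward by half the length of the trend period."""
    slope, intercept = fit_line(values)
    n = len(values)
    horizon = n // 2  # e.g. 30 observations -> 15 forecast points
    return [slope * x + intercept for x in range(n, n + horizon)]
```

Passing 30 daily values to `forecast` returns 15 projected values, matching the 30-day trend and 15-day forecast described above.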

The above chart shows daily average and maximum CPU usage over 30 days for the HR-Dev database. There is a consistent and considerable gap between the Avg Usage and Max Usage data series, indicating a high level of variability in CPU demand during the day.

The Max Usage trend and forecast are both very close to, or touching, the Max Allocation for the database, indicating a risk of capacity exhaustion if demand increases or new workload is introduced.

The difference here between forecast Max Usage (~29 Avg Active CPU) and forecast Avg Usage (~15 Avg Active CPU) is the difference between how much CPU is required to run all work safely and how much CPU is actually required to do all work. When CPU resources are “hard allocated” to databases, this difference is the amount of resource being paid for minus the amount of resource being used, and is thus an opportunity cost induced by highly variable CPU demand.
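The arithmetic behind this gap can be worked through with the approximate values read from the chart. The sketch below is illustrative only: the function name is hypothetical, and expressing the gap as a share of forecast Max Usage is an added convenience, not a metric defined in the text.

```python
from typing import Tuple

# Approximate forecast values read from the chart, in Avg Active CPU.
FORECAST_MAX_USAGE = 29.0
FORECAST_AVG_USAGE = 15.0


def variability_gap(max_usage: float, avg_usage: float) -> Tuple[float, float]:
    """Return the gap between peak and average usage, and its share of peak usage."""
    gap = max_usage - avg_usage
    return gap, gap / max_usage


gap, share = variability_gap(FORECAST_MAX_USAGE, FORECAST_AVG_USAGE)
print(f"{gap:.0f} Avg Active CPU, {share:.0%} of forecast Max Usage")
```

With these figures the gap is about 14 Avg Active CPU, or roughly 48% of the forecast Max Usage.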

One potential way to mitigate this imputed cost of variability is to share CPU resources among several databases and allocate them dynamically in response to changing demand.

Oracle Autonomous AI Database with Auto-scale enabled offers precisely such a solution.