Query External Data with Data Catalog

Oracle Cloud Infrastructure Data Catalog is the metadata management service for Oracle Cloud that helps you discover data and support data governance. It provides an inventory of assets, a business glossary, and a common metastore for data lakes.

Autonomous AI Database can leverage this metadata to dramatically simplify management for access to your data lake's object store. Instead of manually defining external tables to access your data lake, use the external tables that are defined and managed automatically. These tables will be found in Autonomous AI Database protected schemas that are kept up to date with changes in Data Catalog.

For more information about Data Catalog, please refer to Data Catalog documentation.

- About Querying with Data Catalog

By synchronizing with Data Catalog metadata, Autonomous AI Database automatically creates external tables for each logical entity harvested by Data Catalog. These external tables are defined in database schemas that are fully managed by the metadata synchronization process. Users can immediately query data without having to manually derive the schema (columns and data types) for external data sources and manually create external tables. - Concepts Related to Querying with Data Catalog

An understanding of the following concepts is necessary for querying with Data Catalog. - Synchronization Mapping

The synchronization process creates and updates Autonomous AI Database schemas and external tables based on Data Catalog data assets, folders, logical entities, attributes and relevant custom overrides. - Typical Workflow with Data Catalog

There is a typical workflow of actions performed by users who want to query with Data Catalog. - Example: MovieStream Scenario

In this scenario, Moviestream is capturing data in a landing zone on object storage. Much of this data, but not necessarily all, is then used to feed an Autonomous AI Database. Prior to feeding Autonomous AI Database, the data is transformed, cleansed and subsequently stored in the "gold" area. - Example: Partitioned Data Scenario

This scenario illustrates how to create external tables in Autonomous AI Database that are based on Data Catalog logical entities harvested from partitioned data in Object Store.

Parent topic: Query External Data with Autonomous AI Database

About Querying with Data Catalog

By synchronizing with Data Catalog metadata, Autonomous AI Database automatically creates external tables for each logical entity harvested by Data Catalog. These external tables are defined in database schemas that are fully managed by the metadata synchronization process. Users can immediately query data without having to manually derive the schema (columns and data types) for external data sources and manually create external tables.

Synchronization is dynamic, keeping the Autonomous AI Database up-do-date with respect to changes to the underlying data, reducing administration cost as it automatically maintains hundreds to thousands of tables. It also allows multiple Autonomous AI Database instances to share the same Data Catalog, further reducing management costs and providing a common set of business definitions.

The Data Catalog folders/buckets are containers that sync with Autonomous Database schemas. Logical entities within those folders/buckets map to Autonomous Database external tables. These schemas and external tables are automatically generated and maintained through the sync process:

- Folders/Buckets map to database schemas that are for organizational purposes only.

- The organization is meant to be consistent with the data lake and minimize confusion when accessing data thru different paths.

- Data Catalog is the source of truth for the tables contained within schemas. Changes made in the Data Catalog update the schema's tables during a subsequent sync.

To use this capability, a Database Data Catalog Administrator initiates a connection to a Data Catalog instance, selects which data assets and logical entities to synchronize, and runs the sync. The sync process creates schemas and external tables based on the selected Data Catalog harvested data assets and logical entities. As soon as the external tables are created, Data Analysts can start querying their data without having to manually derive the schema for external data sources and create external tables.

The

DBMS_DCAT Package is

available for performing the tasks required to query Data Catalog object store data assets. See DBMS_DCAT Package.

Parent topic: Query External Data with Data Catalog

Concepts Related to Querying with Data Catalog

An understanding of the following concepts is necessary for querying with Data Catalog.

- Data Catalog

-

Data Catalog harvests data assets that point to the object store data sources you want to query with Autonomous AI Database. From Data Catalog you can specify how the data is organized during harvesting, supporting different file organization patterns. As part of the Data Catalog harvesting process, you can select the buckets and files you want to manage within the asset. For further information, see Data Catalog Overview.

- Object Stores

-

Object Stores have buckets containing a variety of objects. Some common types of objects found in these buckets include: CSV, parquet, avro, json, and ORC files. Buckets generally have a structure or a design pattern to the objects they contain. There are many different ways to structure data and many different ways of interpreting these patterns.

For example, a typical design pattern uses top-level folders that represent tables. Files within a given folder share the same schema and contain data for that table. Subfolders are often used to represent table partitions (for example, a subfolder for each day). Data Catalog refers to each top-level folder as a logical entity, and this logical entity maps to an Autonomous AI Database external table.

- Connection

-

A connection is an Autonomous AI Database connection to a Data Catalog instance. For each Autonomous AI Database instance there can be connections to multiple Data Catalog instances. The Autonomous AI Database credential must have rights to access Data Catalog assets that have been harvested from object storage.

- Harvest

-

A Data Catalog process that scans object storage and generates the logical entities from your data sets.

- Data Asset

-

A data asset in Data Catalog represents a data source, which includes databases, Oracle Object Storage, Kafka, and more. Autonomous AI Database leverages Oracle Object Storage assets for metadata synchronization.

- Data Entity

-

A data entity in Data Catalog is a collection of data such as a database table or view, or a single file and normally has many attributes that describe its data.

- Logical Entity

-

In Data Lakes, numerous files typically comprise a single logical entity. For example, you may have daily clickstream files, and these files share the same schema and file type.

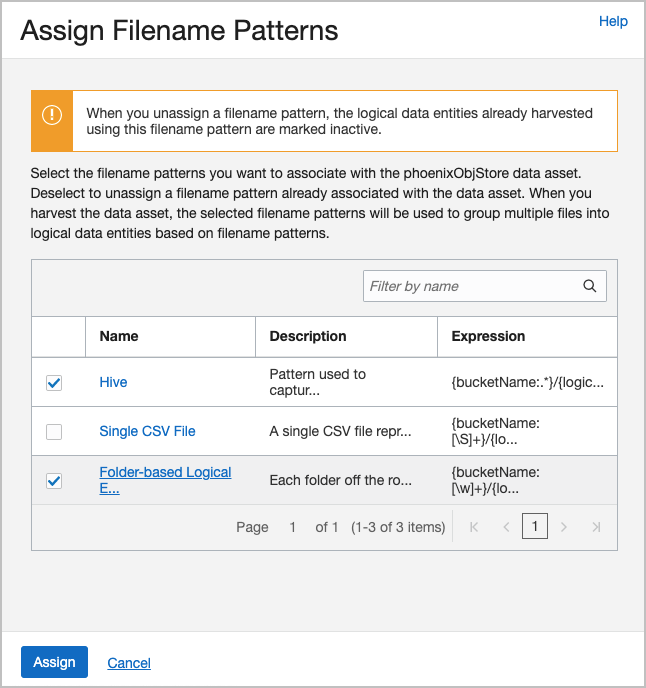

A Data Catalog logical entity is a group of Object Storage files that are derived during harvesting by applying filename patterns that have been created and assigned to a data asset.

- Data Object

-

A data object in Data Catalog refers to data assets and data entities.

- Filename Pattern

-

In a data lake, data may be organized in different ways. Typically, folders capture files of the same schema and type. You must register to Data Catalog how your data is organized. Filename patterns are used to identify how your data is organized. In Data Catalog, you can define filename patterns using regular expressions. When Data Catalog harvests a data asset with an assigned filename pattern, logical entities are created based on the filename pattern. By defining and assigning these patterns to data assets, multiple files can be grouped as logical entities based on the filename pattern.

- Synchronize (Sync)

-

Autonomous AI Database performs synchronizations with Data Catalog to automatically keep its database up-to-date with respect to changes to the underlying data. Synchronization can be performed manually, or on a schedule.

The sync process creates schemas and external tables based on the Data Catalog data assets and logical entities. These schemas are protected, which means their metadata is managed by Data Catalog. If you want to alter the metadata, you must make the changes in Data Catalog. The Autonomous AI Database schemas will reflect any changes after the next sync is run. For further details, see Synchronization Mapping.

Parent topic: Query External Data with Data Catalog

Synchronization Mapping

The synchronization process creates and updates Autonomous AI Database schemas and external tables based on Data Catalog data assets, folders, logical entities, attributes and relevant custom overrides.

| Data Catalog | Autonomous AI Database | Mapping Description |

|---|---|---|

|

Data asset and folder (object storage bucket) |

Schema name |

Default values: By default, the generated schema name in Autonomous AI Database has the following format:

Customizations: The defaultdata-asset-name and folder-name

can be customized by defining custom properties, business names and

display names to override these default names.

Examples:

|

| Logical entity | External table |

Logical entities are mapped to external tables. If the logical entity has a partitioned attribute, it is mapped to a partitioned external table. The external table name is derived from the corresponding logical entity's Display Name, or Business Name. If For example, if |

| Logical entity's attributes | External table columns |

Column names: The external table column names are derived from the corresponding logical entity's attribute display names, or business names. For logical entities derived from Parquet, Avro, and ORC files, the column name is always the display name of the attribute as it represents the field name derived from the source files. For attributes corresponding to a logical entity derived from CSV files, the following attribute fields are used in order of precedence for generating the column name:

Column type: The

For attributes corresponding to a logical entity derived

from Avro files with Column length: The

Column precision: The

For attributes corresponding to a logical entity derived

from Avro files with Column scale: The

|

Parent topic: Query External Data with Data Catalog

Typical Workflow with Data Catalog

There is a typical workflow of actions performed by users who want to query with Data Catalog.

The Database Data Catalog Query Admin or Database Admin grants READ access to the generated external tables so that Data Analysts and other database users can browse and query the external tables.

The table below describes each action in detail. For a description of the different user types included in this table, see Data Catalog Users and Roles.

The

DBMS_DCAT Package is available for performing the tasks

required to query Data Catalog object store data assets. See DBMS_DCAT Package.

| Action | Who is the user | Description |

|---|---|---|

|

Create policies |

Database Data Catalog Administrator |

The Autonomous AI Database resource principal or Autonomous AI Database user credential must have the appropriate permissions to manage Data Catalog and to read from object storage. More information: Required Credentials and IAM Policies. |

|

Create credentials |

Database Data Catalog Administrator |

Ensure database credentials are in place to access

Data Catalog and to query object store. The user calls

More information: DBMS_CLOUD CREATE_CREDENTIAL Procedure, Use Resource Principal with DBMS_CLOUD. |

|

Create connections to Data Catalog |

Database Data Catalog Administrator |

To initiate a connection between an Autonomous AI Database

instance and a Data Catalog instance the user calls

The connection to the Data Catalog instance must use a database credential object with sufficient Oracle Cloud Infrastructure (OCI) privileges. For example, the Resource Principal Service Token for the Autonomous AI Database instance or an OCI user with sufficient privileges can be used. Once the connection has been made, the Data Catalog instance is updated with the

DBMS_DCAT

namespace and custom properties (if they do not already exist).

The user can run a query to see the new connection including all

current connections:

More information: SET_DATA_CATALOG_CONN Procedure, UNSET_DATA_CATALOG_CONN Procedure. |

|

Create a selective sync |

Database Data Catalog Administrator |

Create a sync job by selecting the Data Catalog objects to sync. The user can:

More information: See CREATE_SYNC_JOB Procedure, DROP_SYNC_JOB Procedure, Synchronization Mapping |

|

Sync with Data Catalog |

Database Data Catalog Administrator |

The user initiates a sync operation. The sync is

initiated manually through the

The sync operation creates, modifies and drops external tables and schemas according to the Data Catalog contents and sync selections. Manual configuration is applied using Data Catalog Custom Properties. More information: See RUN_SYNC Procedure, CREATE_SYNC_JOB Procedure, Synchronization Mapping |

|

Monitor sync and view logs |

Database Data Catalog Administrator |

The user can view

the sync status by querying the

USER_LOAD_OPERATIONS view. After the sync

process has completed, the user can view a log of the sync results,

including details about the mappings of logical entities to external

tables.

More information: Monitoring and Troubleshooting Loads |

|

Grant privileges |

Database Data Catalog Query Administrator, Database Administrator |

The database Data Catalog Query Administrator or database Administrator must grant READ on generated external tables to data analyst users. This allows the data analysts to query the generated external tables. |

|

Browse and query external tables |

Data Analyst |

Data analysts are able to query the external tables through any tool or application that supports Oracle SQL. Data Analysts can review the synced schemas and tables in the DCAT$* schemas, and query the tables using Oracle SQL. More information: Synchronization Mapping |

|

Terminate connections to Data Catalog |

Database Data Catalog Administrator |

To remove an existing Data Catalog association, the user calls the UNSET_DATA_CATALOG_CONN procedure. This action is only done when you no longer plan on using Data Catalog and the external tables that are derived from the catalog. This action deletes Data Catalog metadata, and drops synced external tables from the Autonomous AI Database instance. The custom properties on Data Catalog and OCI policies are not affected. More information: UNSET_DATA_CATALOG_CONN Procedure |

Parent topic: Query External Data with Data Catalog

Example: MovieStream Scenario

In this scenario, Moviestream is capturing data in a landing zone on object storage. Much of this data, but not necessarily all, is then used to feed an Autonomous AI Database. Prior to feeding Autonomous AI Database, the data is transformed, cleansed and subsequently stored in the "gold" area.

Data Catalog is used to harvest these sources and then provide a business context to the data. Data Catalog metadata is shared with Autonomous AI Database, allowing Autonomous AI Database users to query those data sources using Oracle SQL. This data may be loaded into Autonomous AI Database or queried dynamically using external tables.

For more information on using Data Catalog, see Data Catalog Documentation.

Parent topic: Query External Data with Data Catalog

Example: Partitioned Data Scenario

This scenario illustrates how to create external tables in Autonomous AI Database that are based on Data Catalog logical entities harvested from partitioned data in Object Store.

The following example is based on Example: MovieStream Scenario and has been adapted to demonstrate integrating with partitioned data. Data Catalog is used to harvest these sources and then provide a business context to the data. For further details about this example, see Example: MovieStream Scenario.

For more information on using Data Catalog, see Data Catalog Documentation.

Parent topic: Query External Data with Data Catalog