Push Notifications to OCI Monitoring

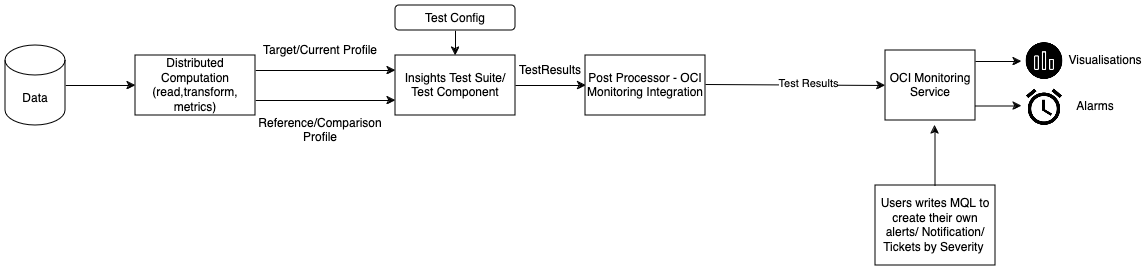

The Test or Test Suites component lets users configure test cases that check whether the metric values produced during profile computation breach a particular threshold. Any detected breach should be reported to the user in a way that is easy to visualize and track. To support this, the ML Monitoring Application provides a notification feature that pushes threshold breaches to OCI Monitoring.

Prerequisites

Because users are notified of threshold breaches, the thresholds must first be configured with the Test or Test Suites component, either in the Application Configuration or in the Test Configuration. The resulting Test Results are what the notifications are based on.
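As a rough illustration of what a threshold test does, the sketch below evaluates `TestGreaterThan`-style checks against metric values that a profile run has already computed. It uses the same thresholds as the sample configuration later on this page. `GreaterThanTest` and `run_tests` are hypothetical helpers written for this example and are not part of the ML Insights API, which runs the tests for you. The strict `>` comparison is also an assumption about the test's semantics.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class GreaterThanTest:
    """Hypothetical stand-in for a configured TestGreaterThan check."""
    scope: str             # feature name, or "dataset" for dataset-level metrics
    metric_key: str        # e.g. "Min" or "RowCount"
    threshold_value: float


def run_tests(metrics: Dict[str, Dict[str, float]],
              tests: List[GreaterThanTest]) -> Dict[str, str]:
    """Return "PASS" or "FAIL" for each test, keyed as "<scope>.<metric_key>"."""
    results = {}
    for test in tests:
        value = metrics[test.scope][test.metric_key]
        # Assumed semantics: pass only when the metric is strictly above the threshold.
        passed = value > test.threshold_value
        results[f"{test.scope}.{test.metric_key}"] = "PASS" if passed else "FAIL"
    return results


# Metric values a profile run might produce (made-up numbers for illustration).
profile_metrics = {"Age": {"Min": 16.0, "Max": 60.0}, "dataset": {"RowCount": 120.0}}
tests = [GreaterThanTest("Age", "Min", 17), GreaterThanTest("dataset", "RowCount", 40)]
print(run_tests(profile_metrics, tests))  # {'Age.Min': 'FAIL', 'dataset.RowCount': 'PASS'}
```

Here the `Age.Min` result is a threshold breach, which is the kind of result that ends up being pushed to OCI Monitoring.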

To push test results to OCI Monitoring, configure the appropriate IAM policies as described here: https://docs.oracle.com/en-us/iaas/Content/Security/Reference/monitoring_security.htm#iam-policies.

High-level Overview

The user authors an Application Configuration or a Test Configuration that computes metrics and runs tests on them:

- The Metrics component and the TestConfig component are needed to run tests on selected metrics.
- A post-processor called OCIMonitoringApplicationPostProcessor is configured with details such as compartment_id, namespace, and dimensions, which determine where and how the results appear in OCI Monitoring. This component sends the results of the tests run on metrics to OCI Monitoring.
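To make the data flow concrete, the sketch below turns test results into metric data points. It emits one entry per test, uses the test name as the metric name, encodes PASS/FAIL as 0/1, and attaches the configured dimensions. The dictionary layout loosely follows the OCI Monitoring `PostMetricData` request body (namespace, compartmentId, name, dimensions, datapoints). However, `build_metric_data` and the extra `feature_name` dimension are hypothetical, and the payload actually produced by OCIMonitoringApplicationPostProcessor may differ.

```python
from datetime import datetime, timezone
from typing import Dict, List

# Encoding of test outcomes as metric values (PASS = 0, FAIL = 1).
RESULT_VALUES = {"PASS": 0, "FAIL": 1}


def build_metric_data(test_results: List[Dict[str, str]],
                      compartment_id: str,
                      namespace: str,
                      dimensions: Dict[str, str]) -> List[Dict]:
    """Build one metric entry per test result (hypothetical payload shape)."""
    timestamp = datetime.now(timezone.utc).isoformat()
    entries = []
    for result in test_results:
        # Configured dimensions are shared by all entries; the hypothetical
        # feature_name dimension is added per test so results can be filtered.
        entry_dimensions = dict(dimensions, feature_name=result["feature_name"])
        entries.append({
            "namespace": namespace,
            "compartmentId": compartment_id,
            "name": result["test_name"],  # the metric name is the test name
            "dimensions": entry_dimensions,
            "datapoints": [{"timestamp": timestamp,
                            "value": RESULT_VALUES[result["status"]]}],
        })
    return entries


results = [
    {"test_name": "TestGreaterThan", "feature_name": "Age", "status": "FAIL"},
    {"test_name": "TestIsComplete", "feature_name": "Age", "status": "PASS"},
]
payload = build_metric_data(results, "<compartment_id>", "<namespace>",
                            {"monitor_id": "<monitor_id>"})
print([(e["name"], e["datapoints"][0]["value"]) for e in payload])
# [('TestGreaterThan', 1), ('TestIsComplete', 0)]
```

Because every entry carries the same `monitor_id` dimension, all results from one monitor can later be filtered together in Metrics Explorer.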

After a successful run of the ML Monitoring Application, you can verify from the OCI Console that the results of the tests set in the application configuration have been pushed to OCI Monitoring. The test result values can be visualized as graphs or charts and filtered by the dimensions that were set when configuring the post-processor component.

You can also configure alarms on the test result values using simple MQL queries, and specify how the resulting notifications are delivered.

Configure ML Monitoring Application to Push Notifications to OCI Monitoring

This section covers the steps necessary to configure ML Insights to run tests on selected model metrics, push the Insights Test Results to OCI Monitoring, and get notified when a threshold set for a particular test has been breached.

Sample Configuration File

The application configuration file below specifies a post-processor, OCIMonitoringApplicationPostProcessor, which pushes the Test Results to the OCI Monitoring service.

ml-monitoring-config.json

```json
{
  "monitor_id": "<monitor_id>",
  "storage_details": {
    "storage_type": "OciObjectStorage",
    "params": {
      "namespace": "<namespace>",
      "bucket_name": "<bucket_name>",
      "object_prefix": "<prefix>"
    }
  },
  "input_schema": {
    "Age": {
      "data_type": "integer",
      "variable_type": "continuous",
      "column_type": "input"
    },
    "EnvironmentSatisfaction": {
      "data_type": "integer",
      "variable_type": "continuous",
      "column_type": "input"
    }
  },
  "baseline_reader": {
    "type": "CSVDaskDataReader",
    "params": {
      "file_path": "oci://<path>"
    }
  },
  "prediction_reader": {
    "type": "CSVDaskDataReader",
    "params": {
      "data_source": {
        "type": "ObjectStorageFileSearchDataSource",
        "params": {
          "file_path": ["oci://<path>"],
          "filter_arg": [
            {
              "partition_based_date_range": {
                "start": "2023-06-26",
                "end": "2023-06-27",
                "data_format": ".*\\d{4}-\\d{2}-\\d{2}.*"
              }
            }
          ]
        }
      }
    }
  },
  "dataset_metrics": [
    {
      "type": "RowCount"
    }
  ],
  "feature_metrics": {
    "Age": [
      {
        "type": "Min"
      },
      {
        "type": "Max"
      }
    ],
    "EnvironmentSatisfaction": [
      {
        "type": "Mode"
      },
      {
        "type": "Count"
      }
    ]
  },
  "transformers": [
    {
      "type": "ConditionalFeatureTransformer",
      "params": {
        "conditional_features": [
          {
            "feature_name": "Young",
            "data_type": "integer",
            "variable_type": "ordinal",
            "expression": "df.Age < 30"
          }
        ]
      }
    }
  ],
  "post_processors": [
    {
      "type": "SaveMetricOutputAsJsonPostProcessor",
      "params": {
        "file_name": "<file_name>",
        "file_location_expression": "<expression>",
        "date_range": {
          "start": "2023-08-01",
          "end": "2023-08-05"
        },
        "can_override_profile_json": false,
        "namespace": "<namespace>",
        "bucket_name": "<bucket_name>"
      }
    },
    {
      "type": "OCIMonitoringApplicationPostProcessor",
      "params": {
        "compartment_id": "<compartment_id>",
        "namespace": "<metric_namespace>",
        "dimensions": {
          "monitor_id": "<monitor_id>"
        }
      }
    }
  ],
  "tags": {
    "tag": "value"
  },
  "test_config": {
    "tags": {
      "key_1": "these tags are sent in test results"
    },
    "feature_metric_tests": [
      {
        "feature_name": "Age",
        "tests": [
          {
            "test_name": "TestGreaterThan",
            "metric_key": "Min",
            "threshold_value": 17
          },
          {
            "test_name": "TestIsComplete"
          }
        ]
      }
    ],
    "dataset_metric_tests": [
      {
        "test_name": "TestGreaterThan",
        "metric_key": "RowCount",
        "threshold_value": 40,
        "tags": {
          "subtype": "falls-xgb"
        }
      }
    ]
  }
}
```
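Each configured test name becomes a metric name in OCI Monitoring, so it can help to check a configuration before running it. The sketch below uses only Python's standard `json` module for two checks. First, it confirms that an `OCIMonitoringApplicationPostProcessor` entry is present. Second, it lists the test names you should expect to find in Metrics Explorer. `monitoring_params` and `expected_metric_names` are hypothetical helpers, and the inline configuration is a trimmed version of the sample configuration with placeholder values.

```python
import json
from typing import Dict, Set

CONFIG_TEXT = """
{
  "post_processors": [
    {"type": "OCIMonitoringApplicationPostProcessor",
     "params": {"compartment_id": "<compartment_id>",
                "namespace": "<metric_namespace>",
                "dimensions": {"monitor_id": "<monitor_id>"}}}
  ],
  "test_config": {
    "feature_metric_tests": [
      {"feature_name": "Age",
       "tests": [{"test_name": "TestGreaterThan", "metric_key": "Min", "threshold_value": 17},
                 {"test_name": "TestIsComplete"}]}
    ],
    "dataset_metric_tests": [
      {"test_name": "TestGreaterThan", "metric_key": "RowCount", "threshold_value": 40}
    ]
  }
}
"""


def monitoring_params(config: Dict) -> Dict:
    """Return the params of the OCI Monitoring post-processor, or raise if it is missing."""
    for processor in config.get("post_processors", []):
        if processor.get("type") == "OCIMonitoringApplicationPostProcessor":
            return processor["params"]
    raise ValueError("OCIMonitoringApplicationPostProcessor is not configured")


def expected_metric_names(config: Dict) -> Set[str]:
    """Collect the test names that should appear as metric names in OCI Monitoring."""
    test_config = config.get("test_config", {})
    names = {t["test_name"] for t in test_config.get("dataset_metric_tests", [])}
    for feature in test_config.get("feature_metric_tests", []):
        names.update(t["test_name"] for t in feature.get("tests", []))
    return names


config = json.loads(CONFIG_TEXT)
print(monitoring_params(config)["dimensions"])  # {'monitor_id': '<monitor_id>'}
print(sorted(expected_metric_names(config)))   # ['TestGreaterThan', 'TestIsComplete']
```

The same test name can appear under several features and under dataset-level tests, so the names are collected into a set. Dimensions are what separate those results once they reach OCI Monitoring.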

Configure OCI Monitoring Service in OCI Console

Each configured Insights test produces a result of PASS (0) or FAIL (1), and these values are pushed to OCI Monitoring.

Under the Observability & Management section in the OCI Console sidebar menu, select Metrics Explorer under the Monitoring subsection.

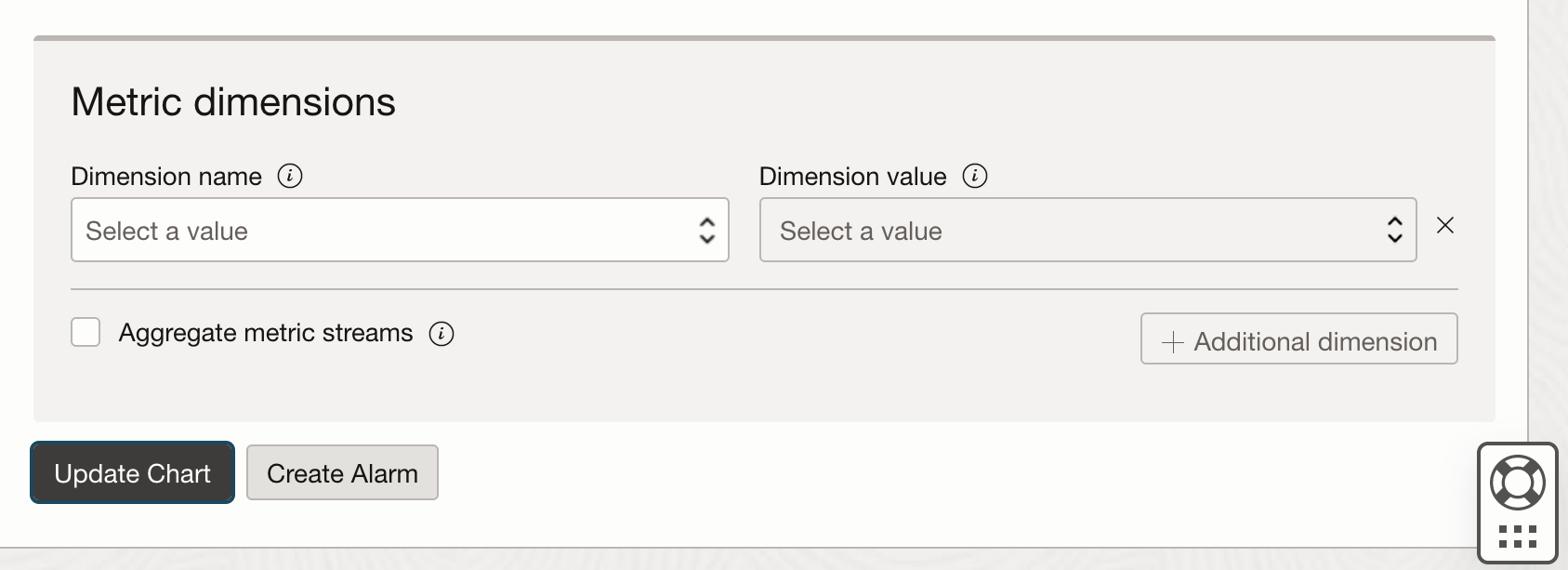

To visualize the metrics sent to OCI Monitoring, specify the same parameters that were used to configure the OCIMonitoringApplicationPostProcessor. Configuration details for the OCIMonitoringApplicationPostProcessor are documented here: OCIMonitoringApplicationPostProcessor

- Compartment: The OCID of the compartment containing the resources monitored by the metric (the compartment_id set on the post-processor).
- Metric namespace: The source service or application emitting the metric (the namespace set on the post-processor).
- Metric name: The specific test whose run results are to be visualized (for example, TestGreaterThan or TestIsPositive). This test name must be configured in the test config when running the Insights library.
- Interval/Statistic: These fields can be set according to user preference to visualize run results or to configure alarms.
- Metric dimensions: This field lets the user filter run results by any of the keys specified in the dimensions parameter. The default dimensions passed by the OCIMonitoringApplicationPostProcessor are listed here: OCIMonitoringApplicationPostProcessor

The results corresponding to the above query can be visualized as a data table or graph over a specified duration.
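Behind the form fields, a Metrics Explorer query is expressed in OCI's Monitoring Query Language (MQL), roughly as `MetricName[interval]{dimension = "value"}.statistic()`. Compartment and namespace are chosen separately and are not part of the query text. The sketch below assembles such a query string from the field values described above. `build_mql` is a hypothetical helper, and the `1h` interval and `max` statistic are illustrative choices. Check the MQL reference for the full grammar.

```python
from typing import Dict


def build_mql(metric_name: str, interval: str, statistic: str,
              dimensions: Dict[str, str]) -> str:
    """Assemble an MQL query such as TestGreaterThan[1h]{monitor_id = "x"}.max()."""
    # Dimension filters are comma-separated, sorted for a stable result,
    # and each value is double-quoted.
    filters = ", ".join(f'{key} = "{value}"' for key, value in sorted(dimensions.items()))
    dimension_part = f"{{{filters}}}" if filters else ""
    return f"{metric_name}[{interval}]{dimension_part}.{statistic}()"


query = build_mql("TestGreaterThan", "1h", "max", {"monitor_id": "<monitor_id>"})
print(query)  # TestGreaterThan[1h]{monitor_id = "<monitor_id>"}.max()
```

With PASS encoded as 0 and FAIL as 1, the `max` statistic returns 1 for any interval in which at least one matching test failed. That makes it a convenient basis for an alarm condition such as `... .max() > 0`.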

Configure Alarms using OCI Monitoring

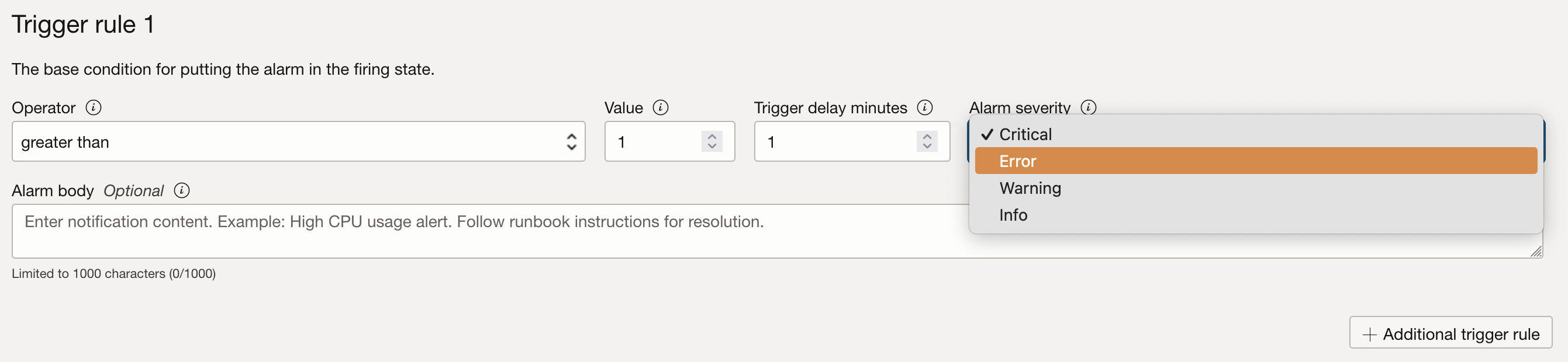

The query specified in Metrics Explorer can be used to configure an alarm through the Create Alarm option, as shown here.

Alarm configuration details are captured comprehensively here: https://docs.oracle.com/en-us/iaas/Content/Monitoring/Tasks/managingalarms.htm

When configuring alarms, specify a trigger rule to cause the alarm to fire, along with a corresponding severity.

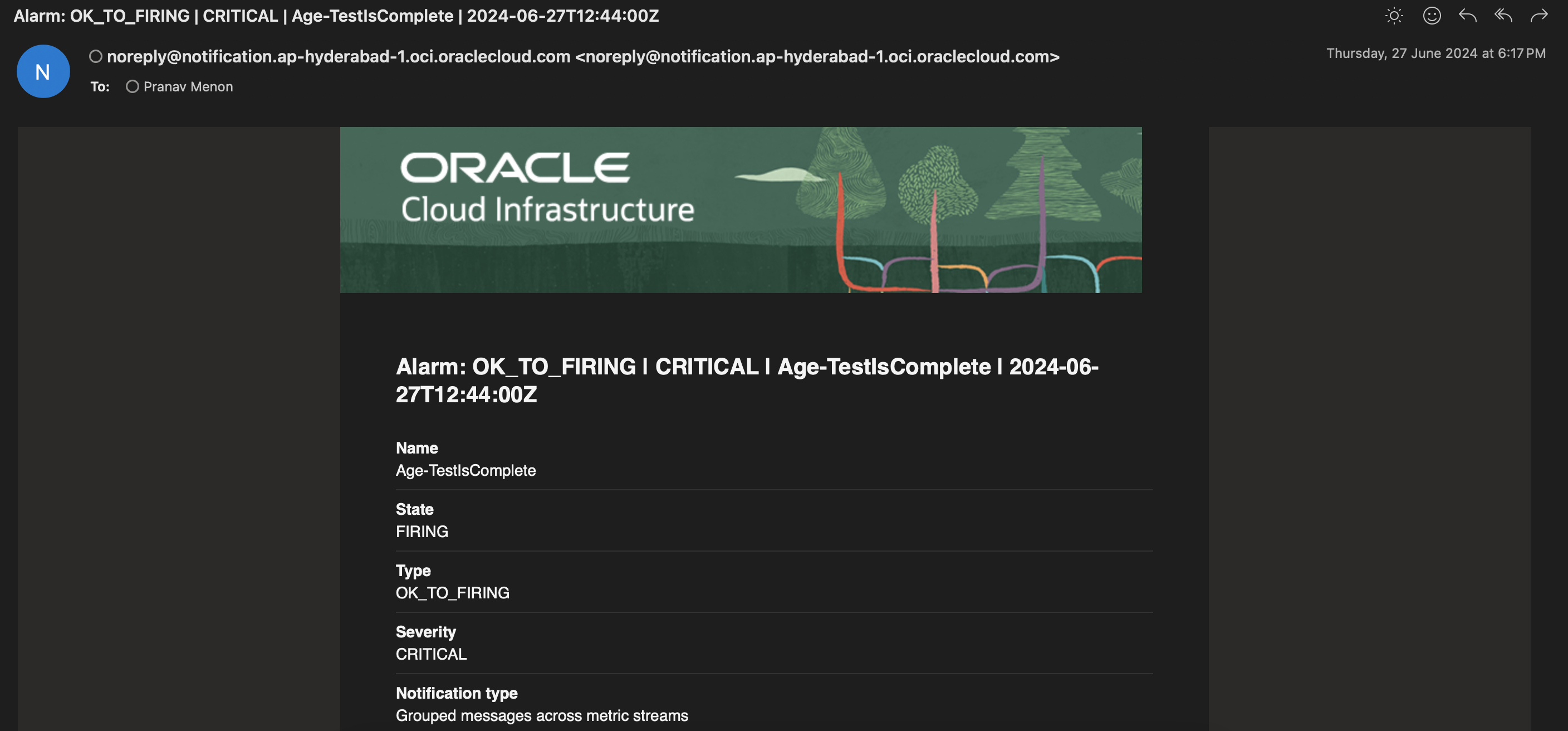

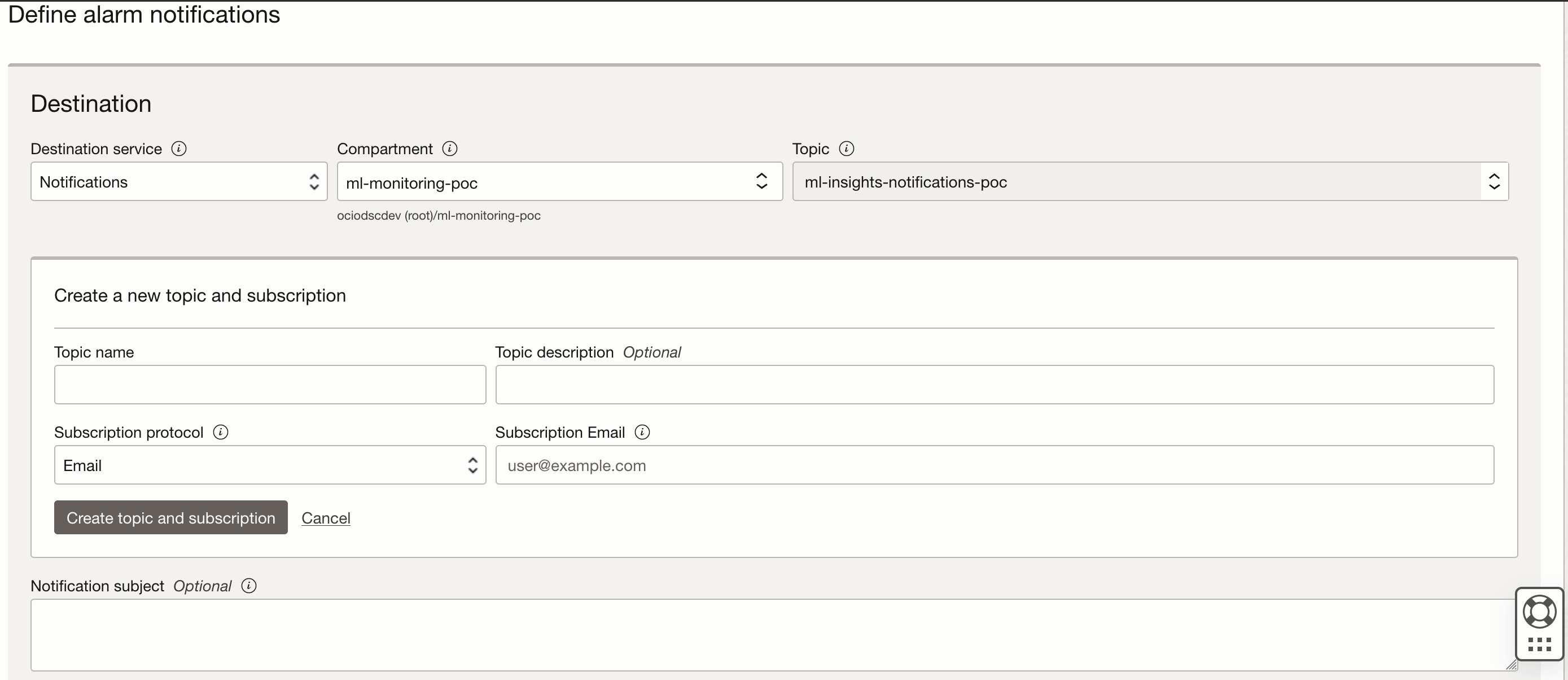
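To reason about what a trigger rule such as `TestGreaterThan[1h]{monitor_id = "<monitor_id>"}.max() > 0` will do with PASS (0) / FAIL (1) data, the sketch below simulates it locally. It groups data points into fixed intervals, takes the maximum per interval, and reports the chosen severity for every interval that meets the condition. This is only an approximation for experimenting with rules, not how OCI Monitoring evaluates alarms internally. `evaluate_trigger` and its arguments are hypothetical.

```python
from typing import List, Tuple


def evaluate_trigger(datapoints: List[Tuple[int, int]], interval_seconds: int,
                     threshold: float, severity: str) -> List[Tuple[int, str]]:
    """Return (interval_start, severity) for every interval whose max exceeds threshold.

    datapoints are (unix_timestamp, value) pairs, with 0 for PASS and 1 for FAIL.
    """
    buckets = {}
    for timestamp, value in datapoints:
        # Align each point to the start of its interval.
        start = timestamp - timestamp % interval_seconds
        # Keep the maximum per interval, mirroring a .max() statistic.
        buckets[start] = max(buckets.get(start, value), value)
    return [(start, severity) for start, peak in sorted(buckets.items()) if peak > threshold]


# Three hourly runs; only the second one failed.
points = [(0, 0), (3600, 1), (7200, 0)]
print(evaluate_trigger(points, 3600, 0, "CRITICAL"))  # [(3600, 'CRITICAL')]
```

Choosing `max` with a threshold of 0 makes the alarm fire on any single failure in an interval. A statistic such as `mean` with a higher threshold would instead fire only when a larger share of runs fail.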

- Alarm notifications are delivered through a Topic (created as described here), which lets users configure a subscription protocol (such as Email, Slack, or SMS) and the format of the notifications they receive.

For example, creating a topic with the Email subscription protocol requires the user to specify the email address at which threshold-breach notifications will be received. After the alarm is created, the user receives an email asking them to confirm the subscription to the topic, and then receives an email notification whenever a breach is observed.