Prediction Run

An ML Monitoring Application run is a Prediction Run when its action type is set to RUN_PREDICTION. Use a prediction run to calculate performance drift with respect to other ML Monitoring Application runs.

A prediction run generates metrics and compares them against a baseline run to measure drift. The baseline run, if available, serves as the reference point or benchmark for the prediction run.

How to use it

The following is a simple sample configuration for running a prediction run with the ML Monitoring Application.

To run a prediction run with the ML Monitoring Application, configure a prediction_reader in the application configuration (see Application Configuration). The prediction reader reads the prediction data required for metric evaluation.

The following sample configuration defines a prediction reader that reads from a file path in OCI Object Storage:

"prediction_reader": {
    "type": "CSVDaskDataReader",
    "params": {
        "file_path": "<OCI_STORAGE_FILE_PATH_FOR_PREDICTION_INPUT_DATA>"
    }
}

For example, with a single data file:

"prediction_reader": {
    "type": "CSVDaskDataReader",
    "params": {
        "file_path": "oci://<bucket_name>@<namespace>/<object_prefix>/dataset.csv"
    }
}

If the data is split across multiple files, use a wildcard in the file path:

"prediction_reader": {
    "type": "CSVDaskDataReader",
    "params": {
        "file_path": "oci://<bucket_name>@<namespace>/<object_prefix>/*.csv"
    }
}
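When the application configuration is generated by a script rather than written by hand, the prediction_reader section is a nested dictionary serialized to JSON. A minimal Python sketch; the helper build_prediction_reader and the bucket, namespace, and prefix values are hypothetical, and the only keys used are the ones in the samples above.

```python
import json


def build_prediction_reader(bucket: str, namespace: str, prefix: str,
                            file_pattern: str = "dataset.csv") -> dict:
    """Build a prediction_reader section for CSVDaskDataReader (hypothetical helper).

    file_pattern may be a single object name ("dataset.csv") or a
    wildcard ("*.csv") that selects every matching CSV under the prefix.
    """
    file_path = f"oci://{bucket}@{namespace}/{prefix}/{file_pattern}"
    return {
        "prediction_reader": {
            "type": "CSVDaskDataReader",
            "params": {"file_path": file_path},
        }
    }


# Hypothetical bucket, namespace and prefix values for illustration only.
single = build_prediction_reader("my-bucket", "my-namespace", "predictions")
multi = build_prediction_reader("my-bucket", "my-namespace", "predictions", "*.csv")
print(json.dumps(multi, indent=2))
```

Passing "*.csv" as file_pattern yields the multi-file form; the default yields the single-file form.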

Configuration required for running a prediction run

CONFIG_LOCATION: The HTTP location of the application configuration file in OCI Object Storage. This parameter is mandatory for starting a prediction run. The OCI resources that run the prediction run must have read access to this HTTP location.

RUNTIME_PARAMETER: Runtime parameters define the state of a single monitor run. To mark a monitor run as a prediction run, pass ACTION_TYPE with the value RUN_PREDICTION as one of the runtime parameters, as shown below.

"CONFIG_LOCATION": "<HTTP_LOCATION_OF_OCI_STORAGE_APPLICATION_CONFIG_FILE_PATH>",
"RUNTIME_PARAMETER": "{\"ACTION_TYPE\":\"RUN_PREDICTION\"}"

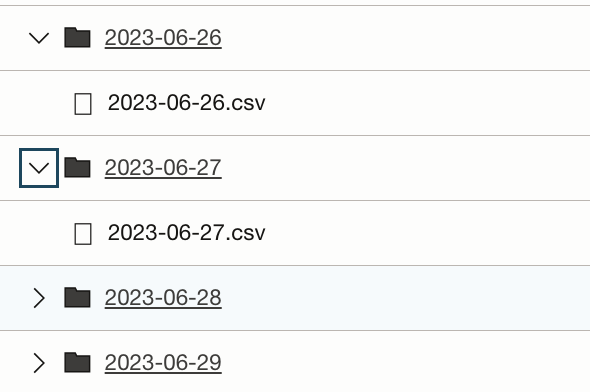
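Because RUNTIME_PARAMETER holds a JSON document inside a string value, the quoting is easy to get wrong when typed by hand. A minimal Python sketch, assuming the two values are passed to the ML Job as environment variables, that serializes the runtime parameter with json.dumps; the job_env dictionary is illustrative and not part of any ADS API.

```python
import json

# Runtime parameters for a prediction run; ACTION_TYPE and RUN_PREDICTION come from this page.
runtime_parameter = {"ACTION_TYPE": "RUN_PREDICTION"}

# Hypothetical environment-variable mapping for the job; the config location is a placeholder.
job_env = {
    "CONFIG_LOCATION": "<HTTP_LOCATION_OF_OCI_STORAGE_APPLICATION_CONFIG_FILE_PATH>",
    # json.dumps emits the inner JSON with correctly placed double quotes.
    "RUNTIME_PARAMETER": json.dumps(runtime_parameter, separators=(",", ":")),
}

print(job_env["RUNTIME_PARAMETER"])  # {"ACTION_TYPE":"RUN_PREDICTION"}
```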

If the data is partitioned by date in a supported date-time format, configure the prediction reader to use ObjectStorageFileSearchDataSource as its data source, as shown below. The partitioned data to evaluate in the prediction run must follow this structure, with the start and end dates specified in the filter_arg parameter.

"prediction_reader": {
    "type": "CSVDaskDataReader",
    "params": {
        "data_source": {
            "type": "ObjectStorageFileSearchDataSource",
            "params": {
                "file_path": [
                    "oci://<bucket_name>@<namespace>/<object_prefix>/dataset.csv"
                ],
                "filter_arg": [
                    {
                        "partition_based_date_range": {
                            "start": "2023-06-26",
                            "end": "2023-06-27",
                            "data_format": ".\\d{4}-\\d{2}-\\d{2}."
                        }
                    }
                ]
            }
        }
    }
},
"post_processors": [
    {
        "type": "SaveMetricOutputAsJsonPostProcessor",
        "params": {
            "file_name": "profile.json",
            "test_results_file_name": "test_result.json",
            "file_location_expression": "profile-$start_$end.json",
            "date_range": {
                "start": "2023-08-01",
                "end": "2023-08-05"
            },
            "can_overwrite_profile_json": false,
            "can_overwrite_test_results_json": false,
            "namespace": "<NAMESPACE>",
            "bucket_name": "<BUCKET_NAME>"
        }
    },
    {
        "type": "OCIMonitoringApplicationPostProcessor",
        "params": {
            "compartment_id": "<COMPARTMENT_ID>",
            "namespace": "<NAMESPACE>",
            "date_range": {
                "start": "2023-08-01",
                "end": "2023-08-05"
            },
            "dimensions": {
                "key1": "value1",
                "key2": "value2"
            }
        }
    }
]
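To make the partition filter concrete, the following Python sketch selects object paths whose embedded YYYY-MM-DD date falls between start and end. It is an illustration under stated assumptions, not the ObjectStorageFileSearchDataSource implementation: the function name filter_partitions, the sample paths, and the inclusive treatment of the end date are all assumptions.

```python
import re
from datetime import date


def filter_partitions(paths, start, end, pattern=r"\d{4}-\d{2}-\d{2}"):
    """Keep paths whose embedded YYYY-MM-DD date lies in [start, end].

    Hypothetical helper: assumes ISO dates in the object path and an
    inclusive date range on both ends.
    """
    start_d, end_d = date.fromisoformat(start), date.fromisoformat(end)
    selected = []
    for path in paths:
        match = re.search(pattern, path)
        if match is None:
            continue  # no date partition in this path, so it cannot be in range
        partition_date = date.fromisoformat(match.group(0))
        if start_d <= partition_date <= end_d:
            selected.append(path)
    return selected


# Hypothetical object paths partitioned by date.
partition_paths = [
    "oci://bucket@ns/preds/2023-06-25/dataset.csv",
    "oci://bucket@ns/preds/2023-06-26/dataset.csv",
    "oci://bucket@ns/preds/2023-06-27/dataset.csv",
    "oci://bucket@ns/preds/2023-06-28/dataset.csv",
]
print(filter_partitions(partition_paths, "2023-06-26", "2023-06-27"))
```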

The start and end dates in the prediction_reader (for ObjectStorageFileSearchDataSource only) and in the SaveMetricOutputAsJsonPostProcessor and OCIMonitoringApplicationPostProcessor post processors, if present in the application configuration, can be overridden with the DATE_RANGE runtime parameter, as shown below. This avoids uploading a new application configuration for each date range.

"CONFIG_LOCATION": "<HTTP_LOCATION_OF_OCI_STORAGE_APPLICATION_CONFIG_FILE_PATH>",
"RUNTIME_PARAMETER": "{\"ACTION_TYPE\":\"RUN_PREDICTION\", \"DATE_RANGE\":{\"start\":\"2023-06-28\", \"end\":\"2023-06-29\"}}"

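The DATE_RANGE override described above can be sketched in a few lines. The following Python function is hypothetical and is not the ML Monitoring Application's code: it parses a RUNTIME_PARAMETER string and, if DATE_RANGE is present, copies its start and end into the partition_based_date_range filters of an ObjectStorageFileSearchDataSource and into the date_range of the two post processors named on this page. It assumes that only date ranges already present in the configuration are overridden.

```python
import copy
import json

# Post processors whose date_range is overridden by DATE_RANGE, per this page.
OVERRIDABLE_POST_PROCESSORS = {
    "SaveMetricOutputAsJsonPostProcessor",
    "OCIMonitoringApplicationPostProcessor",
}


def apply_date_range(config: dict, runtime_parameter: str) -> dict:
    """Return a copy of config with DATE_RANGE start/end applied (hypothetical helper)."""
    date_range = json.loads(runtime_parameter).get("DATE_RANGE")
    result = copy.deepcopy(config)  # never mutate the caller's configuration
    if not date_range:
        return result

    # Prediction reader: only an ObjectStorageFileSearchDataSource is affected.
    reader_params = result.get("prediction_reader", {}).get("params", {})
    source = reader_params.get("data_source", {})
    if source.get("type") == "ObjectStorageFileSearchDataSource":
        for arg in source.get("params", {}).get("filter_arg", []):
            if "partition_based_date_range" in arg:
                # update() keeps other keys, such as the date pattern.
                arg["partition_based_date_range"].update(date_range)

    # Post processors: override start/end only where a date_range already exists (assumption).
    for processor in result.get("post_processors", []):
        params = processor.get("params", {})
        if processor.get("type") in OVERRIDABLE_POST_PROCESSORS and "date_range" in params:
            params["date_range"].update(date_range)
    return result


# Usage with a trimmed-down configuration based on the sample above.
config = {
    "prediction_reader": {
        "type": "CSVDaskDataReader",
        "params": {
            "data_source": {
                "type": "ObjectStorageFileSearchDataSource",
                "params": {
                    "file_path": ["oci://<bucket_name>@<namespace>/<object_prefix>/dataset.csv"],
                    "filter_arg": [
                        {"partition_based_date_range": {"start": "2023-06-26", "end": "2023-06-27"}}
                    ],
                },
            }
        },
    },
    "post_processors": [
        {
            "type": "SaveMetricOutputAsJsonPostProcessor",
            "params": {"date_range": {"start": "2023-08-01", "end": "2023-08-05"}},
        }
    ],
}
runtime_parameter = '{"ACTION_TYPE":"RUN_PREDICTION", "DATE_RANGE":{"start":"2023-06-28", "end":"2023-06-29"}}'
updated = apply_date_range(config, runtime_parameter)
print(updated["post_processors"][0]["params"]["date_range"])
```

Working on a deep copy keeps the uploaded configuration unchanged, which mirrors the point of the runtime override: the stored file stays as-is while a single run uses different dates.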
Baseline Profile Reference

If the application configuration contains metrics that need a baseline reference, such as drift metrics that measure a model's performance relative to baseline data, the ML Monitoring Application uses the latest baseline profile generated by a baseline run, if one exists.

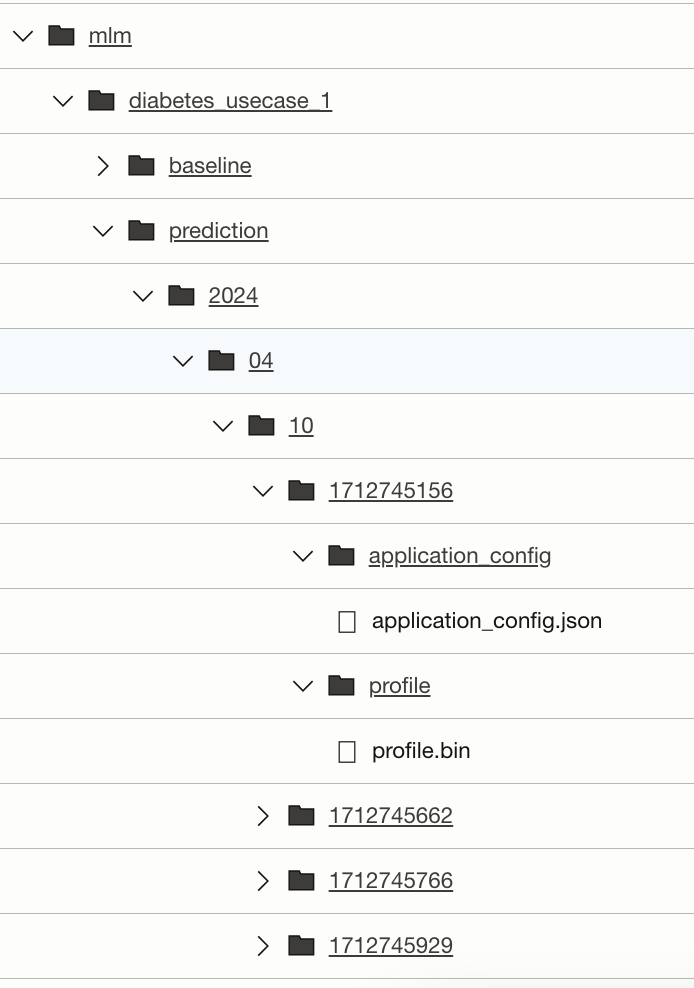

The latest baseline profile is identified by its monitor_run_id: in the ML Monitoring Application, the monitor run ID is the epoch time of the underlying resources used during the monitor run, so the most recent baseline run has the largest ID. A sample baseline profile location is shown below.

<location>/MLM/<monitorId>/baseline/<Latest_Year>/<Latest_Month>/<Latest_Day>/<latest_epoch_time>/profile/profile.bin

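Because monitor_run_id is an epoch time, picking the latest baseline profile amounts to taking the largest run ID among the baseline profile paths. A minimal Python sketch of that selection; the helper latest_baseline_profile, the regular expression, and the sample paths (including the assumption that IDs are epoch seconds) are hypothetical.

```python
import re

# Matches .../baseline/<Year>/<Month>/<Day>/<monitor_run_id>/profile/profile.bin
BASELINE_PROFILE = re.compile(r"/baseline/\d+/\d+/\d+/(\d+)/profile/profile\.bin$")


def latest_baseline_profile(paths):
    """Return the baseline profile path with the largest monitor_run_id, or None."""
    candidates = []
    for path in paths:
        match = BASELINE_PROFILE.search(path)
        if match:
            candidates.append((int(match.group(1)), path))
    if not candidates:
        return None  # no baseline run has produced a profile yet
    return max(candidates)[1]


# Hypothetical object paths; only the first two are baseline profiles.
object_paths = [
    "oci://bucket@ns/mlm/MLM/monitor-1/baseline/2023/6/20/1687219200/profile/profile.bin",
    "oci://bucket@ns/mlm/MLM/monitor-1/baseline/2023/6/25/1687651200/profile/profile.bin",
    "oci://bucket@ns/mlm/MLM/monitor-1/prediction/2023/6/26/1687737600/profile/profile.bin",
]
print(latest_baseline_profile(object_paths))
```

The IDs are compared as integers rather than strings so the result does not depend on how many digits each ID has.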
Output of Prediction Run

When the action type is RUN_PREDICTION, the application does the following:

Internal Application State

The profile and application configuration of each monitor run are stored so that subsequent runs can use them for comparison and for metric calculations such as drift metrics.

The profile stores a summary of the data, including the profile header and information about features and metrics. It is written to the location specified in the storage_details section of the application configuration file, under the following path:

<location>/MLM/<monitorId>/prediction/<Year>/<Month>/<Day>/<monitor_run_id>/profile/profile.bin

The application configuration passed by the user is saved to the location specified in the storage_details section of the application configuration, under the following path:

<location>/MLM/<monitorId>/prediction/<Year>/<Month>/<Day>/<monitor_run_id>/application_config/application_config.json

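The output paths above can be derived from the monitor run ID. A minimal Python sketch, assuming (this page does not say so) that monitor_run_id is an epoch time in seconds, that the <Year>/<Month>/<Day> folders come from that timestamp in UTC, and that the folder names are not zero-padded; the function prediction_output_paths and the sample values are hypothetical.

```python
from datetime import datetime, timezone


def prediction_output_paths(location: str, monitor_id: str, monitor_run_id: int) -> dict:
    """Build the profile and application-config paths for a prediction run (hypothetical)."""
    # Assumption: the date folders are derived from the epoch-seconds run ID in UTC.
    run_time = datetime.fromtimestamp(monitor_run_id, tz=timezone.utc)
    base = (f"{location}/MLM/{monitor_id}/prediction/"
            f"{run_time.year}/{run_time.month}/{run_time.day}/{monitor_run_id}")
    return {
        "profile": f"{base}/profile/profile.bin",
        "application_config": f"{base}/application_config/application_config.json",
    }


out = prediction_output_paths("oci://bucket@namespace/mlm", "monitor-1", 1687737600)
print(out["profile"])
# oci://bucket@namespace/mlm/MLM/monitor-1/prediction/2023/6/26/1687737600/profile/profile.bin
```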
User Configured Output

If post processors are provided in the configuration, the application runs them and, where a post processor requires it, stores its output in the location specified in that post processor's section.

Logs are written to the log group specified when setting up the ML Job.

Note

Here, monitor_run_id is the epoch timestamp of the run.