Digital Assistant Insights

For each digital assistant, you can view Insights reports, which are developer-oriented analytics on usage patterns.

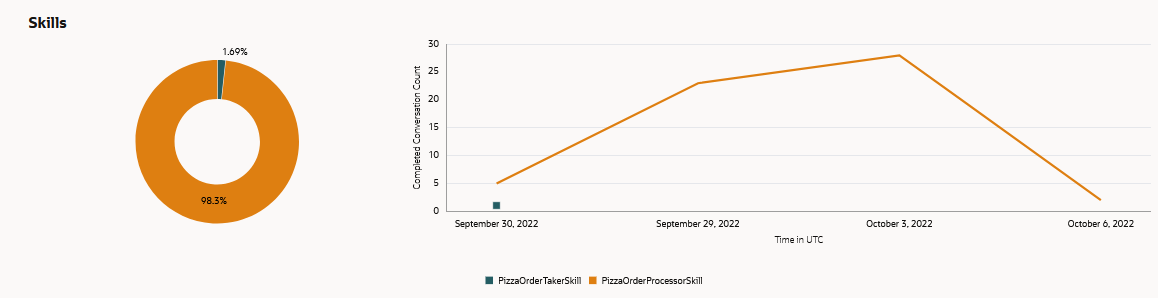

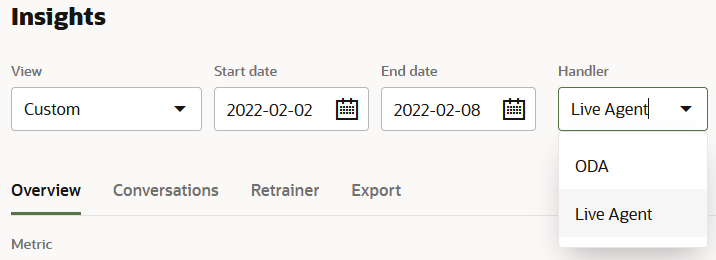

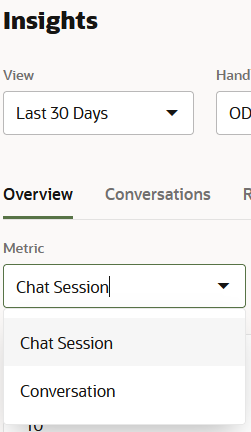

You can track metrics at both the user session level and the conversation level. A user chat session begins when a user contacts a digital assistant and ends either when the user closes the chat window or when the chat session expires, as specified by the channel configuration. You can toggle between conversation and chat session reporting using the Metric filter.

Description of the illustration da-conversation-sessions-toggle.png

Insights tracks chat sessions at the source. In this case, it's the digital assistant that initiated a chat session, not the digital assistant's constituent skills. Because users connect directly to the digital assistant, the skills have no bearing on the chat session tracking.

Any in-progress chat sessions will be expired after the release of 21.12.

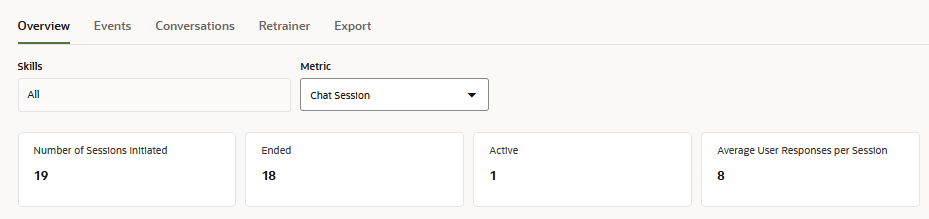

Chat Session Metrics for Digital Assistants

- Number of Sessions Initiated – The total number of chat sessions initiated by the digital assistant.

- Ended – The number of chat sessions that were ended explicitly by users closing the chat window, or that have expired after the period specified by the channel configuration.

Note

Any in-progress sessions will be expired after the release of 21.12. Sessions initiated through the skill tester are expired after 24 hours of inactivity. Currently, the functionality for ending a session by closing the chat window is supported by the Oracle Digital Assistant Native Client SDK for Web.

- Active – The chat sessions that remain active because the chat window remains open or because they haven't yet timed out.

- Average User Responses per Session – The average number of responses from users across the total number of sessions initiated by the digital assistant. Each time a user asks a question, replies to the digital assistant, or otherwise interacts with it, that action is counted as a response.

- Session Trends – A comparison of the active, ended, and initiated sessions. These metrics are displayed in proportion to one another on the donut chart, which contrasts the totals for ended and active sessions against the total number of initiated sessions. The trend line chart plots the counts for these metrics by date.
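The arithmetic behind these session metrics can be sketched in a few lines of Python. This is only an illustration: the `ChatSession` record and its fields are hypothetical and do not reflect an actual Insights data schema. It assumes that every initiated session is either ended or still active.

```python
from dataclasses import dataclass


@dataclass
class ChatSession:
    """Hypothetical record for one chat session (not an Insights schema)."""
    ended: bool          # True if the user closed the window or the session expired
    user_responses: int  # questions, replies, and other user interactions


def session_metrics(sessions):
    """Return initiated/ended/active counts, their shares, and average responses."""
    initiated = len(sessions)
    ended = sum(1 for s in sessions if s.ended)
    active = initiated - ended  # assumption: not ended means still active
    total_responses = sum(s.user_responses for s in sessions)
    return {
        "initiated": initiated,
        "ended": ended,
        "active": active,
        # Shares of the initiated total, as contrasted on the donut chart.
        "ended_share": ended / initiated if initiated else 0.0,
        "active_share": active / initiated if initiated else 0.0,
        "avg_user_responses": total_responses / initiated if initiated else 0.0,
    }


sample = [
    ChatSession(ended=True, user_responses=4),
    ChatSession(ended=True, user_responses=2),
    ChatSession(ended=False, user_responses=3),
    ChatSession(ended=False, user_responses=7),
]
metrics = session_metrics(sample)
print(metrics)
```

With the four sample sessions, two are ended and two are active, and the 16 user responses average out to 4 per session.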

Conversation Metrics for Digital Assistants

With digital assistant Insights reports, you can find out:

- The number of conversations initiated from a digital assistant over a given time period and their rate of completion.

Note

Conversations are not the same as metered requests. To find out more about metering, refer to Oracle PaaS and IaaS Universal Credits Service Descriptions.

- The popularity of the skills registered to a digital assistant as determined by the traffic to each skill.

These reports display data when Enable Insights, located in the General page of Settings, is switched on. To access the reports, open a digital assistant and then select the Insights icon in its left navbar.

You can also view detailed reports on individual skills that show things such as how often each intent is called (and which percentage of those calls have completed) and the paths that users take through the skill. See Insights.

Report Types

- Overview - Use this dashboard to quickly find out the total number of voice and text conversations by channel and by time period. The report's metrics break this total down by the number of complete, incomplete, and in-progress conversations. In addition, this report tells you how the skill completed, or failed to complete, conversations by ranking the usage of the skill's transactional and answer intents in bar charts and word clouds.

- Conversations - Displays the transcripts for the conversations that occurred during a session. You can read a plain text version of this conversation and also review it within the context of the digital assistant's skill routing and intent resolution.

- Events - Displays metrics and graphs for the external events relayed to the skills within the digital assistant and the outbound events sent to external sources.

- Retrainer - This is the counterpart to the skill-level Retrainer, where you improve the intent resolution for the registered skills using the live data that flows through the digital assistant.

- Export - Lets you download a CSV file of the Insights data collected by Oracle Digital Assistant. You can create a custom Insights report from the CSV.

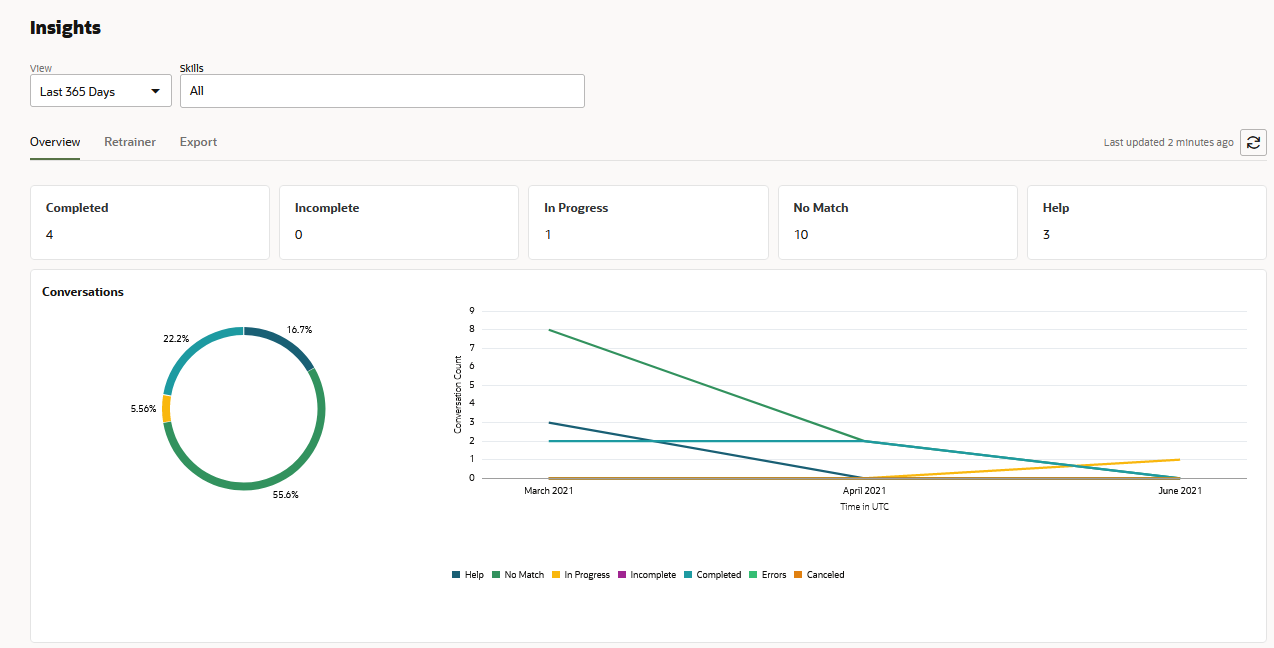

Review the Overview Metrics and Graphs

- Completed – The number of conversations that were routed through the digital assistant and were then completed by the individual skills. Conversations that conclude with an End Flow state or at a state where End flow (implicit) is selected as the transition are considered complete. In YAML-authored skills, conversations are counted as complete when the traversal through the dialog flow ends with a `return` transition or at a state with the `insightsEndConversation` property.

Note

This property and the `return` transition are not available in Visual Flow Designer.

- Incomplete – Conversations that users didn't complete, because they abandoned the skill, or couldn't complete because of system-level errors, timeouts, or flaws in the skill's design.

- In Progress – The number of skill conversations (that were initiated by the digital assistant) that are still ongoing. Use this metric to track multi-turn conversations. An in-progress conversation becomes an incomplete conversation after a session expires.

- No Match - The number of times that the digital assistant could not match any of its registered skills to a user message.

- Canceled - The number of times that users exited a skill by entering "cancel".

- Help - The number of times the Help system intent was invoked.

When Insights has been disabled for a digital assistant, the Completed count continues to increase if Insights has been enabled for any of the member skills. Despite this, no data is logged for the digital assistant-specific Help and No Match metrics until you switch on Enable Insights.

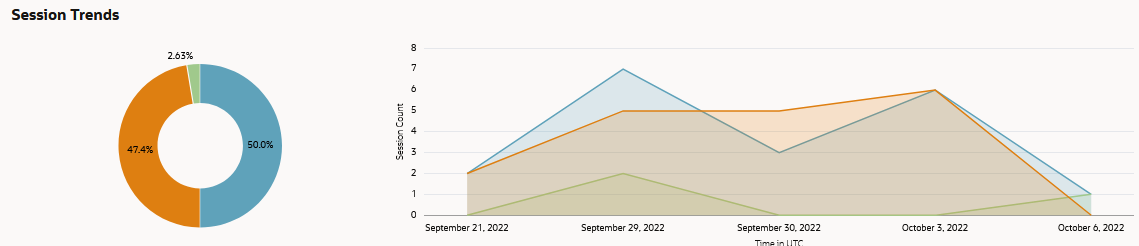

Description of the illustration da-insights-overview.png

- Languages: Charts the number of conversations for each skill by supported language.

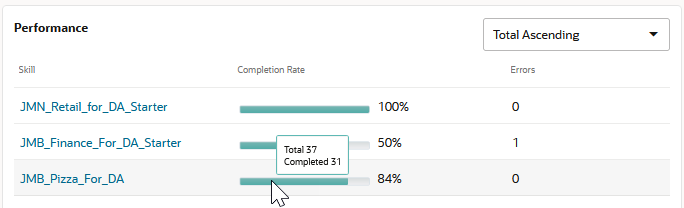

- Performance: Shows the number of conversations by skill.

The Trend view provides a graph of completed conversations over the selected time period.

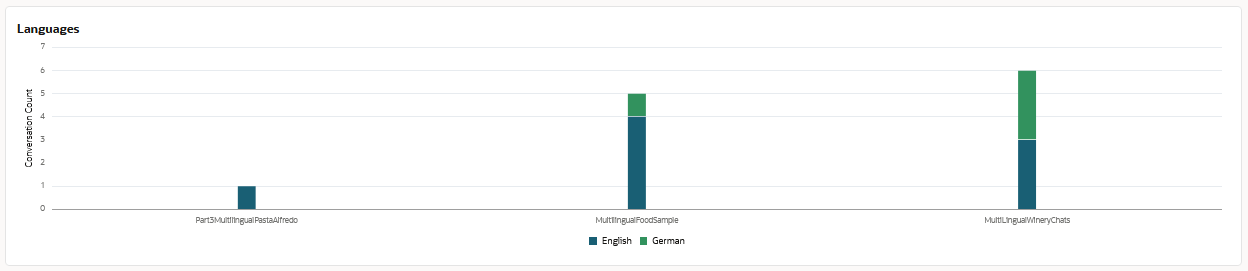

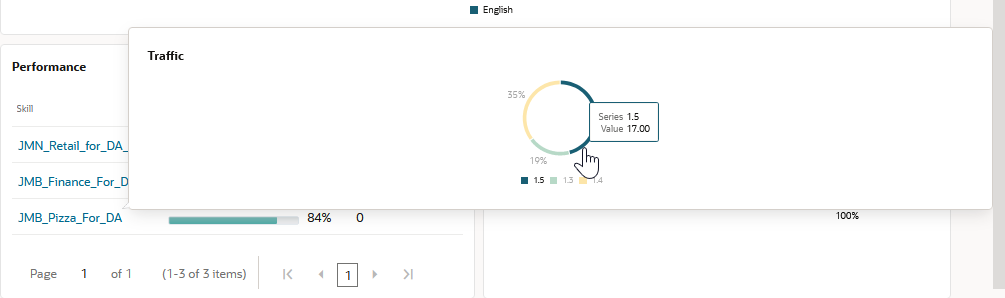

- Skills - The Summary view shows the number of conversations handled by the skill for a given period. By hovering over the progress bar, you can find out the number of completed conversations out of the total.

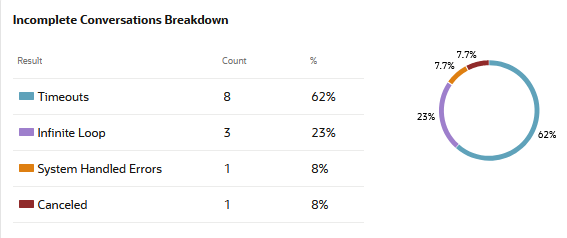

Description of the illustration da-insights-skills-summary.png

If a skill has been versioned during the selected time period, you can find out the by-version distribution of conversations using the Traffic graph. Clicking a skill opens this graph, which illustrates the volume of conversations handled by each version of the skill in terms of the total conversations handled by the skill for the selected period. Each arc on the Traffic graph represents a version of the skill, with the length and accompanying percentage indicating the volume of conversations that it handled. The hover text for each of these arcs describes the percentage in terms of the conversation count. To break this number down, say, to find out what this count means in terms of the intents invoked for a particular version of the skill, click the arc to drill down to the skill-level Insights.

If there are any incomplete conversations during the selected period, the total number is broken down by the following error categories:

- Timeouts – Timeouts are triggered when an in-progress conversation is idle for more than an hour, causing the session to expire.

- System-Handled Errors – System-handled errors are handled by the system, not the skill. These errors occur when the dialog flow definition is not equipped with error handling.

- Infinite Loop – Infinite loops can occur because of flaws in the dialog flow definition, such as incorrectly defined transitions.

- Canceled - The number of times that users exited a skill by explicitly canceling the conversation.

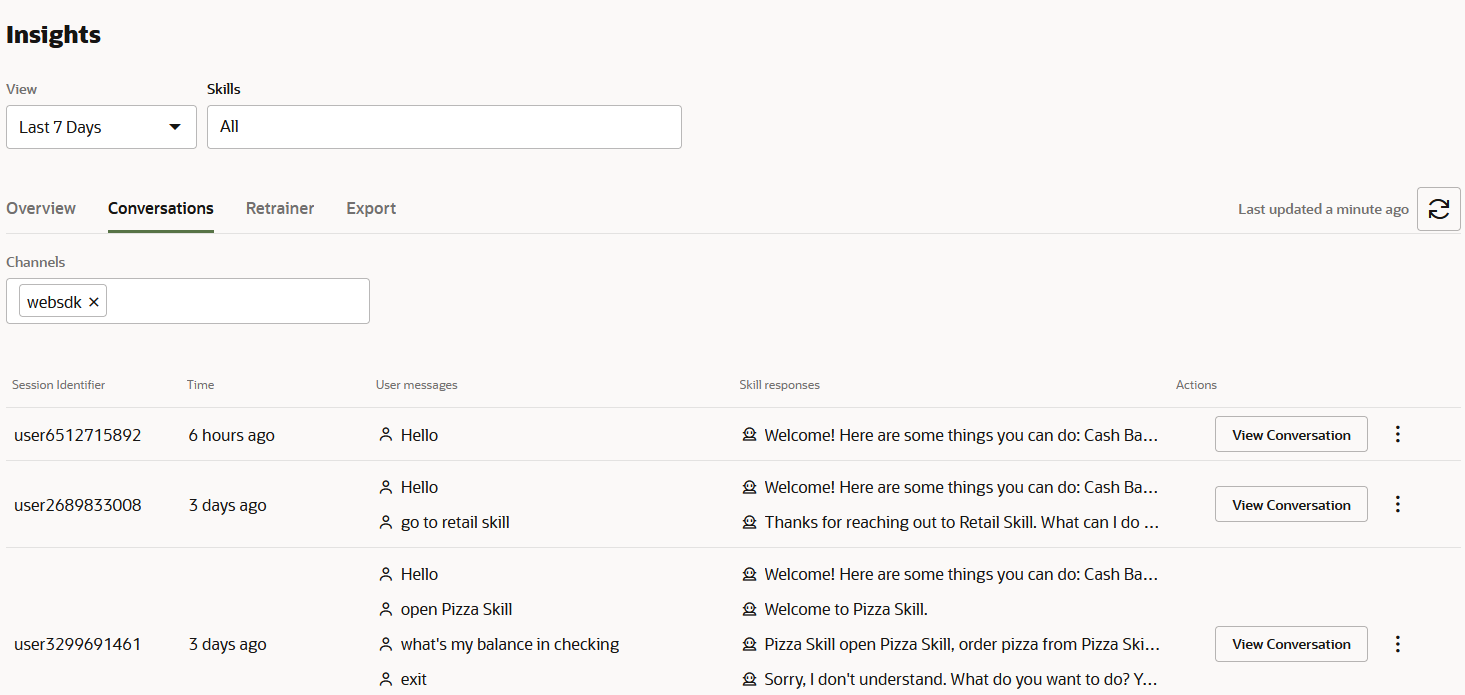

View the Conversations Report

Instead of filtering an exported spreadsheet, you can use the Conversation report to track the digital assistant's routing of the chat to its various skills and also find out which intents were invoked along the way.

This report, which you can filter by both channel and skill, lists the conversations in chronological order by Session ID (the ID of the user's session on a channel) and presents a transcript of the conversation in its User Messages and Skill Responses columns. The Session ID is created when a client connects to a channel and contains all of the data from the skills that participated in a conversation. This ID expires if there's no activity for 24 hours, but may never expire if the client continues with its conversations.

Description of the illustration da-conversations-report.png

The View and Skills filters that you can apply to the Conversations report do not alter the contents of the conversation transcripts displayed in the User Messages and Skill Responses columns or in the View Conversations mode.

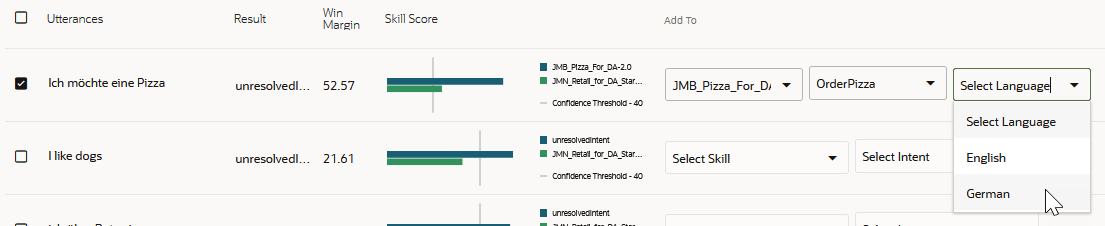

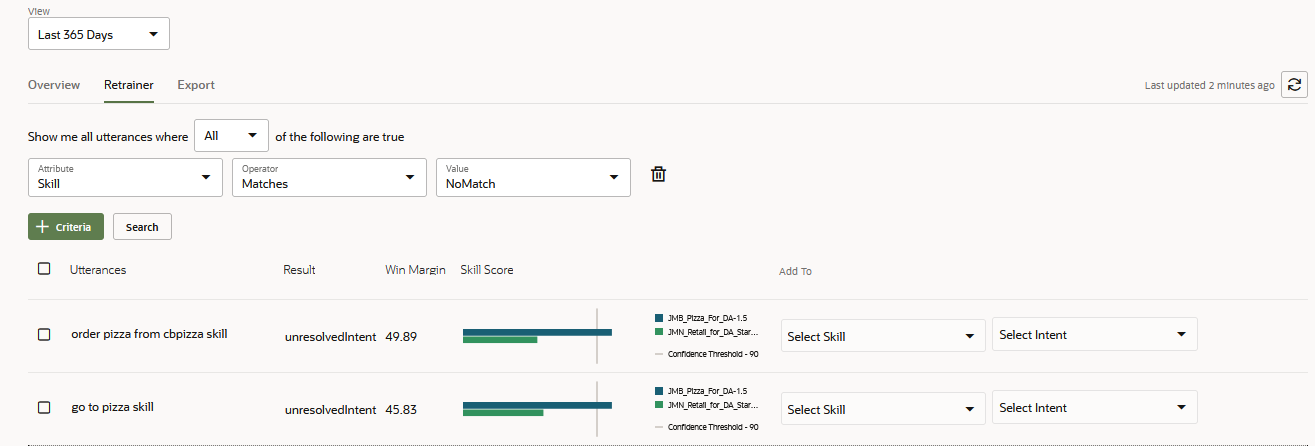

Apply the ODA Retrainer

You can improve the intent resolution for the registered skills by updating their training data with customer input. Some of this input may not have been resolved to any skill, or it may have been routed to the wrong skill. The Retrainer helps you evaluate this user input and add it to a skill if you consider it a useful addition to the training data.

Description of the illustration da-retrainer.png

By default, Retrainer applies the NoMatch filter so that it returns all of the user messages that could not be matched to any skill registered to the digital assistant. For each of these returned phrases, the report presents the top two highest-ranking skills, the Win Margin that separates them and, through a horizontal bar chart, their contrasting confidence scores.
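As a rough illustration of the ranking described above, the following Python sketch picks the two highest-scoring skills for a phrase and computes the gap between them. It assumes that the Win Margin is simply the difference between the top two confidence scores. The skill names and scores are made up, and the function is not part of any Oracle Digital Assistant API.

```python
def top_two_with_margin(scores):
    """Return (best, runner_up, win_margin) from a {skill_name: confidence} mapping.

    Assumption: the win margin is the top score minus the second-highest score.
    """
    if len(scores) < 2:
        raise ValueError("need at least two skills to compute a margin")
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    (best, best_score), (second, second_score) = ranked[0], ranked[1]
    # Round to keep floating-point noise out of the reported margin.
    return best, second, round(best_score - second_score, 4)


# Hypothetical confidence scores for one unresolved user message.
example = {"PizzaSkill": 0.62, "BankingSkill": 0.55, "HRSkill": 0.10}
best, second, margin = top_two_with_margin(example)
print(best, second, margin)
```

A small margin, as in this sample, suggests that the phrase is ambiguous between the two skills and may be a good candidate for review.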

- Filter the registered skills. For example, you can filter for all of the phrases that matched to a particular skill within the digital assistant, or you can apply the NoMatch filter for the phrases that did not match up with any of the skills.

- Select the utterance.

- Select a draft version of the skill for the utterance. If no draft version exists, then create one. If you can't select a skill, it's because it uses native multi-language support. In this case, you can't update the skill from here. You'll have to use the skill-level retrainer instead.

- Select the intent. After you add the phrase as an utterance in the training corpus, you can no longer select it for retraining.

- If your skill supports more than one native language, then you can add the utterance to the language-appropriate training set by choosing from among the languages in the Language menu.

Note

This option is only available for natively supported skills.

- Retrain the skill.

- Republish the skill.

- Update the digital assistant with the latest version of the skill.

PII Anonymization

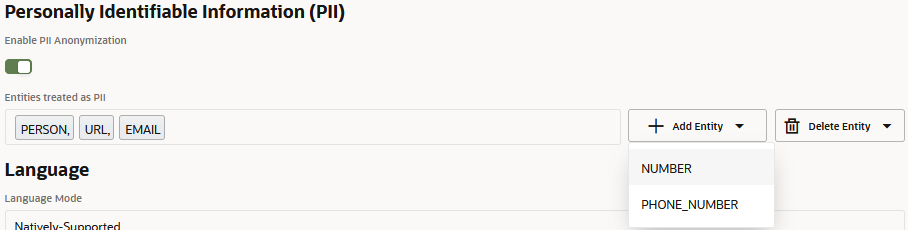

Like the skill-level insights, you can apply anonymization to Personally Identifiable Information (PII) values. At the digital assistant level, anonymization is applied to the conversations handled by the digital assistant. Anonymization enabled at the digital assistant level does not extend to the skills. If anonymization is enabled for a skill, but not for the digital assistant, then only the skill conversations will be anonymized and vice versa. For completely anonymized conversations, you need to apply anonymization to the digital assistant and its skills separately.

- PERSON

- NUMBER

- PHONE NUMBER

- URL

Enable PII Anonymization

- Click Settings > General.

- Switch on Enable PII Anonymization.

- Click Add Entity to select the entity values that you want to anonymize in the Insights reports and the logs.

Note

If you want to discontinue the anonymization for an entity, or if you don't want an anonym to be used at all, then select the entity and click Delete Entity. Once you delete an entity, its actual value appears throughout the Insights reports for subsequent conversations, even if it previously appeared in its anonymized form.

Anonymized values for the selected entities are persisted only after you enable anonymization. They are not applied to prior conversations. Depending on the date range selected for the Insights reports or export files, the entity values might appear in both their actual and anonymized forms. You can apply anonymization to any non-anonymized value when you create an export task. These anonyms apply only to the exported file and are not persisted in the database.

Note

Anonymization is permanent (the export task-applied anonymization notwithstanding). You can't recover the actual values after you enable anonymization.

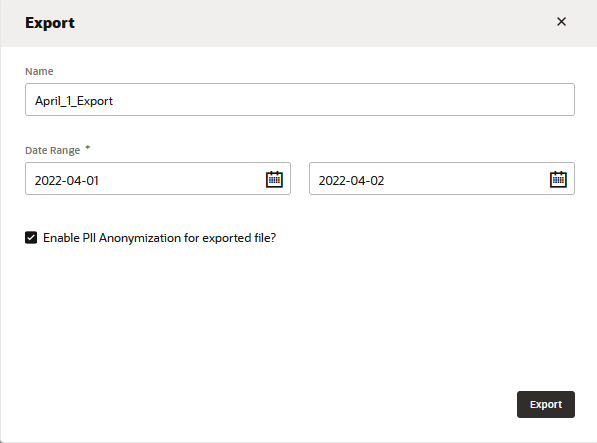

Create an Export Task

If you want another perspective on Insights reporting, then you can create your own reports from exported Insights data. This data is exported in a CSV file. You can write a script to sort the contents of this file.

- Open the Exports page and then click + Export.

- Enter a name for the report and then enter a date range.

- Click Enable PII anonymization for the exported file to replace any non-anonymized values with anonyms in the exported file. These anonyms exist only in the exported file if PII anonymization is not enabled in the digital assistant settings. They are not persisted to the database and they do not appear in the logs or in the Conversations report. This option is enabled by default whenever you set anonymization for the digital assistant.

Note

The PII anonymization that's enabled for the skill or digital assistant settings factors into how PII values get anonymized in the export file and also contributes to the consistency of the anonymization in the export file.

- Click Export.

- When the task succeeds, click Completed to download a ZIP of the CSV.

Note

The CSV file for a digital assistant contains data for the skills that are directly called through the digital assistant. The data for skills called outside of the context of a digital assistant is not included in this file.

Description of the illustration insights-export-dialog.png

The data may be spread across a series of CSVs when the export task returns more than 1,048,000 rows. In such cases, the ZIP file will contain a series of ZIP files, each containing a CSV.

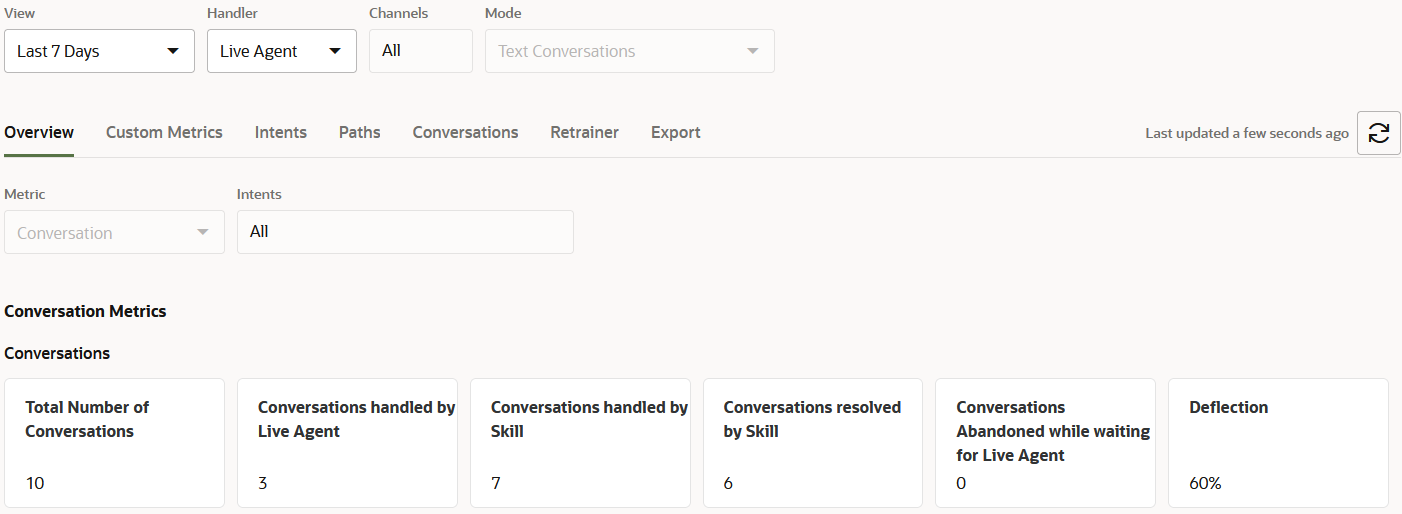

Live Agent Metrics for Digital Assistants

Insights reporting, through its Skill and Live Agent handlers, covers all of the communication between the end user, the skill, and the live agent. This is not the case for DA as Agent conversations, where Insights only covers the conversation up until the chat has been transferred to the live agent. For full reporting on DA as Agent conversations, use Oracle Fusion Service Analytics.

Live Agent Conversation Metrics for Digital Assistants

- Total Number of Conversations – The total number of conversations routed by the digital assistant for the selected time period and the channel. This total includes conversations both with and without live agent requests.

- Conversations Handled by Live Agent – The total number of conversations routed by the digital assistant that included a request for a live agent.

- Conversations Handled by ODA – The total number of conversations (complete or incomplete) that were handled by the digital assistant or its skills because no live agent requests were made.

- Conversations Resolved by ODA – The number of conversations routed by the digital assistant that completed (that is, reached an exit state) with no live agent requests.

- Conversations Abandoned While Waiting for Live Agent - The number of conversations routed by the digital assistant that were never handed off to a live agent, despite having requested one. Conversations can be considered abandoned when users never connect with live agents, possibly because they've left the conversation or were timed out.

- Deflection – The percentage of conversations deflected from the live agent, calculated by dividing the tally of Conversations Resolved by ODA by the tally for the Total Number of Conversations (handled by the digital assistant).
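The Deflection calculation described above is a simple ratio. A minimal Python sketch, with hypothetical function and parameter names and made-up sample counts, could look like this:

```python
def deflection_percentage(resolved_by_oda, total_conversations):
    """Return Conversations Resolved by ODA as a percentage of all conversations."""
    if total_conversations <= 0:
        return 0.0  # avoid dividing by zero when no conversations were recorded
    return 100.0 * resolved_by_oda / total_conversations


# Sample counts: 150 of 200 conversations completed without a live agent request.
deflection = deflection_percentage(resolved_by_oda=150, total_conversations=200)
print(f"{deflection:.1f}%")
```

Returning 0.0 for an empty period is a design choice made for this sketch. A report could just as reasonably show the value as unavailable.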

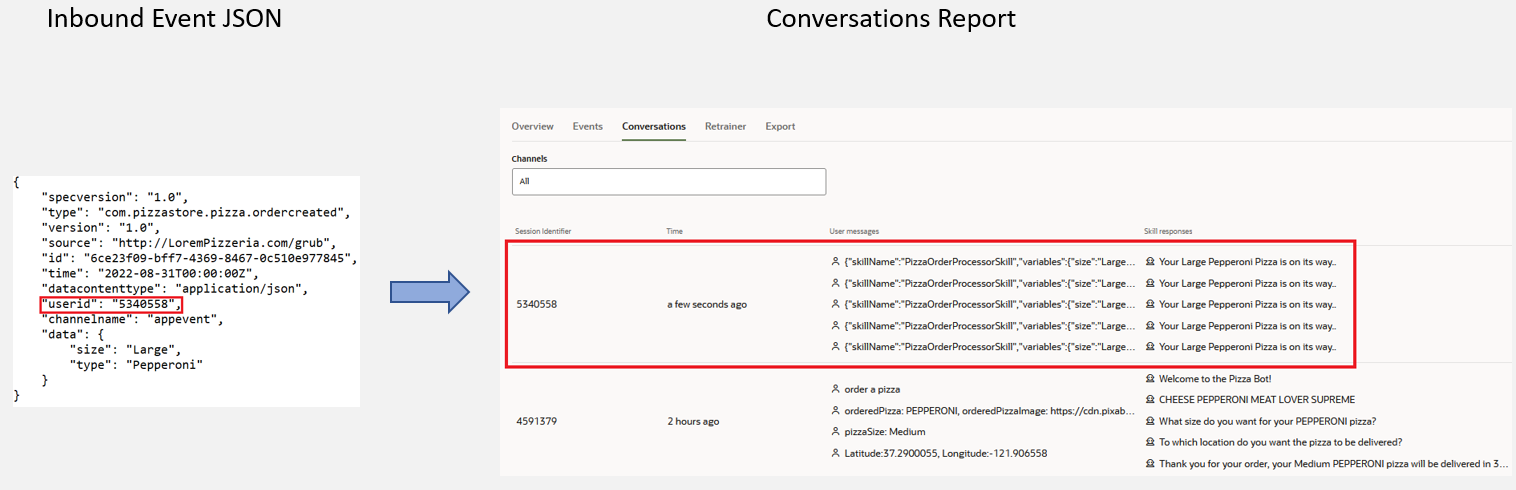

Events Insights

If your digital assistant includes skills that have been equipped to both receive events from, and publish events back to, external sources, then you can monitor the volume of these inbound and outbound events using the Event Insights' charts and graphs.

Inbound Events

- Total number of inbound messages – A tally of the events received by the skill. The inbound events with errors are also included in this total. The events tracked in these reports depend on the use case as reflected in the event JSON object. Because the content of an inbound event is provided by this object, an event may represent a repeated order made by the user. In this case, the event is not an exchange between the skill and the user, but the result of a previous conversation. When the user reviews past orders and taps Order Again, for example, the skill receives the event and sends the user a confirmation message. In terms of Insights, the tally for the Total number of inbound messages metric, which represents these repeat orders, is incremented, as is the number of completed conversations in the Overview report. In the Conversation report, these repeated orders are aggregated by the user session ID (the `userid` event context attribute).

- Number of inbound event messages with errors – The tally of inbound events that did not result in user notification messages because of errors. Typically, these are validation errors that occurred because the event payload did not conform with the schema, problems with the configuration for the skill that consumes the event, or with the configuration of the event itself.

- Number of inbound event sources – The number of sources that originate the inbound events.

- Trend of inbound messages – The volume of inbound messages (including those with errors), over time.

- Inbound messages breakdown – The total of inbound events (including those with errors) broken down by event source.

Tip:

You can create user-friendly names for the inbound event sources by creating user-defined resource bundles for them.

- Inbound messages with errors – A comparison of the inbound events that resulted in notification events to the inbound events with errors.
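To make the relationship between these inbound metrics concrete, here is a small Python sketch that derives them from a list of event records. The `(source, has_error)` tuple shape and the source names are assumptions made for illustration only; they are not the format that Insights stores or exports.

```python
from collections import Counter


def inbound_summary(events):
    """Summarize inbound events given as (source, has_error) tuples."""
    events = list(events)
    by_source = Counter(source for source, _ in events)
    with_errors = sum(1 for _, has_error in events if has_error)
    return {
        "total": len(events),                        # includes events with errors
        "with_errors": with_errors,
        "without_errors": len(events) - with_errors,
        "sources": len(by_source),                   # distinct event sources
        "by_source": dict(by_source),                # breakdown by event source
    }


# Hypothetical sample: three events from two sources, one of them with an error.
sample_events = [
    ("orders-service", False),
    ("orders-service", True),
    ("billing-service", False),
]
summary = inbound_summary(sample_events)
print(summary)
```

In this sample, the total of three includes the one event with an error, which mirrors how the report counts inbound messages.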

Outbound Events

Outbound events do not exist without the inbound events.

- Total number of outbound messages – A tally of the events published by the skills registered to the digital assistant.

- Trend of outbound messages – The volume of published events over a period of time.

- Outbound messages breakdown – The breakdown of outbound messages by source. For Release 22.10, only application events are tracked.

- Event distribution – The frequency of published events.

Tip:

You can create user-friendly names for the events in the word cloud by creating user-defined resource bundles for the event names.