Features

Here are the features that you can configure in the Oracle Web SDK.

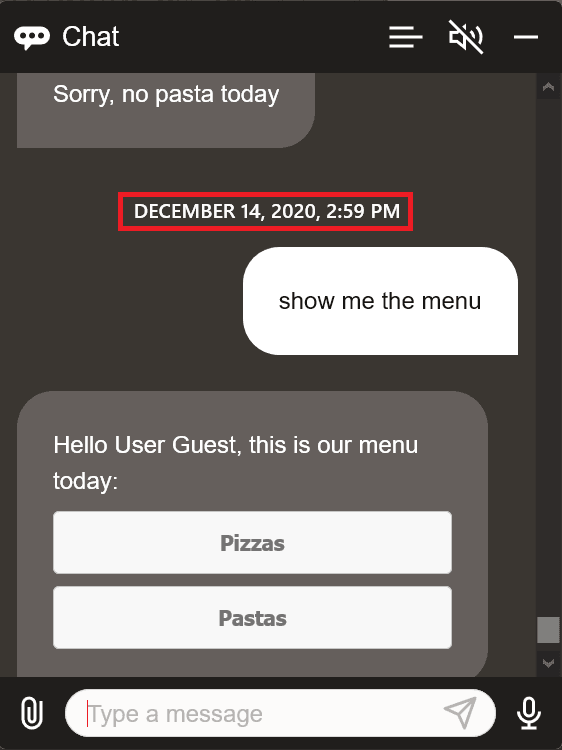

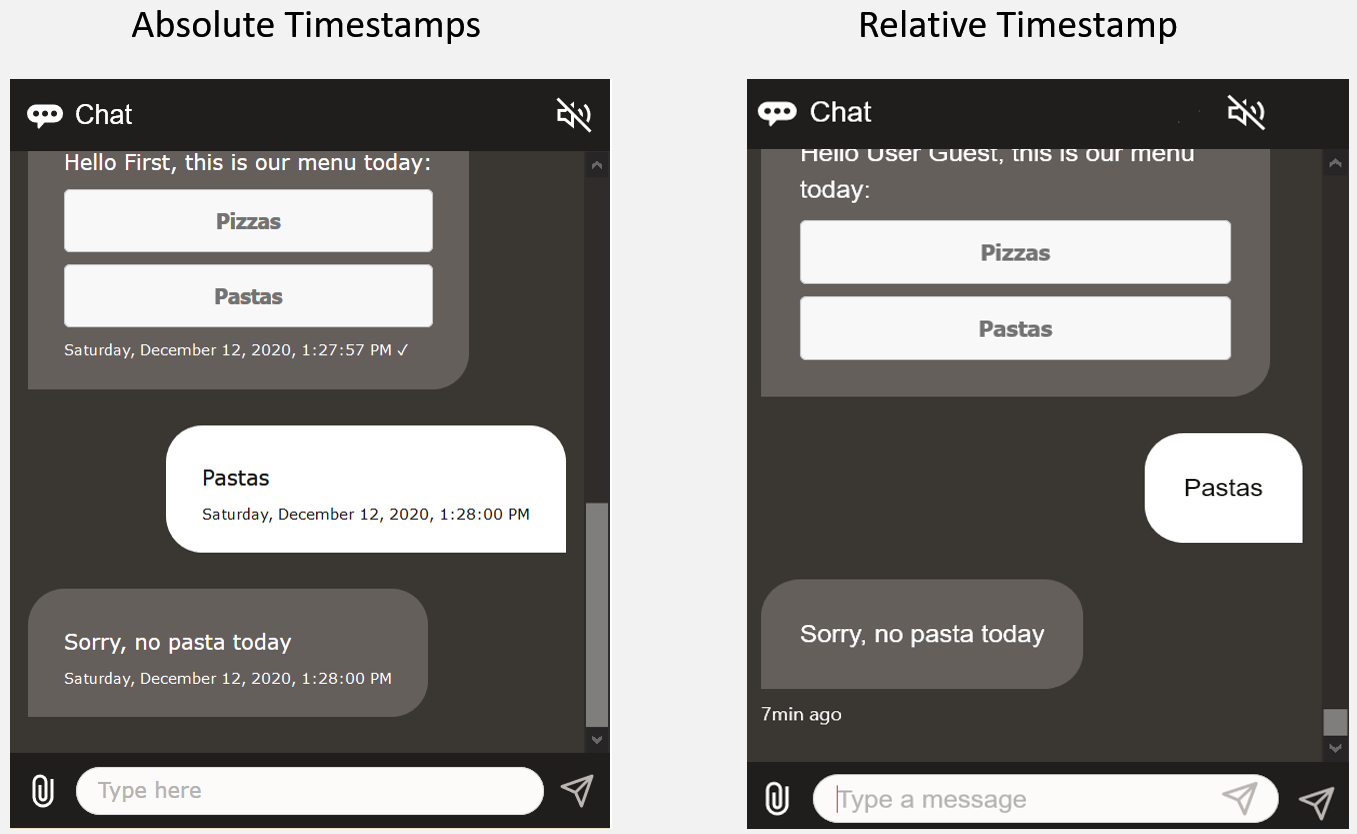

Absolute and Relative Timestamps

- Feature flag: timestampMode: 'relative' | 'absolute' (default: 'relative')

- Feature configuration: timestampMode

You can enable absolute or relative timestamps for chat messages.

Absolute timestamps display the exact time for each message. Relative timestamps display only on the latest message and express the time in terms of seconds, minutes, hours, days, months, or years ago relative to the previous message.


The precision afforded by absolute timestamps makes them ideal for archival tasks, but within the limited context of a chat session, this precision detracts from the user experience because users must compare timestamps to work out how much time has passed between messages.

Relative timestamps allow users to track the conversation easily through terms like Just Now and A few moments ago that can be immediately understood. Relative timestamps improve the user experience in another way while also simplifying your development tasks: because they mark messages in terms of seconds, minutes, hours, days, months, or years ago, you don't need to convert them for time zones.

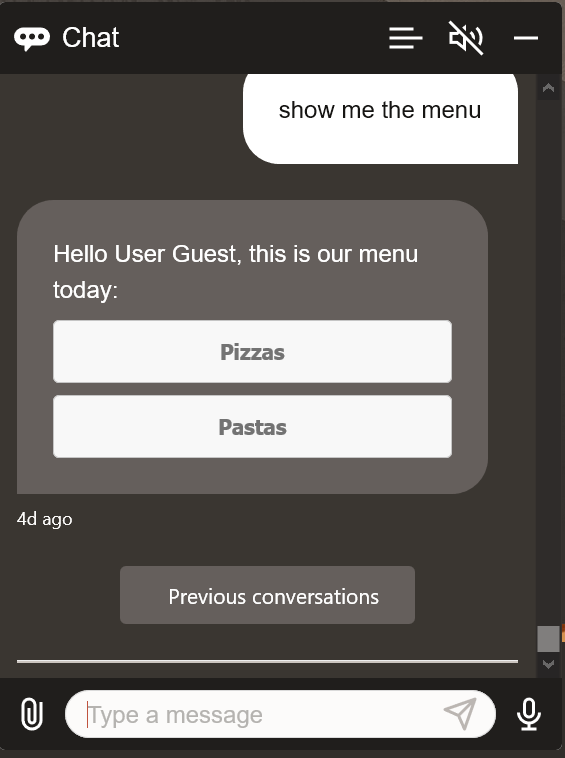

How Relative Timestamps Behave

When you use relative timestamps (timestampMode: 'relative' or timestampMode: 'default'), an absolute timestamp displays before the first message of the day as a header. This header displays when the conversation has not been cleared and older messages are still available in the history. A relative timestamp then displays on each new message.


This timestamp is updated at the following regular intervals until a new message is received:

- For the first 10 seconds

- Between 10 and 60 seconds

- Every minute between 1 and 60 minutes

- Every hour between 1 and 24 hours

- Every day between 1 and 30 days

- Every month between 1 and 12 months

- Every year after the first year
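To make the schedule concrete, the following sketch maps the time elapsed since the latest message to one of the relTime* localization keys (listed under Add a Relative Timestamp) and the number substituted for {0}. It is a model of the documented intervals, not the SDK's internal code: the function names are hypothetical, and a month is approximated as 30 days and a year as 365 days.

```javascript
// Hypothetical model of the documented relative-timestamp schedule;
// this is not the Web SDK's internal implementation.
const SECOND = 1000;
const MINUTE = 60 * SECOND;
const HOUR = 60 * MINUTE;
const DAY = 24 * HOUR;
const MONTH = 30 * DAY; // approximation: a month is treated as 30 days
const YEAR = 365 * DAY; // approximation: a year is treated as 365 days

// Default English texts of the relTime* localization keys.
const DEFAULT_STRINGS = {
  relTimeNow: 'Now',
  relTimeMoment: 'a few moments ago',
  relTimeMin: '{0}min ago',
  relTimeHr: '{0}hr ago',
  relTimeDay: '{0}d ago',
  relTimeMon: '{0}mth ago',
  relTimeYr: '{0}yr ago'
};

// Picks the localization key and the number substituted for {0}.
function relativeTimeKey(elapsedMs) {
  if (elapsedMs < 10 * SECOND) return { key: 'relTimeNow', value: null };
  if (elapsedMs < MINUTE) return { key: 'relTimeMoment', value: null };
  if (elapsedMs < HOUR) return { key: 'relTimeMin', value: Math.floor(elapsedMs / MINUTE) };
  if (elapsedMs < DAY) return { key: 'relTimeHr', value: Math.floor(elapsedMs / HOUR) };
  if (elapsedMs < MONTH) return { key: 'relTimeDay', value: Math.floor(elapsedMs / DAY) };
  if (elapsedMs < YEAR) return { key: 'relTimeMon', value: Math.floor(elapsedMs / MONTH) };
  return { key: 'relTimeYr', value: Math.floor(elapsedMs / YEAR) };
}

// Produces the label text for an elapsed time in milliseconds.
function formatRelativeTime(elapsedMs, strings = DEFAULT_STRINGS) {
  const { key, value } = relativeTimeKey(elapsedMs);
  return value === null ? strings[key] : strings[key].replace('{0}', String(value));
}
```

For example, formatRelativeTime(5 * MINUTE) yields "5min ago". Passing a different strings object shows how localized texts with the same {0} placeholder would slot in.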

Add a Relative Timestamp

- Enable relative timestamps: timestampMode: 'relative'

- Optional: Set the color for the relative timestamp: timestamp: '<a hexadecimal color value>'

- Optional: For multi-lingual skills, localize the timestamp text using these keys:

| Key | Default Text | Description |
|---|---|---|
| relTimeNow | Now | The initial timestamp, which displays for the first 9 seconds. This timestamp also displays when the conversation is reset. |
| relTimeMoment | a few moments ago | Displays for 10 to 60 seconds. |
| relTimeMin | {0}min ago | Updates every minute. |
| relTimeHr | {0}hr ago | Updates every hour. |
| relTimeDay | {0}d ago | Updates every day for the first month. |
| relTimeMon | {0}mth ago | Updates every month for the first twelve months. |
| relTimeYr | {0}yr ago | Updates every year. |

- Optional: Use the timeStampFormat settings to change the format of the absolute timestamp that displays before the first message of each day.
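A minimal settings sketch for a multi-lingual skill that enables relative timestamps and localizes their labels for Spanish. It assumes that the timestamp color is passed through a colors settings object and that translations are supplied through the i18n setting keyed by language tag; the Spanish strings and the color value are illustrative, and URI and channelId are placeholders.

```javascript
// Placeholder connection values; replace with your own.
const chatSettings = {
  URI: '<URI>',
  channelId: '<channelId>',
  timestampMode: 'relative',
  // Assumption: the relative timestamp color is set through the colors object.
  colors: {
    timestamp: '#5e5e5e'
  },
  // Illustrative Spanish texts for the relTime* keys; {0} receives the number.
  i18n: {
    es: {
      relTimeNow: 'Ahora',
      relTimeMoment: 'hace unos momentos',
      relTimeMin: 'hace {0} min',
      relTimeHr: 'hace {0} h',
      relTimeDay: 'hace {0} d',
      relTimeMon: 'hace {0} meses',
      relTimeYr: 'hace {0} años'
    }
  }
};

// In the browser, pass the settings to the SDK:
// const Bots = new WebSDK(chatSettings);
```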

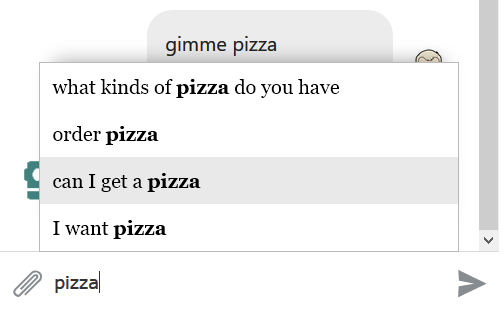

Autocomplete

- Feature flag: enableAutocomplete: true (default: false)

- Enable client-side caching: enableAutocompleteClientCache

To enable autocomplete, set enableAutocomplete: true and add a set of optimized user messages to the Create Intent page. Once enabled, a popup displays these messages after users enter three or more characters. The words in the suggested messages that match the user input are set off in bold. From there, users can enter their own input or select one of the autocomplete messages instead.

This feature is only available over WebSocket.

When a digital assistant is associated with the Oracle Web channel, all of the sample utterances configured for any of the skills registered to that digital assistant can be used as autocomplete suggestions.
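A settings sketch that turns on autocomplete together with client-side caching of suggestions. It assumes enableAutocompleteClientCache takes a boolean, and because autocomplete works only over WebSocket, it leaves enableLongPolling (described under Long Polling) at its default of false. URI and channelId are placeholders.

```javascript
// Placeholder connection values; replace with your own.
const chatSettings = {
  URI: '<URI>',
  channelId: '<channelId>',
  enableAutocomplete: true,            // suggestions after 3+ typed characters
  enableAutocompleteClientCache: true, // assumed boolean: cache suggestions client-side
  enableLongPolling: false             // autocomplete is available only over WebSocket
};

// In the browser: const Bots = new WebSDK(chatSettings);
```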

Auto-Submitting a Field

When a field has the autoSubmit property set to true, the client sends a FormSubmissionMessagePayload whose submittedField map contains the valid field values that have been entered so far. Any fields that are not set yet (regardless of whether they are required), or fields that violate a client-side validation, are not included in the submittedField map. If the auto-submitted field itself contains a value that's not valid, then the submission message is not sent and the client error message displays for that particular field. When an auto-submit succeeds, the partialSubmitField in the form submission message is set to the id of the autoSubmit field.
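The rules above can be modeled as a small function. This is a sketch, not the SDK's implementation: the field shape ({ id, value, validate }) and the function names are hypothetical, and only submittedField and partialSubmitField come from the text.

```javascript
// Hypothetical model of the auto-submit rules; the field shape
// { id, value, validate } is an assumption, not the SDK's form model.
function isSet(field) {
  return field.value !== undefined && field.value !== null && field.value !== '';
}

function isValid(field) {
  // Fields without a validator are treated as valid.
  return typeof field.validate !== 'function' || field.validate(field.value);
}

// Returns the submission data, or null when the submission must not be sent.
function buildAutoSubmitPayload(fields, autoSubmitFieldId) {
  const trigger = fields.find((field) => field.id === autoSubmitFieldId);
  // An invalid value in the auto-submitted field blocks the whole submission.
  if (trigger && isSet(trigger) && !isValid(trigger)) {
    return null;
  }
  const submittedField = {};
  for (const field of fields) {
    // Unset fields (required or not) and invalid fields are left out.
    if (isSet(field) && isValid(field)) {
      submittedField[field.id] = field.value;
    }
  }
  return { submittedField, partialSubmitField: autoSubmitFieldId };
}
```

With an invalid email field and an empty phone field, auto-submitting a valid country field sends only the name and country values, while auto-submitting the invalid email field sends nothing.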

Replacing a Previous Input Form

When the end user submits the form, either explicitly or because a field has autoSubmit set to true, the skill can send a new EditFormMessagePayload. That message should replace the previous input form message. By setting the replaceMessage channel extension property to true, you enable the SDK to replace the previous input form message with the current input form message.
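A sketch of how code that builds the skill's reply (for example, a custom component) might flag an edit-form message for replacement. It assumes channel extensions are carried in a channelExtensions object on the message payload; the helper name, the type value 'editForm', and the title field are assumptions, so check the message model reference for the exact EditFormMessagePayload shape.

```javascript
// Hypothetical helper: returns a copy of an edit-form payload with the
// replaceMessage channel extension set, without mutating the input.
function withReplaceMessage(messagePayload) {
  return {
    ...messagePayload,
    // Assumption: channel extensions travel in a channelExtensions object.
    channelExtensions: {
      ...(messagePayload.channelExtensions || {}),
      replaceMessage: true
    }
  };
}

// Placeholder payload; a real EditFormMessagePayload has more fields.
const original = { type: 'editForm', title: 'Update your order' };
const replacement = withReplaceMessage(original);
```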

Automatic RTL Layout

When the host page's base direction is set with <html dir="rtl"> to accommodate right-to-left (RTL) languages, the chat widget automatically renders on the left side. Because the widget is left-aligned for RTL languages, its icons and text elements are likewise repositioned: the icons are in the opposite positions from where they would be in a left-to-right (LTR) rendering. For example, the send, mic, and attachment icons are flipped so that the mic and send icons occupy the left side of the input field (with the directional send icon pointing left) while the attachment icon is on the right side of the input field. The alignment of text elements, such as inputPlaceholder and chatTitle, is based on whether the text language is LTR or RTL. For RTL languages, the inputPlaceholder text and chatTitle appear on the right side.

Avatars

- avatarBot: The URL of the image source, or the source string of the SVG image, that's displayed alongside the skill messages.

- avatarUser: The URL of the image source, or the source string of the SVG image, that's displayed alongside the user messages.

Additionally, if the skill has a live agent integration, the SDK can be configured to show a different icon for agent messages:

- avatarAgent: The URL of the image source, or the source string of the SVG image, that's displayed alongside the agent messages. If this value is not provided but avatarBot is set, then the avatarBot icon is used instead.

These settings can only be passed in the initialization settings. They cannot be modified dynamically.

```javascript
new WebSDK({
  URI: '<URI>',
  //...,
  icons: {
    avatarBot: '../assets/images/avatar-bot.png',
    avatarUser: '../assets/images/avatar-user.jpg',
    avatarAgent: '<svg xmlns="http://www.w3.org/2000/svg" height="24" width="24"><path d="M12 6c1.1 0 2 .9 2 2s-.9 2-2 2-2-.9-2-2 .9-2 2-2m0 9c2.7 0 5.8 1.29 6 2v1H6v-.99c.2-.72 3.3-2.01 6-2.01m0-11C9.79 4 8 5.79 8 8s1.79 4 4 4 4-1.79 4-4-1.79-4-4-4zm0 9c-2.67 0-8 1.34-8 4v3h16v-3c0-2.66-5.33-4-8-4z"/></svg>'
  }
});
```

Cross-Tab Conversation Synchronization

Feature flag: enableTabsSync: true (default: true)

Users may need to open the website in multiple tabs for various reasons. With enableTabsSync: true, you can synchronize and continue the user's conversation from any tab, as long as the connection parameters (URI, channelId, and userId) are the same across all tabs. This feature ensures that users can view messages from the skill on any tab and respond from the same tab or any other one. Additionally, if the user clears the conversation history in one tab, then it's deleted from the other tabs as well. If the user updates the chat language on one tab, then the chat language gets synchronized to the other tabs.

- When a new tab opens, it synchronizes with the existing tabs for new messages between the user and the skill. If you have not configured the SDK to display messages from the conversation history, the chat widget on the new tab initially appears empty.

- If you have configured the SDK to display conversation history, the messages from the current chat on existing tabs appear as part of the conversation history on a new tab. Setting disablePastActions to all or postback may prevent interaction with the actions for messages in the new tab.

- The Safari browser currently does not support this feature.
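A sketch of settings that every tab could share. Because synchronization depends on URI, channelId, and userId matching across tabs, the sketch builds each tab's settings from one shared connection object; the values are placeholders, and canSyncTabs is a hypothetical helper that only restates the documented condition.

```javascript
// Connection parameters that must be identical in every tab (placeholders).
const connection = {
  URI: '<URI>',
  channelId: '<channelId>',
  userId: 'user-1234'
};

const chatSettings = {
  ...connection,
  enableTabsSync: true // the default, shown here for clarity
};

// Hypothetical check: do two tabs' settings allow synchronization?
function canSyncTabs(a, b) {
  return ['URI', 'channelId', 'userId'].every((key) => a[key] === b[key]);
}

// In each tab's page: const Bots = new WebSDK(chatSettings);
```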

Custom Message Rendering

Feature flag: delegate.render: (message) => boolean (default: undefined)

You can override the default message rendering with the render delegate function, which takes the message model as the input and returns a boolean flag as the output. It must return true to replace the default rendering with your custom rendering for a particular message type. If false is returned, the default message is rendered instead.

For custom rendering, all action click handling, and the enabling or disabling of actions, must be handled explicitly.

See the samples directory to check how you can use this feature with frameworks such as React, Angular, and Oracle JavaScript Extension Toolkit (JET).
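A sketch of a render delegate that takes over card messages. It assumes, as described under Delegation, that the SDK provides a placeholder element whose id is the message's msgId, and that a card payload carries a cards array with title fields; the document object is injected so the function can be exercised outside a browser. Rendering is deferred with setTimeout because this sketch does not assume whether the placeholder already exists when render is called, and the plain-text template is illustrative only (no actions are wired up).

```javascript
// Builds a render delegate; pass window.document in the browser.
function createRenderDelegate(doc, schedule = (fn) => setTimeout(fn, 0)) {
  return function render(message) {
    const payload = message.messagePayload;
    if (!payload || payload.type !== 'card') {
      return false; // let the SDK render every other message type
    }
    // Defer so that the SDK has a chance to insert the placeholder first.
    schedule(() => {
      // Assumption: the placeholder's id is the message's msgId.
      const slot = doc.getElementById(message.msgId);
      if (slot) {
        slot.textContent = (payload.cards || []).map((card) => card.title).join(' | ');
      }
    });
    return true;
  };
}

// In the browser: Bots.setDelegate({ render: createRenderDelegate(document) });
```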

Default Client Responses

Feature flag: enableDefaultClientResponse: true (default: false)

Use this flag to provide default client-side responses along with a typing indicator when the skill response has been delayed, or when there's no skill response at all. If the user sends out the first message or query, but the skill does not respond within the number of seconds set by the defaultGreetingTimeout flag, the client displays a greeting message that's configured using the defaultGreetingMessage translation string. Next, the client checks again for the skill response. The client displays the skill response if it has been received; if it hasn't, then the client displays a wait message (configured with the defaultWaitMessage translation string) at intervals set by defaultWaitMessageInterval. When the wait for the skill response exceeds the threshold set by the typingIndicatorTimeout flag, the client displays a sorry response to the user and stops the typing indicator. You can configure the sorry response using the defaultSorryMessage translation string.
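A settings sketch for default client responses. The setting and translation-string names come from the paragraph above; the values are illustrative, defaultWaitMessageInterval is assumed to be in seconds like the other two timings, and URI and channelId are placeholders.

```javascript
// Placeholder connection values; replace with your own.
const chatSettings = {
  URI: '<URI>',
  channelId: '<channelId>',
  enableDefaultClientResponse: true,
  defaultGreetingTimeout: 2,     // seconds without a reply before the greeting
  defaultWaitMessageInterval: 3, // assumed seconds between wait messages
  typingIndicatorTimeout: 20,    // seconds before the sorry message is shown
  i18n: {
    en: {
      // Illustrative texts, not the SDK's defaults.
      defaultGreetingMessage: 'Hi! Give me a moment to look into that.',
      defaultWaitMessage: 'Still working on it. Thanks for waiting!',
      defaultSorryMessage: 'Sorry, I could not get a response. Please try again.'
    }
  }
};

// In the browser: const Bots = new WebSDK(chatSettings);
```

Keeping defaultGreetingTimeout well below typingIndicatorTimeout leaves room for the wait messages to appear before the sorry response.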

Delegation

Feature configuration: delegate

To set a delegate, pass the delegate parameter, or use the setDelegate method. The delegate object may optionally contain the beforeDisplay, beforeSend, beforePostbackSend, beforeEndConversation, and render delegate functions.

```javascript
const delegate = {
  beforeDisplay: function(message) {
    return message;
  },
  beforeSend: function(message) {
    return message;
  },
  beforePostbackSend: function(postback) {
    return postback;
  },
  beforeEndConversation: function(message) {
    return new Promise((resolve, reject) => {
      setTimeout(() => {
        resolve(message);
      }, 2000);
    });
  },
  render: function(message) {
    if (message.messagePayload.type === 'card') {
      // Perform custom rendering for card using msgId
      return true;
    }
    return false;
  }
};

Bots.setDelegate(delegate);
```

beforeDisplay

The beforeDisplay delegate allows a skill's message to be modified before it is displayed in the conversation. The message returned by the delegate displays instead of the original message. No message is displayed if the delegate returns a falsy value like null, undefined, or false. If the delegate errors out, then the original message is displayed instead of the message returned by the delegate. Use the beforeDisplay delegate to selectively apply the in-widget WebView linking behavior.

beforeSend

The beforeSend delegate allows a user message to be modified before it is sent to the chat server as part of sendMessage. The message returned by the delegate is sent to the skill instead of the original message. If the delegate returns a falsy value like null, undefined, or false, then the message is not sent. If the delegate errors out, the original message is sent instead of the message returned by the delegate.

beforePostbackSend

The beforePostbackSend delegate is similar to beforeSend, but applies to postback messages from the user. The postback returned by the delegate is sent to the skill. If it returns a falsy value, like null, undefined, or false, then no message is sent.
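A sketch of beforeSend and beforePostbackSend delegates that use the falsy-return rule to cancel sends. It assumes that a text message carries its text in messagePayload.text, which is an assumption about the message model; the trimming rule and the duplicate-postback filter (for example, against a double click) are illustrative policies, not SDK behavior.

```javascript
const delegate = {
  // Trims the user's text; returning null cancels the send.
  beforeSend: function(message) {
    const payload = message.messagePayload;
    if (payload && payload.type === 'text' && typeof payload.text === 'string') {
      const text = payload.text.trim();
      if (!text) {
        return null; // falsy: the message is not sent
      }
      return { ...message, messagePayload: { ...payload, text } };
    }
    return message;
  },
  // Drops a postback identical to the one sent immediately before it.
  beforePostbackSend: (function() {
    let last = null;
    return function(postback) {
      const key = JSON.stringify(postback);
      if (key === last) {
        return null; // falsy: no message is sent
      }
      last = key;
      return postback;
    };
  })()
};

// In the browser: Bots.setDelegate(delegate);
```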

beforeEndConversation

The beforeEndConversation delegate allows an interception at the end of a conversation flow if some pre-exit activity must be performed. The function receives the exit message as its input parameter and must return a Promise. If this Promise resolves with the exit message, then the CloseSession exit message is sent to the chat server. Otherwise, the exit message is prevented from being sent.

```javascript
...
beforeEndConversation: function(message) {
  return new Promise((resolve, reject) => {
    setTimeout(() => {
      resolve(message);
    }, 2000);
  });
}
```

render

The render delegate allows you to override the default message rendering. If the render delegate function returns true for a particular message type, then the WebSDK creates a placeholder slot instead of the default message rendering. To identify the placeholder, the msgId of the message is used as the id of the element. In the render delegate function, you can use this identifier to get a reference to the placeholder and render your custom message template. See Custom Message Rendering.

Draggable Launch Button and Widget

Feature flag: enableDraggableButton: true (default: false)

Sometimes, particularly on mobile devices where the screen size is limited, the chat widget or the launch button can block content in a web page. By setting enableDraggableButton: true, you can enable users to drag the widget or the launch button out of the way when it's blocking the view. This flag only affects the location of the launch button, not the chat widget: the widget still opens from its original location.

Dynamic Typing Indicator

Feature flag: showTypingIndicator: true

A typing indicator tells users to hold off on sending a message because the skill is preparing a response. By default, skills display the typing indicator only for their first response when you initialize the SDK with showTypingIndicator: true. For an optimal user experience, the skill should have a dynamic typing indicator, which is a typing indicator that displays after each skill response. Besides making users aware that the skill has not timed out but is still actively working on a response, displaying the typing indicator after each skill response ensures that users won’t attempt to send messages prematurely, as might be the case when the keepTurn property directs the skill to reply with a series of separate messages that don’t allow the user to interject a response.

To enable a dynamic typing indicator:

- Initialize the SDK with showTypingIndicator set to true.

- Call the showTypingIndicator API.

showTypingIndicator can only enable the display of the dynamic typing indicator when:

- The widget is connected to the Oracle Chat Server. The dynamic typing indicator will not appear when the connection is closed.

- The SDK has been initialized with showTypingIndicator set to true.

Note

This API does not work when the SDK is used in headless mode.

The typing indicator displays for the duration set by the optional typingIndicatorTimeout property, which has a default setting of 20 seconds. If the API is called while a typing indicator is already displaying, then the timer is reset and the indicator is hidden.

The typing indicator disappears as soon as the user receives the skill’s messages. The typing indicator moves to the bottom of the chat window if a user enters a message, or uploads an attachment, or sends a location, while it’s displaying.
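A sketch of the two steps above: the settings enable the indicator, and a listener calls showTypingIndicator after each skill message. It assumes the message:received event (described under Headless SDK) is also raised when the widget UI is in use; the bots parameter stands in for the Bots instance so the wiring can be tried with a stub, and enableDynamicTypingIndicator is a hypothetical helper. Whether you want the indicator after every response, or only when more messages are expected, depends on your dialog flow.

```javascript
// Placeholder connection values; replace with your own.
const chatSettings = {
  URI: '<URI>',
  channelId: '<channelId>',
  showTypingIndicator: true
};

// Registers a listener that shows the typing indicator after each skill message.
function enableDynamicTypingIndicator(bots) {
  bots.on('message:received', function() {
    bots.showTypingIndicator();
  });
}

// In the browser:
// const Bots = new WebSDK(chatSettings);
// enableDynamicTypingIndicator(Bots);
```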

Control Embedded Link Behavior

- Custom handling: linkHandler: { onclick: <function>, target: '<string>' }

- In the in-widget webview: linkHandler: { target: 'oda-chat-webview' }

- In a new window: openLinksInNewWindow: true

The Web SDK provides various ways for you to control the target location of the URL links within the conversations. By default, clicking any link in the conversation opens the linked URL in a new tab. You can configure the widget to open it in a specific target using the linkHandler setting.

The linkHandler setting expects an object that can take two optional fields, target and onclick. The target field accepts a string that identifies the location where the linked URL is to be displayed (a tab, window, or <iframe>). The following keywords have special meanings for where to load the URL:

- '_self': the current browsing context.

- '_blank': usually a new tab, but you can configure browsers to open a new window instead.

- '_parent': the parent browsing context of the current one. If there's no parent browsing context, the behavior defaults to '_self'.

- '_top': the topmost browsing context (that is, the "highest" context that's an ancestor of the current one). If there are no ancestors, it behaves as '_self'.

```javascript
const settings = {
  // ...,
  linkHandler: { target: '_blank' }
};

const Bots = new WebSDK(settings);
```

```html
<iframe name="container" width="400" height="300"></iframe>

<script>
  const settings = {
    // ...
    linkHandler: { target: 'container' }
  };

  const Bots = new WebSDK(settings);
</script>
```

The onclick property of linkHandler allows you to add an event listener on all anchor links in the conversation. The listener is fired before the link is opened, and can be used to perform actions based on the link. The listener receives, as its parameter, the event object generated by the click action. You can prevent the link from being opened by returning false from the listener. The listener is also bound to the anchor element that is clicked, so this refers to the HTMLAnchorElement inside the listener. You can set either one or both properties of the linkHandler setting, but you must pass at least one of them.

```javascript
linkHandler: {
  onclick: function(event) {
    console.log('The element clicked is', this);
    console.log('The event fired from the click is', event);
    console.log('The clicked link is', event.target.href);
    console.log('Preventing the link from being opened');
    return false;
  }
}
```

Tip:

In some scenarios, you may want to override browser preferences to open links explicitly in a new window. To do this, pass openLinksInNewWindow: true in the settings.
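A sketch of an onclick handler that lets links to an allowed set of hosts open normally and blocks all others by returning false. It relies only on the behavior described above (this is the clicked anchor, the click event is the parameter, and false cancels the link); the host list is a hypothetical example, and URI and channelId are placeholders.

```javascript
// Hypothetical list of hosts whose links may open.
const ALLOWED_HOSTS = ['www.oracle.com', 'docs.oracle.com'];

const settings = {
  URI: '<URI>',
  channelId: '<channelId>',
  linkHandler: {
    target: '_blank',
    onclick: function(event) {
      // `this` is the clicked anchor; fall back to the event target.
      const href = (this && this.href) || (event && event.target && event.target.href);
      let host;
      try {
        host = new URL(href).hostname;
      } catch (err) {
        return false; // missing or relative URL: block it
      }
      return ALLOWED_HOSTS.includes(host); // false prevents opening the link
    }
  }
};

// In the browser: const Bots = new WebSDK(settings);
```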

Embedded Mode

- Feature flag: embedded: true (default: false)

- Pass the ID of the target container element: targetElement

You can embed the widget in a web page by:

- Adding embedded: true.

- Defining the targetElement property with the ID of the DOM element (an HTML component) that's used as the widget's container (such as 'container-div' in the following snippet).

```html
<head>
  <meta charset="utf-8">
  <title>Oracle Web SDK Sample</title>
  <script src="scripts/settings.js"></script>
  <script>
    const chatSettings = {
      URI: YOUR_URI,
      channelId: YOUR_CHANNELID,
      embedded: true,
      targetElement: 'container-div'
      // ...
    };
  </script>
</head>
<body>
  <h3 align="center">The Widget Is Embedded Here!</h3>
  <div id="container-div"
       style="height: 600px; width: 380px; padding: 0; text-align: initial">
  </div>
</body>
```

The widget occupies the full width and height of the container. If it can't be accommodated by the container, then the widget won't display in the page.

End the Conversation Session

Feature flag: enableEndConversation: true (default: true)

Starting with Version 21.12, the SDK adds a close button to the chat widget header by default (enableEndConversation: true) that enables users to end the current session.

When users click this button, the SDK displays a confirmation prompt whose text you can customize with the endConversationConfirmMessage and endConversationDescription keys. When a user confirms the prompt by clicking Yes, the SDK sends the skill an event message that marks the current conversation session as ended. The instance then disconnects from the skill, collapses the chat widget, and erases the current user's conversation history. It also raises a chatend event that you can register for:

```javascript
Bots.on('chatend', function() {
  console.log('The conversation is ended.');
});
```

You can also end a session by calling the Bots.endChat() method (described in the reference that accompanies the Oracle Web SDK that's available from the Downloads page). Calling this method may be useful when the SDK is initialized in headless mode.
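A sketch that customizes the end-conversation prompt and reacts when the session ends. The two i18n keys are the ones named above, with illustrative English text; the bots parameter stands in for the Bots instance so the listener can be tried with a stub, and watchChatEnd and onEnded are hypothetical names.

```javascript
// Placeholder connection values; replace with your own.
const chatSettings = {
  URI: '<URI>',
  channelId: '<channelId>',
  enableEndConversation: true, // the default since Version 21.12
  i18n: {
    en: {
      endConversationConfirmMessage: 'Are you sure you want to end the conversation?',
      endConversationDescription: 'This also clears your conversation history.'
    }
  }
};

// Registers a chatend listener that reports when the session ended.
function watchChatEnd(bots, onEnded) {
  bots.on('chatend', function() {
    onEnded(new Date());
  });
}

// In the browser:
// const Bots = new WebSDK(chatSettings);
// watchChatEnd(Bots, (at) => console.log('Session ended at', at));
```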

Focus on the First Action in a Message

Feature flag: focusOnNewMessage: 'action' (default: 'input')

For users who prefer keyboard-based navigation (which includes power users), you can shift the focus from the user input field to the first (leftmost) action button in a message. By default, the chat widget sets the focus back to the user input field with each new message (focusOnNewMessage: 'input'). This works well for dialog flows that expect a lot of textual input from the user, but when the dialog flow contains a number of messages with actions, users can only select these actions through mousing or reverse tab navigation. For this use case, you can change the focus to the first action button in the skill message as it's received by setting focusOnNewMessage: 'action'. If the message does not contain any actions, the focus is set to the user input field.

Keyboard Shortcuts and Hotkeys

Using the hotkeys object, you can create Alt key combination shortcuts that activate, or shift focus to, UI elements in the chat widget. Users can execute these shortcuts in place of using the mouse or touch gestures. For example, users can enter Alt + L to launch the chat widget and Alt + C to collapse it. You assign the keyboard keys to elements using the hotkeys object's key-value pairs. For example:

```javascript
const settings = {
  // ...,
  hotkeys: {
    collapse: 'c', // Usage: press Alt + C to collapse the chat widget when it is expanded
    launch: 'l'    // Usage: press Alt + L to launch the chat widget when it is collapsed
  }
};
```

- You can pass only a single letter or digit for a key.

- You can use only keyboard keys a-z and 0-9 as values.

The attribute is not case-sensitive.

| Key | Element |
|---|---|
| clearHistory | The button that clears the conversation history. |
| close | The button that closes the chat widget and ends the conversation. |
| collapse | The button that collapses the expanded chat widget. |
| input | The text input field on the chat footer. |
| keyboard | The button that switches the input mode from voice to text. |
| language | The select menu that shows the language selection list. |
| launch | The chat widget launch button. |
| mic | The button that switches the input mode from text to voice. |
| send | The button that sends the input text to the skill. |
| shareMenu | The share menu button in the chat footer. |
| shareMenuAudio | The menu item in the share menu popup that selects an audio file for sharing. |
| shareMenuFile | The menu item in the share menu popup that selects a generic file for sharing. |
| shareMenuLocation | The menu item in the share menu popup that selects the user location for sharing. |
| shareMenuVisual | The menu item in the share menu popup that selects an image or video file for sharing. |
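The rules above are easy to check before passing hotkeys to the SDK. This hypothetical helper (not part of the SDK) reports unknown element names and values that are not a single letter or digit; as an extra safeguard that the text does not require, it also flags the same key assigned to two elements, comparing keys case-insensitively because the attribute is not case-sensitive.

```javascript
// Element names from the table above.
const HOTKEY_ELEMENTS = new Set([
  'clearHistory', 'close', 'collapse', 'input', 'keyboard', 'language',
  'launch', 'mic', 'send', 'shareMenu', 'shareMenuAudio', 'shareMenuFile',
  'shareMenuLocation', 'shareMenuVisual'
]);

// Returns a list of problems; an empty list means the map looks valid.
function validateHotkeys(hotkeys) {
  const problems = [];
  const seen = new Map(); // normalized key -> element that already uses it
  for (const [element, key] of Object.entries(hotkeys)) {
    if (!HOTKEY_ELEMENTS.has(element)) {
      problems.push(`unknown element "${element}"`);
    }
    if (typeof key !== 'string' || !/^[a-z0-9]$/i.test(key)) {
      problems.push(`"${element}" must be a single letter or digit`);
      continue;
    }
    const normalized = key.toLowerCase();
    if (seen.has(normalized)) {
      problems.push(`"${element}" reuses Alt + ${normalized.toUpperCase()} from "${seen.get(normalized)}"`);
    } else {
      seen.set(normalized, element);
    }
  }
  return problems;
}
```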

Headless SDK

Feature flag: enableHeadless: true (default: false)

To use the SDK without its user interface, pass enableHeadless: true in the initial settings. The communication can be implemented as follows:

- Sending messages: Calls Bots.sendMessage(message) to pass any payload to the server.

- Receiving messages: Responses can be listened for using Bots.on('message:received', <messageReceivedCallbackFunction>).

- Getting connection status updates: Listens for updates on the status of the connection using Bots.on('networkstatuschange', <networkStatusCallbackFunction>). The callback has a status parameter that is updated with values from 0 to 3, each of which maps to a WebSocket state:
  - 0: WebSocket.CONNECTING
  - 1: WebSocket.OPEN
  - 2: WebSocket.CLOSING
  - 3: WebSocket.CLOSED

- Returning suggestions for a query: Returns a Promise that resolves to the suggestions for the given query string. The Promise is rejected if it takes too long (approximately 10 seconds) to fetch the suggestions.

```javascript
Bots.getSuggestions(utterance)
  .then((suggestions) => {
    const suggestionString = suggestions.toString();
    console.log('The suggestions are: ', suggestionString);
  })
  .catch((reason) => {
    console.log('Suggestion request failed', reason);
  });
```

Note

To use this API, you need to enable autocomplete (enableAutocomplete: true) and configure autocomplete for the intents.
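A sketch of the headless wiring described above. The bots parameter stands in for a Bots instance created with enableHeadless: true, so the wiring can be tried with a stub; the status names follow the WebSocket mapping listed above. connectHeadless, its callbacks, and the choice to resolve failed suggestion requests to an empty list are hypothetical design decisions for illustration.

```javascript
// Names for the status values reported by 'networkstatuschange'.
const STATUS_NAMES = ['CONNECTING', 'OPEN', 'CLOSING', 'CLOSED'];

// Hypothetical helper that wires a headless Bots instance to UI callbacks.
function connectHeadless(bots, handlers) {
  bots.on('message:received', handlers.onMessage);
  bots.on('networkstatuschange', function(status) {
    handlers.onStatus(STATUS_NAMES[status] || 'UNKNOWN');
  });
  return {
    // Forwards any payload to the server.
    send: (message) => bots.sendMessage(message),
    // Resolves to [] instead of rejecting when a suggestion request fails.
    suggest: (query) => bots.getSuggestions(query).catch(() => [])
  };
}
```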

Multi-Lingual Chat

The Web SDK's native language support enables the chat widget to detect a user's language or allow users to select the conversation language. Users can switch between languages, but only in between conversations, not during a conversation because the conversation gets reset whenever a user selects a new language.

Enable the Language Menu

To enable the language menu, pass the multiLangChat property with an object containing the supportedLangs array, which is comprised of language tags (lang) and optional display labels (label). Outside of this array, you can optionally set the default language with the primary key (primary: 'en' in the following snippet).

```javascript
multiLangChat: {
  supportedLangs: [{
    lang: 'en'
  }, {
    lang: 'es',
    label: 'Español'
  }, {
    lang: 'fr',
    label: 'Français'
  }, {
    lang: 'hi',
    label: 'हिंदी'
  }],
  primary: 'en'
}
```

Tip:

You can add an event listener for the chatlanguagechange event (described in the reference that accompanies the Oracle Web SDK that's available from the Downloads page), which is triggered when a chat language has been selected from the dropdown menu or has been changed.

```javascript
Bots.on('chatlanguagechange', function(language) {
  console.log('The selected chat language is', language);
});
```

- You need to define a minimum of two languages to enable the dropdown menu to display.

- The label key is optional for the natively supported languages: fr displays as French in the menu, es displays as Spanish, and so on.

- Labels for the languages can be set dynamically by passing the labels with the i18n setting. You can set the label for any language by passing it to its language_<languageTag> key. This pattern allows setting labels for any language, supported or unsupported, and also allows translations of the label itself in different locales. For example:

```javascript
i18n: {
  en: {
    language_de: 'German',
    language_en: 'English',
    language_sw: 'Swahili',
    language_tr: 'Turkish'
  },
  de: {
    language_de: 'Deutsch',
    language_en: 'Englisch',
    language_sw: 'Swahili',
    language_tr: 'Türkisch'
  }
}
```

If the i18n property includes translation strings for the selected language, then the text for fields like the input placeholder, the chat title, the hover text for buttons, and the tooltip titles automatically switches to the selected language. The field text can only be switched to a different language when there are translation strings for the selected language. If no such strings exist, then the language for the field text remains unchanged.

- The widget automatically detects the language in the user profile and activates the Detect Language option if you omit the primary key.

- While label is optional, if you've added a language that's not one of the natively supported languages, then you should add a label to identify the tag, especially when there is no i18n string for the language. For example, if you don't define label: 'हिंदी' for lang: 'hi', then the dropdown displays hi instead, contributing to a suboptimal user experience.
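A hypothetical helper (not part of the SDK) that checks a multiLangChat object against the rules above: it warns when fewer than two languages are listed, when primary is set to something other than null, 'und', or one of the supported languages, and when a language has no label. The default list of tags that need no label (en, es, fr) is an assumption based on the examples in this section.

```javascript
// Returns warnings for a multiLangChat configuration; an empty list means OK.
function checkMultiLangChat(config, nativeTags = ['en', 'es', 'fr']) {
  const warnings = [];
  const supported = config.supportedLangs;
  if (!supported) {
    return warnings; // primary alone sets the language without a dropdown
  }
  const langs = supported.map((entry) => entry.lang);
  if (langs.length < 2) {
    warnings.push('at least two languages are needed to show the dropdown');
  }
  const primary = config.primary;
  if (primary != null && primary !== 'und' && !langs.includes(primary)) {
    warnings.push(`primary "${primary}" is not in supportedLangs`);
  }
  for (const { lang, label } of supported) {
    if (!label && !nativeTags.includes(lang)) {
      warnings.push(`add a label for "${lang}"`);
    }
  }
  return warnings;
}
```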

Disable Language Menu

Starting with Version 21.12, you can also configure and update the chat language without having to configure the language selection dropdown menu by passing multiLangChat.primary in the initial configuration without also passing a multiLangChat.supportedLangs array. The value passed in the primary variable is set as the chat language for the conversation.

Language Detection

If you omit the primary key, the widget automatically detects the language in the user profile and activates the Detect Language option in the menu.

You can dynamically update the selected language by calling the setPrimaryChatLanguage(lang) API. If the passed lang matches one of the supported languages, then that language is selected. When no match can be found, Detect Language is activated. You can also activate the Detect Language option by calling the setPrimaryChatLanguage('und') API, where 'und' indicates undetermined, or by passing either multiLangChat: {primary: null} or multiLangChat: {primary: 'und'}.

You can call the setPrimaryChatLanguage(lang) API even when the dropdown menu has not been configured. For example:

```javascript
Bots.setPrimaryChatLanguage('fr');
```

Voice recognition, when configured, is available when users select a supported language. It is not available when the Detect Language option is set. Selecting a language that is not supported by voice recognition disables the recognition functionality until a supported language has been selected.

Multi-Lingual Chat Quick Reference

| To do this... | ...Do this |
|---|---|
| Display the language selection dropdown to end users. | Pass multiLangChat.supportedLangs. |
| Set the chat language without displaying the language selection dropdown menu to end users. | Pass multiLangChat.primary. |
| Set a default language. | Pass multiLangChat.primary with multiLangChat.supportedLangs. The primary value must be one of the supported languages included in the array. |
| Enable language detection. | Pass primary: null or primary: 'und' with multiLangChat. |
| Dynamically update the chat language. | Call the setPrimaryChatLanguage(lang) API. |

In-Widget Webview

You can configure the link behavior in chat messages to allow users to access web pages from within the chat widget. Instead of having to switch from the conversation to view a page in a tab or separate browser window, a user can remain in the chat because the chat widget opens the link within a Webview.

Configure the Linking Behavior to the Webview

- To open all links in the webview, pass linkHandler: { target: 'oda-chat-webview' } in the settings. This sets the target of all links to oda-chat-webview, which is the name of the iframe in the webview.

- To open only certain links in the webview while ensuring that other links open normally in other tabs or windows, use the beforeDisplay delegate. To open a specific message URL action in the webview, replace the action.type field’s 'url' value with 'webview'. When the action type is 'webview' in the beforeDisplay function, the action button opens the link in the webview when clicked.
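A sketch of the selective approach: a beforeDisplay delegate that switches URL actions to 'webview' only for links on one host. It assumes that actions appear in messagePayload.actions and, for cards, in messagePayload.cards[].actions, each with type and url fields; the host name is a hypothetical example. The delegate returns copies rather than mutating the original message.

```javascript
const WEBVIEW_HOST = 'help.example.com'; // hypothetical host to open in the webview

// Returns a webview copy of a URL action on WEBVIEW_HOST; other actions pass through.
function toWebview(action) {
  if (action && action.type === 'url') {
    try {
      if (new URL(action.url).hostname === WEBVIEW_HOST) {
        return { ...action, type: 'webview' };
      }
    } catch (err) {
      // Leave malformed URLs unchanged.
    }
  }
  return action;
}

const delegate = {
  beforeDisplay: function(message) {
    const payload = message.messagePayload;
    if (!payload) {
      return message;
    }
    const updated = { ...payload };
    if (Array.isArray(payload.actions)) {
      updated.actions = payload.actions.map(toWebview);
    }
    if (Array.isArray(payload.cards)) {
      updated.cards = payload.cards.map((card) =>
        Array.isArray(card.actions) ? { ...card, actions: card.actions.map(toWebview) } : card);
    }
    return { ...message, messagePayload: updated };
  }
};

// In the browser: Bots.setDelegate(delegate);
```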

Open Links from Within the Webview

Links that are embedded within a page that displays within the WebView can only be opened within the WebView when they are converted into an anchor element (<a>) with a target attribute defined as target="oda-chat-webview".

Customize the WebView

You can customize the WebView with the webViewConfig setting, which accepts an object. For example:

```javascript
{
  referrerPolicy: 'no-referrer-when-downgrade',
  closeButtonType: 'icon',
  size: 'tall'
}
```

The configuration can also be updated dynamically by passing a webViewConfig object in the setWebViewConfig method. Every property in the object is optional.

| Field | Value | Description |
|---|---|---|
| accessibilityTitle | String | The name of the WebView frame element for Web Accessibility. |
| closeButtonIcon | String | The image URL/SVG string that is used to display the close button icon. |
| closeButtonLabel | String | Text label/tooltip title for the close button. |
| closeButtonType | String (for example, 'icon') | Sets how the close button is displayed in the WebView. |
| referrerPolicy | ReferrerPolicy | Indicates which referrer to send when fetching the frame's resource. The referrerPolicy value must be a valid directive. The default policy applied is 'no-referrer-when-downgrade'. |
| sandbox | A String array | An array of valid restriction strings that allows for the exclusion of certain actions inside the frame. The restrictions that can be passed to this field are included in the description of the sandbox attribute in MDN Web Docs. |
| size | 'tall' or 'full' | The height of the WebView compared to the height of the chat widget. When set to 'tall', it is set as 80% of the widget's height; when set to 'full', it equals the widget's height. |
| title | String | The title that's displayed in the header of the WebView container. |

- Pages that return the response header X-Frame-Options: deny or X-Frame-Options: sameorigin may not open in the WebView, because of server-side restrictions that prevent the page from being opened inside iframes. In such cases, the WebView presents the link back to the user so that they can open it in a new window or tab.
- Because of server-side restrictions, authorization pages can't be opened inside the WebView: authorization pages always return X-Frame-Options: deny to prevent clickjacking attacks.
- External links can't open correctly within the WebView. Only links embedded in the conversation messages can be opened in the WebView.

Note

Because external links are incompatible with the WebView, don't target any external link to be opened in the WebView.

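You can customize the text that's displayed when a link can't be opened in the WebView with the webViewErrorInfoText i18n translation string:

```javascript
const settings = {
  URI: 'instance',
  //...,
  i18n: {
    en: {
      webViewErrorInfoText: 'This link can not be opened here. You can open it in a new page by clicking {0}here{/0}.'
    }
  }
};
```

Long Polling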

Feature flag: enableLongPolling: true (default: false)

The SDK uses WebSockets to connect to the server and converse with skills. If WebSockets are blocked on the network for some reason, the SDK can use traditional HTTP calls to chat with the skill instead. This feature is known as long polling because the SDK must continuously call, or poll, the server to fetch the latest messages from the skill. You can enable this fallback by passing enableLongPolling: true in the initial settings.
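To turn on the SDK's fallback, you only need the documented flag. To show what "polling" means here, the following is a minimal sketch of a generic long-polling loop. It is not the Web SDK's internal code. The functions longPoll and pollOnce are hypothetical: pollOnce stands in for one HTTP request that resolves with an array of new messages once the server responds.

```javascript
// Enabling the SDK's own fallback ('instance' is a placeholder URI).
const chatWidgetSettings = { URI: 'instance', enableLongPolling: true };

// Generic long-polling loop (illustration of the technique only).
async function longPoll(pollOnce, onMessage, { maxRounds = Infinity, retryDelayMs = 1000 } = {}) {
  let rounds = 0;
  while (rounds < maxRounds) {
    rounds += 1;
    try {
      // Wait for the server to return new messages, deliver them, then poll again.
      const messages = await pollOnce();
      for (const message of messages) onMessage(message);
    } catch (err) {
      // On a failed request, wait before retrying so the server isn't flooded.
      await new Promise((resolve) => setTimeout(resolve, retryDelayMs));
    }
  }
  return rounds;
}
```

Each round trip delivers whatever arrived since the previous one. This is why long polling costs more requests than a single open WebSocket, and why it serves as a fallback rather than the default.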

Typing Indicator for User-Agent Conversations

Feature flag: enableSendTypingStatus: boolean (default: false)

This feature lets agents find out whether users are still engaged in the conversation by sending the user's status to the live agent. When enableSendTypingStatus is set to true, the SDK sends a RESPONDING typing status event, along with the text that the user is currently typing, to Oracle B2C Service or Oracle Fusion Service. This, in turn, displays a typing indicator on the agent console. When the user has finished typing, the SDK sends a LISTENING event to the service to hide the typing indicator on the agent console.

The typingStatusInterval configuration, which has a minimum value of three seconds, throttles the typing status updates.

In addition, enableAgentSneakPreview (which is false by default) must be set to true, and Sneak Preview must be enabled in the Oracle B2C Service chat configuration.
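The following minimal sketch illustrates the kind of throttling that typingStatusInterval controls. It is not the SDK's code, because the SDK handles this itself once the flags are set. The names createTypingReporter and sendStatus and the { status, text } event shape are hypothetical. The sketch also assumes that typingStatusInterval is expressed in seconds.

```javascript
// Settings named in this section (typingStatusInterval assumed to be in seconds).
const typingSettings = {
  enableSendTypingStatus: true,
  typingStatusInterval: 3,
  enableAgentSneakPreview: true,
};

// Hypothetical throttled reporter; `now` can be replaced by a fake clock.
function createTypingReporter(sendStatus, intervalSeconds = 3, now = () => Date.now()) {
  // Mirror the documented three-second minimum.
  const intervalMs = Math.max(intervalSeconds, 3) * 1000;
  let lastSentAt = -Infinity;
  let typing = false;

  return {
    // Call on every keystroke; sends RESPONDING at most once per interval.
    onInput(text) {
      const t = now();
      if (t - lastSentAt >= intervalMs) {
        sendStatus({ status: 'RESPONDING', text });
        lastSentAt = t;
      }
      typing = true;
    },
    // Call when the user stops typing; sends LISTENING once.
    onStopTyping() {
      if (!typing) return;
      sendStatus({ status: 'LISTENING' });
      typing = false;
      lastSentAt = -Infinity; // the next keystroke reports RESPONDING right away
    },
  };
}
```

For example, createTypingReporter(send, typingSettings.typingStatusInterval) reports at most one RESPONDING event every three seconds while the user types, no matter how many keystrokes occur.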

You don't have to configure live typing status on the user side: the user can see the agent's typing status by default. When the agent is typing, the SDK receives a RESPONDING status message, which displays a typing indicator in the user's view. Similarly, when the agent is idle, the SDK receives a LISTENING status message, which hides the typing indicator.
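The SDK already handles the agent-to-user direction described above. As an illustration only, the mapping from status messages to indicator visibility can be expressed as a tiny state function. The function name nextIndicatorState and the { status } message shape are assumptions.

```javascript
// Hypothetical mapping of agent status messages to typing-indicator visibility.
function nextIndicatorState(isVisible, statusMessage) {
  switch (statusMessage.status) {
    case 'RESPONDING':
      return true; // agent is typing: show the indicator
    case 'LISTENING':
      return false; // agent is idle: hide the indicator
    default:
      return isVisible; // any other message leaves the indicator unchanged
  }
}
```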

Voice Recognition

- enableSpeech: true (default: false): Setting enableSpeech: true displays the microphone button in place of the send button whenever the user input field is empty.
- enableSpeechAutoSend: Controls whether the text that's recognized from the user's voice is sent directly to the chat server, with no manual input from the user, or is placed in the input field instead. By setting this property to true (the default), you allow the user's speech response to be sent to the chat server automatically. By setting it to false, you allow the user to edit or delete the message before it's sent to the chat server.
- startVoiceRecording(onSpeechRecognition, onSpeechNetworkChange, options) / stopVoiceRecording(): Your skill can also use voice recognition through the startVoiceRecording(onSpeechRecognition, onSpeechNetworkChange) method to start recording and the stopVoiceRecording method to stop recording. (These methods are described in the User's Guide that's included with the SDK.)
- setSpeechLocale(locale): Sets the speech recognition locale, which must be a supported recognition locale.
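The following sketch combines the two documented speech flags with a hypothetical handler that mirrors the auto-send behavior described above. The SDK performs this step itself. The names handleRecognizedText, send, and populateInput are illustrative only, and the sketch treats an unset enableSpeechAutoSend as true, matching the documented default.

```javascript
// Documented speech settings.
const speechSettings = {
  enableSpeech: true, // show the microphone button when the input field is empty
  enableSpeechAutoSend: false, // let users edit recognized text before sending
};

// Hypothetical handler illustrating what enableSpeechAutoSend decides.
function handleRecognizedText(text, settings, { send, populateInput }) {
  // enableSpeechAutoSend defaults to true when it isn't set.
  const autoSend = settings.enableSpeechAutoSend !== false;
  if (autoSend) {
    send(text); // goes straight to the chat server
  } else {
    populateInput(text); // user can edit or delete it first
  }
  return autoSend ? 'sent' : 'populated';
}
```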

OCI Speech Integration

Feature configuration: ociSpeechConfig

OCI Speech Live Transcribe uses the Whisper speech recognition model to support many languages, independent of locale, that ODA Speech doesn't support.

You can test Live Transcribe by following these steps. If you get a message saying that you are unauthorized, contact your administrator to set up the policies that grant you access to the speech resources. For other questions, refer to the Speech documentation.

1. Authenticate to OCI.

2. Retrieve and return the OCI Speech Realtime JWT.

For Step 1, we recommend running the server on OCI using the Instance Principal authentication method.

Local Testing

This script is for local testing only. It is not secure and should not be used in production.

```javascript
const common = require('oci-common'); // For authenticating (see https://www.npmjs.com/package/oci-common)
const aispeech = require('oci-aispeech'); // To retrieve the JWT token (see https://www.npmjs.com/package/oci-aispeech)
const express = require('express');
const os = require('os');

const PORT = 8085; // Change if needed
const COMPARTMENT_ID = ''; // OCID of the compartment to use
const PROFILE_NAME = 'OCI_SPEECH'; // A separate profile, so that an existing default profile isn't overwritten
const PROFILE_PATH = `${os.homedir()}/.oci/config`;
const REGION = ''; // OCI region identifier

async function getRealtimeSessionToken(region, compartmentId) {
  try {
    // Use the AuthDetailsProvider suited for your use case.
    // Read more at - https://docs.oracle.com/en-us/iaas/Content/API/Concepts/sdk_authentication_methods.htm
    const authenticationProvider = new common.SessionAuthDetailProvider(PROFILE_PATH, PROFILE_NAME);
    authenticationProvider.setRegion(region);

    // Initialize the OCI AI Speech API Client
    const speechClient = new aispeech.AIServiceSpeechClient({ authenticationDetailsProvider: authenticationProvider });

    // Create a request and dependent object(s).
    const createRealtimeSessionTokenDetails = {
      compartmentId: compartmentId,
    };
    const createRealtimeSessionTokenRequest = {
      createRealtimeSessionTokenDetails: createRealtimeSessionTokenDetails,
    };

    // Send request to the Client.
    const createRealtimeSessionTokenResponse = await speechClient.createRealtimeSessionToken(createRealtimeSessionTokenRequest);
    return { realtimeSessionToken: createRealtimeSessionTokenResponse.realtimeSessionToken.token };
  } catch (e) {
    console.error(e);
    // Return the error; the route handler uses its statusCode (if any) as the HTTP status.
    return e;
  }
}

const app = express();
app.use(express.json());

app.get('/getToken', async (_request, response) => {
  const token = await getRealtimeSessionToken(REGION, COMPARTMENT_ID);
  // Allow the chat widget, served from another origin, to call this endpoint.
  response.setHeader('Access-Control-Allow-Origin', '*');
  if (token.realtimeSessionToken) {
    response.send({
      compartmentId: COMPARTMENT_ID,
      region: REGION,
      token: token.realtimeSessionToken
    });
  } else {
    response.status(token.statusCode || 500).send();
  }
});

app.listen(PORT, () => {
  console.log('OCI speech token server listening on port:', PORT);
});
```

Authenticate the OCI CLI session for the PROFILE_NAME and REGION noted in the snippet above. When the OCI session expires, run this command in the terminal again:

```shell
oci session authenticate --profile-name OCI_SPEECH --region <region> --tenancy-name <tenancy-name>
```

In the settings.js file, add the following to retrieve the OCI Speech Realtime JWT from the Node server:

```javascript
/**
 * Example of OCI speech configuration
 */
async function getOCIAuthCredentials() {
  const response = await fetch('http://localhost:8085/getToken');
  if (!response.ok) throw new Error(String(response.status));
  return response.json(); // { compartmentId, region, token } from the token server
}

// Declared before chatWidgetSettings, which references it.
const ociSpeechConfig = {
  getOCIAuthCredentials: getOCIAuthCredentials,
  useOCISpeech: 'always' // default is auto
};

const chatWidgetSettings = {
  // ...
  ociSpeechConfig: ociSpeechConfig
};
```

Voice Visualizer

Feature configuration: enableSpeechAutoSend

Voice mode is indicated when the keyboard

Description of the illustration voice-visualizer.png

When enableSpeechAutoSend is true (enableSpeechAutoSend: true), messages are sent automatically after they're recognized. Setting enableSpeechAutoSend: false switches the input mode to text after the voice message is recognized, allowing users to edit or complete their messages using text before sending them manually. Alternatively, users can complete their message by voice with a subsequent click of the voice icon before sending it manually.

The voice visualizer is created using AnalyserNode, an interface of the Web Audio API. You can implement the voice visualizer in headless mode using the startVoiceRecording method. Refer to the SDK to find out more about AnalyserNode and frequency levels.
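For a headless implementation, the following rough sketch shows the kind of processing a visualizer needs. It is not the SDK's own visualizer. The hypothetical helper toBarLevels converts the byte frequency data that AnalyserNode.getByteFrequencyData fills in (one value from 0 to 255 per frequency bin) into a few bar heights between 0 and 1 by averaging adjacent bins. Averaging is an assumed design choice. The commented browser wiring uses standard Web Audio API calls; drawBars and stream are placeholders.

```javascript
// Hypothetical helper: average frequency bins into `barCount` levels in [0, 1].
// Bins left over when the length isn't divisible by barCount are ignored.
function toBarLevels(frequencyData, barCount) {
  const binsPerBar = Math.max(1, Math.floor(frequencyData.length / barCount));
  const levels = [];
  for (let bar = 0; bar < barCount; bar++) {
    const start = bar * binsPerBar;
    const end = Math.min(start + binsPerBar, frequencyData.length);
    let sum = 0;
    for (let i = start; i < end; i++) sum += frequencyData[i];
    // Normalize by the maximum possible byte value (255) per bin.
    levels.push(end > start ? sum / ((end - start) * 255) : 0);
  }
  return levels;
}

// Browser wiring (sketch; `stream` is a microphone MediaStream, drawBars is hypothetical):
//   const ctx = new AudioContext();
//   const analyser = ctx.createAnalyser();
//   ctx.createMediaStreamSource(stream).connect(analyser);
//   const data = new Uint8Array(analyser.frequencyBinCount);
//   analyser.getByteFrequencyData(data); // call once per animation frame
//   drawBars(toBarLevels(data, 8));
```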