Creating a Table

This feature is not available for MySQL database services.

To create a table for a specific schema, in the Navigator tab, select Tables from the object type drop-down list, click Object submenu, and select Create Object.

To create a table from an existing one for a specific schema, right-click the table object in the Navigator tab, and select Use as Template.

To edit a table for a specific schema, right-click a table object in the Navigator tab, and select Edit.

The table properties are grouped in several panes.

If you are editing an existing table, you can visit the panes in any order. If you click Create before you finish creating the table, right-click the table name, select Edit, and continue creating the table.

Editing a partitioned table is not recommended. To identify whether a table is partitioned or not, right-click the table name and select Edit. If the table is partitioned, a warning message will be displayed.

Schema: Database schema in which to create the table. By default, a new table is created in the existing schema or the schema that you are logged into.

Name: Name for the table.

The different panes are described in the following sections:

Columns Pane

Specifies properties for each column in the table.

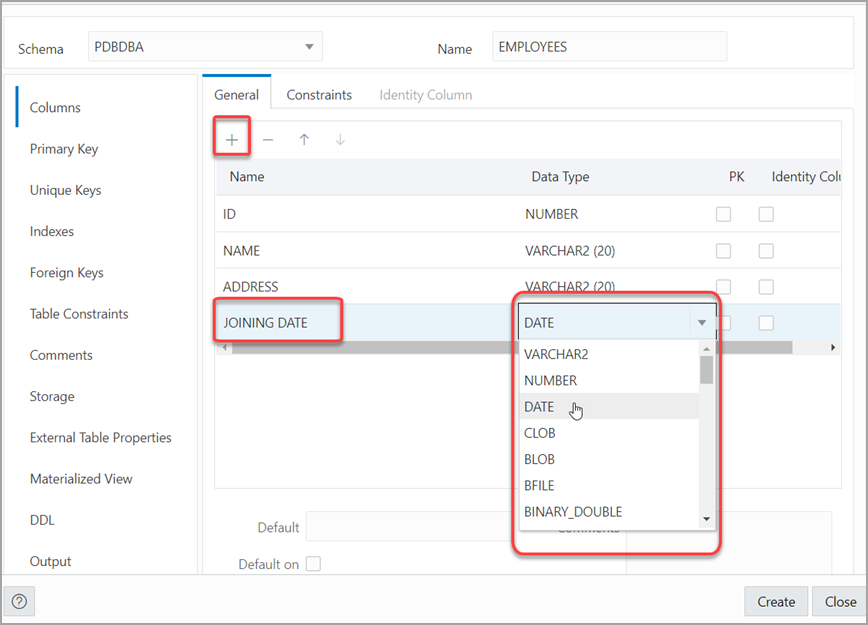

General tab

Lists the columns available in the table.

To add a column, click Add Column (+). A new row is added to the table below. Select the row and enter the details for the column.

To delete a column, select the row and click Remove Column (-). To move a column up or down in the table, select it and use the up-arrrow and down-arrow icons.

The fields are:

-

Name: Name for the column.

-

Datatype: Data type for the column.

-

Default: If no value is specified, the default value inserted into the column when a row is inserted.

-

Default on NULL: Applicable for Oracle Database 12c and later releases. If this option is selected, when a row is inserted into the table and the value specified for the column is NULL, the default value is inserted into the column.

-

Expression: Expression for computing the value in the column.

-

Comments: Optional descriptive comments about the column. Use this field to provide descriptions for the attributes.

In the table:

-

PK: If this option is selected, the column becomes the primary key.

-

Identity Column: If this option is selected, the column becomes an identity column. This is applicable only for Oracle Database 12c and later releases. For more details, see the Identity Column tab.

Constraints tab

Displays the Not Null and Check Constraints for a column. A check constraint requires values in a column to comply with a specified condition.

-

Not Null Constraint: Name: Name for the Not Null constraint.

-

Not Null Constraint: Not Null: If this option is selected, the column must contain data. You cannot specify no value or an explicit null value for this column when you insert a row. If this option is not checked, the column can contain either data or no data. A primary key column cannot be null.

-

Check Constraint: Name: Name for the check constraint definition.

-

Check Constraint: Constraint: Condition that must be met for a column to fulfill the check constraint. You can use any valid CHECK clause (without the CHECK keyword). For example, to indicate that the value in a numeric column named RATING must be from 1 to 10, you can specify: rating >=1 and rating <= 10.

-

Enabled: If this option is selected, the constraint is checked when data is entered or updated in the column.

-

Deferrable: If this option is selected, you can defer checking the validity of the constraint until the end of a transaction.

-

Initially Immediate: If this option is selected, the constraint is checked whenever you add, update, or delete data from the column.

-

Validate: If this option is selected, the existing data is checked to see if it conforms to the constraint.

Identity Column tab

Applicable for Oracle Database 12c and later releases. The Identity Column tab lists the properties of the identity column. This tab becomes available only after the Identity Column checkbox is selected for the column in the General tab. An identity column is an autoincrement column that can be used to identify a table row. Only one identity column can be specified for a table.

-

Generate: Always means that values cannot be explicitly included for the identity column in INSERT OR UPDATE statements, By Default means values for the identity column are generated automatically if no values are specified explicitly, By Default on Null means values are generated for the column only when a NULL value is supplied.

-

Start with: Starting value of the sequence.

-

Increment: Interval between successive numbers in a sequence.

-

Min value: Lowest possible value for the sequence. The default is 1 for an ascending sequence and -(10^26) for a descending sequence.

-

Max value: Highest possible value for the sequence. The default is 10^27 for an ascending sequence and -1 for a descending sequence.

-

Cache and Cache size: Cache causes sequence values to be preallocated in cache, which can improve application performance; Cache size indicates the number of sequence values preallocated in cache. No Cache causes sequence values not to be preallocated in cache.

-

Cycle: Indicates whether the sequence "wraps around" to reuse numbers after reaching its maximum value (for an ascending sequence) or its minimum value (for a descending sequence). If cycling of values is not enabled, the sequence cannot generate more values after reaching its maximum or minimum value.

-

Order: Indicates whether sequence numbers are generated in the order in which they are requested. If No Order is specified, sequence numbers are not guaranteed to be in the order in which they were requested.

Primary Key Pane

Specifies the primary key for the table.

The primary key is the column, or set of columns, that uniquely identifies each row in the table. If the Primary Key checkbox is selected for a column in the General tab, the corresponding fields are automatically populated in the Primary Key pane. You can make changes to the properties as required.

An index is automatically created on the primary key.

-

Name: Name of the constraint to be associated with the primary key definition.

-

Enabled: If this option is checked, the primary key constraint is enforced: that is, the data in the primary key column (or set of columns) must be unique and not null.

-

Index: Name of the index to which the primary key refers.

-

Tablespace: Name of the tablespace associated with the index.

-

Available Columns: Lists the columns that are available to be added to the primary key definition. You can select multiple attributes, if required, for the primary key.

-

Selected Columns: Lists the columns that are included in the primary key definition.

To add a column to the primary key definition, select it in Available Columns and click the Add (>) icon; to remove a column from the primary key definition, select it in Selected Columns and click the Remove (<) icon. To move all columns from available to selected (or the reverse), use the Add All (>>) or Remove All (<<) icon. To move a column up or down in the primary key definition, select it in Selected Columns and use the arrow buttons.

Unique Keys Pane

Specifies one or more unique constraints for the table.

A unique constraint specifies a column, or set of columns, whose data values must be unique: each data value must not be null, and it must not be the same as any other value in the column.

To add a unique constraint, click the Add button; to delete a unique constraint, select it and click the Remove button.

-

Name: Name of the unique constraint.

-

Enabled: If this option is selected, the unique constraint is enforced.

-

Rely: If this option is selected, the constraint in NOVALIDATE mode is taken into account during query rewrite.

-

Deferrable: If this option is selected, in subsequent transactions, constraint checking can be deferred until the end of the transaction using the SET CONSTRAINT(S) statement.

-

Initially Immediate: If this option is selected, the constraint is checked at the end of each subsequent SQL statement.

-

Validate: If the option is selected, the existing data is checked to see if it conforms to the constraint.

-

Index: Name of the index to which the unique key refers.

-

Tablespace: Name of the tablespace associated with the index.

-

Available Columns: Lists the columns that are available to be added to the unique constraint definition.

-

Selected Columns: Lists the columns that are included in the unique constraint definition.

To add a column to the unique constraint definition, select it in Available Columns and click the Add (>) icon; to remove a column from the unique constraint definition, select it in Selected Columns and click the Remove (<) icon. To move all columns from available to selected (or the reverse), use the Add All (>>) or Remove All (<<) icon. To move a column up or down in the unique constraint definition, select it in Selected Columns and use the arrow buttons.

Indexes Pane

Lists the indexes defined for the table.

To add an index, click Add Index (+); to delete an index, select it and click Remove Index (-).

-

Name: Name of the index.

-

Type: The type of Oracle index.

Non-uniquemeans that the index can contain multiple identical values;Uniquemeans that no duplicate values are permitted;Bitmapstores rowids associated with a key value as a bitmap. -

Tablespace: Name of the tablespace for the index.

-

Expression: A column expression is an expression built from columns, constants, SQL functions, and user-defined functions. When you specify a column expression, you create a function-based index.

-

Available Columns and Selected Columns: Columns selected for the index. To select a column, click the column in the Available Columns box, and then click the click the Add Selected Columns icon to move it to the Selected Columns box.

Foreign Keys Pane

Specifies one or more foreign keys for the table.

A foreign key specifies a column ("local column"), whose data values match values in the primary key or unique constraint of another table.

-

Name: Name of the foreign key definition.

-

Enabled: If this option is checked, the foreign key is enforced.

-

Rely, Deferrable, Initially Immediate, Validate: See the description of these fields in the Unique Keys pane.

-

Referenced Constraint: Schema: Name of the schema containing the table with the primary key or unique constraint to which this foreign key refers.

-

Referenced Constraint: Table: Name of the table with the primary key or unique constraint to which this foreign key refers.

-

Referenced Constraint: Constraint: Name of the primary key or unique constraint to which this foreign key refers.

-

Referenced Constraint: On Delete: Action to take automatically when a row in the referenced table is deleted and rows with that value exist in the table containing this foreign key:

NO ACTION(shown by a crossing line in diagrams) performs no action on these rows;CASCADE(shown by an "X") deletes these rows;SET NULL(shown by a small circle) sets null all columns in those rows that can be set to a null value. -

Assocations: Local Column: Lists the column in the currently selected (local) table that is included in the foreign key definition. For each referenced column in the foreign key definition, select the name of a column in the edited table.

-

Associations: Referenced Column: For each local column, identifies the column in the other (foreign) table that must have a value matching the value in the local column.

Table Constraints Pane

Specifies one or more check constraints for the table.

A check constraint specifies a condition that must be met when a row is inserted into the table or when an existing row is modified.

-

Name: Name of the check constraint definition.

-

Check Condition: Condition that must be met for a row to fulfil the check constraint. You can use any valid CHECK clause (without the CHECK keyword). For example, to indicate that the value in a numeric column named RATING must be from 1 to 10, you can specify rating >=1 and rating <= 10.

-

Enabled: If this option is checked, the check constraint is enforced.

Storage Pane

Enables you to specify storage options for the table.

When you create or edit a table or an index, you can override the default storage options.

-

Organization: Specifies that the table is stored and organized with (Index) or without an index (Heap) or as an external table (External).

-

Tablespace: Name of the tablespace for the table or index.

-

Logging: ON means that the table creation and any subsequent INSERT operations against the table are logged in the redo log file. OFF means that these operations are not logged in the redo log file.

-

Row Archival: YES enables in-database archiving, which allows you to archive rows within the table by marking them as invisible.

External Table Pane

Specifies options for an external table.

An external table is a read-only table whose metadata is stored in the database but whose data in stored outside the database.

External Table

-

Access Driver Type: Specifies the type of external table.

-

ORACLE_LOADER: Extracts data from text data files. This is the default access driver, which loads data from external tables to internal tables.

-

ORACLE_DATAPUMP: Extracts data from binary dump files. This access driver can perform both loads and unloads.

-

ORACLE_BIGDATA: Extracts data from Oracle Big Data Appliance.

-

ORACLE_HDFS: Extracts data stored in a Hadoop Distributed File System (HDFS).

-

ORACLE_HIVE: Extracts data stored in Apache HIVE.

-

-

Default Directory: Specifies the default directory to use for all input and output files that do not explicitly name a directory object. The location is specified with a directory object, not a directory path.

-

Access Params: Assigns values to the parameters of the specific access driver for the external table. Access parameters are optional.

-

OPAQUE_FORMAT_SPEC: The opaque_format_spec specifies all access parameters for the ORACLE_LOADER, ORACLE_DATAPUMP, ORACLE_HDFS, and ORACLE_HIVE access drivers. Field names specified in the opaque_format_spec must match columns in the table definition, else Oracle Database ignores them.

-

USING CLOB: Enables you to derive the parameters and their values through a subquery. The subquery cannot contain any set operators or an ORDER BY clause. It must return one row containing a single item of data type CLOB.

-

-

Reject Limit: The number of conversion errors that can occur during a query of the external data before an Oracle Database error is returned and the query is aborted.

-

Project Column: Determines how the access driver validates the rows of an external table in subsequent queries.

-

ALL: Processes all column values, regardless of which columns are selected, and validates only those rows with fully valid column entries. If any column value raises an error, such as a data type conversion error, the row is rejected even if that column was not referenced in the select list of the query.

-

REFERENCED: Processes only those columns in the select list of the query.

The ALL setting guarantees consistent result sets. The REFERENCED setting can result in different numbers of rows returned, depending on the columns referenced in subsequent queries, but is faster than the ALL setting. If a subsequent query selects all columns of the external table, then the settings behave identically.

-

-

Location: Specifies the data files for the external table. Use the Add (+) icon to add each location specification.

-

For ORACLE_LOADER and ORACLE_DATAPUMP, the files are named in the form

directory:file. The directory portion is optional. If it is missing, then the default directory is used as the directory for the file. If you are using the ORACLE_LOADER access driver, then you can use wildcards in the file name. An asterisk (*) signifies multiple characters and a question mark (?) signifies a single character. -

For ORACLE_HDFS, LOCATION is a list of Uniform Resource Identifiers (URIs) for a directory or for a file. There is no directory object associated with a URI.

-

For ORACLE_HIVE, LOCATION is not used. Instead, the Hadoop HCatalog table is read to obtain information about the location of the data source (which could be a file or another database).

-

Opaque Format Spec

Specifies all access parameters for the ORACLE_LOADER, ORACLE_DATAPUMP, ORACLE_HDFS, and ORACLE_HIVE access drivers.

For example:

RECORDS DELIMITED BY NEWLINE CHARACTERSET US7ASCII

TERRITORY AMERICAN

BADFILE log_file_dir:'ext_1v3.bad'

LOGFILE log_file_dir:'ext_1v3.log'

FIELDS TERMINATED BY "|" OPTIONALLY ENCLOSED BY '^' LDRTRIM

( PROD_ID,

CUST_ID ,

TIME_ID DATE(10) "YYYY-MM-DD",

CHANNEL_ID ,

PROMO_ID ,

QUANTITY_SOLD ,

AMOUNT_SOLD ,

UNIT_COST ,

UNIT_PRICE

)

and the full statement:

CREATE TABLE SH.SALES_TRANSACTIONS_EXT

(

PROD_ID NUMBER ,

CUST_ID NUMBER ,

TIME_ID DATE ,

CHANNEL_ID NUMBER ,

PROMO_ID NUMBER ,

QUANTITY_SOLD NUMBER ,

AMOUNT_SOLD NUMBER (10,2) ,

UNIT_COST NUMBER (10,2) ,

UNIT_PRICE NUMBER (10,2)

)

ORGANIZATION EXTERNAL

(

TYPE ORACLE_LOADER

DEFAULT DIRECTORY DATA_FILE_DIR

ACCESS PARAMETERS

(

RECORDS DELIMITED BY NEWLINE CHARACTERSET US7ASCII

TERRITORY AMERICAN

BADFILE log_file_dir:'ext_1v3.bad'

LOGFILE log_file_dir:'ext_1v3.log'

FIELDS TERMINATED BY "|" OPTIONALLY ENCLOSED BY '^' LDRTRIM

( PROD_ID ,

CUST_ID ,

TIME_ID DATE(10) "YYYY-MM-DD",

CHANNEL_ID ,

PROMO_ID ,

QUANTITY_SOLD ,

AMOUNT_SOLD ,

UNIT_COST ,

UNIT_PRICE

)

)

LOCATION ( "DATA_FILE_DIR":'sale1v3.dat')

)

REJECT LIMIT 100

;CLOB Subquery

Type or copy and paste the query.

Materialized View Pane

Specifies options for a materialized view.

Query: Contains the SQL code for the query part of the view definition. Type or copy and paste the query.

General

-

On Pre-built Table: If Yes, an existing table is registered as a preinitialized materialized view. This option is particularly useful for registering large materialized views in a data warehousing environment. The table must have the same name and be in the same schema as the resulting materialized view, and the table should reflect the materialization of a subquery.

-

Reduced Precision: Yes authorizes the loss of precision that will result if the precision of the table or materialized view columns do not exactly match the precision returned by the subquery. If No , the precision of the table or materialized view columns must exactly match the precision returned by the subquery, or the create operation will fail.

-

For Update: Select Yes to allow a subquery, primary key, object, or rowid materialized view to be updated. When used in conjunction with Advanced Replication, these updates will be propagated to the master.

-

Real Time MV: Select Yes to create a real-time materialized view or a regular view. A real-time materialized view provides fresh data to user queries even when the materialized view is not in sync with its base tables due to data changes. Instead of modifying the materialized view, the optimizer writes a query that combines the existing rows in the materialized view with changes recorded in log files (either materialized view logs or the direct loader logs). This is called on-query computation.

-

Query Rewrite: If Enable, the materialized view is enabled for query rewrite, which transforms a user request written in terms of master tables into a semantically equivalent request that includes one or more materialized views.

-

Build: Specifies when to populate the materialized view. Immediate indicates that the materialized view is to be populated immediately. Deferred indicates that the materialized view is to be populated by the next refresh operation. If you specify Deferred, the first (deferred) refresh must always be a complete refresh; until then, the materialized view has a staleness value of unusable, so it cannot be used for query rewrite.

-

Use Index: If Yes, a default index is created and used to speed up incremental (fast) refresh of the materialized view. If No, this default index is not created. (For example, you might choose to suppress the index creation now and to create such an index explicitly later.)

-

Index Tablespace: Specifies the tablespace in which the materialized view is to be created. If a tablespace is not selected, the materialized view is created in the default tablespace of the schema containing the materialized view.

-

Cache: If Yes, the blocks retrieved for this table are placed at the most recently used end of the least recently used (LRU) list in the buffer cache when a full table scan is performed. This setting is useful for small lookup tables. If No, the blocks are placed at the least recently used end of the LRU list.

Refresh Clause

-

Refresh: Select Yes to enable refresh operations.

-

Refresh Type: The method of refresh operation to be performed:

-

Complete Refresh: Executes the defining query of the materialized view, even if a fast refresh is possible.

-

Fast Refresh: Uses the incremental refresh method, which performs the refresh according to the changes that have occurred to the master tables. The changes for conventional DML changes are stored in the materialized view log associated with the master table. The changes for direct-path INSERT operations are stored in the direct loader log.

-

Force Refresh: Performs a fast refresh if one is possible; otherwise, performs a complete refresh.

-

-

Action: The type of refresh operation to be performed:

-

On Demand: Performs a refresh when one of the DBMS_MVIEW refresh procedures are called.

-

On Commit: Performs a fast refresh whenever the database commits a transaction that operates on a master table of the materialized view. This may increase the time taken to complete the commit, because the database performs the refresh operation as part of the commit process.

-

Specify: Performs refresh operations according to what you specify in the Start on and Next fields.

-

-

Start Date: Starting date and time for the first automatic refresh operation. Must be in the future.

-

Next Date: Time for the next automatic refresh operation. The interval between the Start on and Next times establishes the interval for subsequent automatic refresh operations. If you do not specify a value, the refresh operation is performed only once at the time specified for Start on.

-

With: Refresh type, which determines the type of materialized view:

-

Primary Key: Creates a primary key materialized view, which allows materialized view master tables to be reorganized without affecting the eligibility of the materialized view for fast refresh.

-

Row ID: Creates a rowid materialized view, which is useful if the materialized view does not include all primary key columns of the master tables.

-

-

Default Storage: If Yes, DEFAULT specifies that Oracle Database will choose automatically which rollback segment to use. If you specify DEFAULT, you cannot specify the rollback_segment. DEFAULT is most useful when modifying, rather than creating, a materialized view.

-

Storage Type: MASTER specifies the remote rollback segment to be used at the remote master site for the individual materialized view. LOCAL specifies the remote rollback segment to be used for the local refresh group that contains the materialized view. This is the default.

-

Rollback Segment: Enter the name of the rollback segment.

-

Using Constraint: If this option is checked, more rewrite alternatives can be used during the refresh operation, resulting in more efficient refresh execution. The behavior of this option is affected by whether you select Enforced or Trusted.

-

Enforced: Causes only enforced constraints to be used during the refresh operation.

-

Trusted: Enables the use of dimension and constraint information that has been declared trustworthy by the database administrator but that has not been validated by the database. If the dimension and constraint information is valid, performance may improve. However, if this information is invalid, then the refresh procedure may corrupt the materialized view even though it returns a success status.

-

DDL Pane

You can review and save the SQL statements that are generated when creating or editing the object. If you want to make any changes, go back to the relevant panes and make the changes there.

-

For a new table, click CREATE to view the generated DDL statements.

-

When you edit table properties, click UPDATE to view the generated ALTER statements. For a new table, the UPDATE tab will not be available.

When you are finished, click Apply.