Intelligent Advisor

Oracle Intelligent Advisor lets business users deliver consistent and auditable advice across channels and business processes. They capture rules in natural language using Microsoft Word and Excel documents, and then build interactive customer-service experiences, called interviews, around those rules. You can leverage existing interviews by incorporating them into your skills.

For example, an energy company has a utility skill that lets customers report outages, pay bills, and view monthly usage. It also has a web form interview that gives advice on how to save on electricity. The company can enhance the utility skill by making that same interview available from the skill, where it is conducted in the form of a chat conversation. The skill designer doesn't have to write dialog flow to model the interview rules, and the interview rules can be maintained in just one place. You can learn more about Oracle Intelligent Advisor at Intelligent Advisor Documentation.

You can use only interviews that are for anonymous users. The skill can't access interviews that are enabled for portal users or agent users.

How the Intelligent Advisor Framework Works

The Intelligent Advisor service, in tandem with the Intelligent Advisor dialog flow component in Oracle Digital Assistant, allows you to integrate an Oracle Intelligent Advisor interview into your skill.

When a skill conducts an interview, it displays each field in a way that's appropriate for the channel, as described in How Artifacts Display in a Conversation. To navigate through the interview, customers either answer the question or say one of the following slash commands:

| Slash Command | Action |
|---|---|
| /reset | Go back to the first question. |
| /back | Go back to the previous question. |
| /exit | Exit the interview. If a user exits an interview and then triggers the Intelligent Advisor dialog flow state again in the same session, the skill asks the user whether they want to resume the previous interview. |

Tip:

You can configure your own text for the slash commands. For example, you can change /back to /previous.
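To make the navigation model concrete, here is a minimal Python sketch of how an utterance could be checked against the slash commands before it's treated as an answer. It's illustrative only: `resolve_command`, the action names, and the case-insensitive matching are assumptions, not the Intelligent Advisor component's implementation. It also shows the effect of relabeling /back as /previous.

```python
from typing import Mapping, Optional

# Hypothetical default command text. The Tip above says the text is configurable.
DEFAULT_COMMANDS: Mapping[str, str] = {
    "reset": "/reset",  # go back to the first question
    "back": "/back",    # go back to the previous question
    "exit": "/exit",    # leave the interview (resumable in the same session)
}


def resolve_command(utterance: str, commands: Mapping[str, str] = DEFAULT_COMMANDS) -> Optional[str]:
    """Return the navigation action for a slash command, or None when the
    utterance should be treated as an answer to the current question."""
    text = utterance.strip().lower()
    for action, command_text in commands.items():
        if text == command_text.lower():
            return action
    return None


# Relabel /back as /previous; in this sketch the old text no longer matches.
custom = {**DEFAULT_COMMANDS, "back": "/previous"}
print(resolve_command("/previous", custom))  # back
print(resolve_command("/back", custom))      # None
print(resolve_command("/RESET"))             # reset
```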

Integrating your skill with an interview is a three-step process.

Add an Intelligent Advisor Service

Before you can access Oracle Intelligent Advisor interviews from any skills, you need to add an Intelligent Advisor service, which configures the connection between Oracle Digital Assistant and an Intelligent Advisor Hub's API client.

We'll guide you through the steps for obtaining the API client information from Intelligent Advisor Hub and for creating the Intelligent Advisor service in Digital Assistant. The steps in the Intelligent Advisor Hub must be completed by a Hub administrator.

- From Intelligent Advisor Hub, click the menu icon to open the side menu, click Permissions, and then click the Workspaces tab.

- Click the desired collection and ensure that Chat Service is selected so that, when deployments in the collection are activated, they're activated for chat service by default.

- Click the API clients tab.

- If you don't see a client that has the Chat Service role, then either assign the role to one of the existing clients or create a client with the Chat Service role enabled.

- Open the API client, make sure that the client is enabled, and make a note of the client's secret and identifier, which you'll need to create the Intelligent Advisor service.

- For the API client, either select Hub administrator or select Manager for the workspace. If neither is selected, then you won't be able to display the Hub's active chat deployments from the Intelligent Advisor service page, nor will you be able to create new skills directly from that page.

- You can now sign out of the Hub.

- In Digital Assistant, click the menu icon to open the side menu, click Settings, click Additional Services, and then click the Intelligent Advisor tab.

- Click + Service.

- In the New Intelligent Advisor Service dialog, provide a unique name for the service.

  This is the name that you'll use for the Intelligent Advisor Service Name property of the Intelligent Advisor component in your skill's dialog flow.

- Enter the host for the Intelligent Advisor Hub. Leave out the https:// prefix. For example: myhub.example.com.

- Set Client ID and Client Secret to the API client identifier and secret that you noted earlier.

- Click Create.

- Click Verify Settings to ensure that a connection can be made using the entered settings.
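Because the host value must be entered without the https:// prefix, a value pasted from a browser's address bar needs trimming first. The following Python sketch is a hypothetical helper, not part of Digital Assistant, that reduces a pasted URL to the bare host name. It keeps any explicit port; whether the Host field accepts a port isn't covered here.

```python
from urllib.parse import urlsplit


def normalize_hub_host(value: str) -> str:
    """Reduce a pasted Hub URL such as 'https://myhub.example.com/some/path/'
    to the bare host name ('myhub.example.com')."""
    value = value.strip()
    # urlsplit fills in netloc only when a scheme or a leading '//' is present.
    if "://" not in value:
        value = "//" + value
    host = urlsplit(value).netloc
    if not host:
        raise ValueError(f"no host found in {value!r}")
    return host


print(normalize_hub_host("https://myhub.example.com/"))  # myhub.example.com
```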

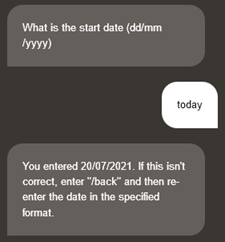

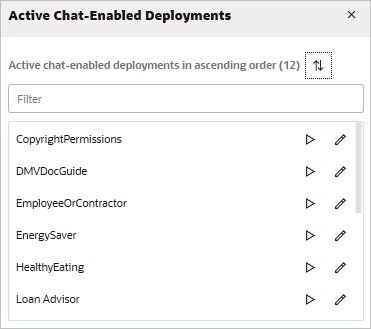

As you create skills that use the service, the Intelligent Advisor service page displays the names of the skills and the Hub deployments that the skills use. You can open the skill tester for any of these skills directly from the service's page. You can also create skills directly from this page. See Create and Test Skills From Intelligent Advisor Service Page.

For more information about API clients, see Activate a project and Update an API client's details in Intelligent Advisor Documentation.

Create and Test Skills From Intelligent Advisor Service Page

The Intelligent Advisor service page lists all the skills that use the Hub's active chat deployments, and you can run the skill tester for any of the listed skills. In addition, from this page, you can create a skill that accesses an active deployment.

To test a skill that's on the list, click the Test this Skill icon that appears next to the skill's name. When the Conversation Tester opens, start the conversation. With typical skills, you can simply type hi and press Enter to start. However, sometimes the skill is looking for specific phrases. In that case, if you enter a phrase that it doesn't understand, it should give you instructions on what to type.

Description of the illustration ia-test-skill.png

To create a skill from the Intelligent Advisor service page, select a service for anonymous users, and then click + New Skill. (Skills can't access deployments that are enabled for portal users or agent users.) Provide a display name for the skill, select a deployment from the drop-down, and click Create. The new skill appears in the list of skills that use the service.

Description of the illustration ia-new-skill-dialog.png

If the service's API client doesn't have either Hub administrator or Manager selected, then the drop-down can't list the deployments, and you'll get a notification that you do not have permission to perform the operation. See Add an Intelligent Advisor Service.

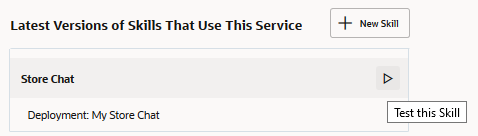

List Available Deployments

To see a list of the Hub's deployments that you can access from skills, go to Additional Services, click Intelligent Advisor, select the Hub's service, and click Deployments.

The list shows all the deployments that are chat enabled. However, you can use only the deployments that are for anonymous users. The skill can't access deployments that are enabled for portal users or agent users.

If a deployment is web enabled, then you'll see the Start web interview icon next to its name. You can click the icon to run the interview in a new browser tab.

To access the deployment in the Hub, click Manage deployment.

Description of the illustration ia-deployments.png

If the service's API client doesn't have either Hub administrator or Manager selected, then the page can't display the deployments and you'll get a notification that you do not have permission to perform the operation. See Add an Intelligent Advisor Service.

Creating a Conversational Interview

Although it's not a hard requirement, you can dramatically increase the effectiveness of your chat-based Oracle Intelligent Advisor interviews by employing conversational techniques.

Most interviews are optimized for forms on web pages, where screen labels, section labels, and component types, such as drop-down lists and check boxes, give visual clues about the context of the question and the choices you can make. Because skill conversations are different from traditional web interfaces, you might not be able to simply use your form-based interview for a conversation. To illustrate the point, have a person join you and sit back-to-back, with one person acting as the bot and the other acting as the interviewee. Read your form-based interview aloud (reading every label and prompt) and ask yourself if this is a conversation you think your customers would want to have with a bot.

Rather than reusing an interview that's specifically designed for a web page, consider designing a variation that's more conversational. Because you'll want to use the same policy model as the single source of truth, use the Oracle Intelligent Advisor Inclusions feature to create additional interviews for that policy model. See Inclusions in Intelligent Advisor Documentation. Alternatively, consider passing seed data to the interview to indicate that the interview is being conducted in a skill, and have the interview hide or display artifacts accordingly. This approach is similar to the steps described in Customize multi-channel interviews for different user types in Intelligent Advisor Documentation, except that you'll use your own attribute. See Pass Attribute Values and Connection Parameters.

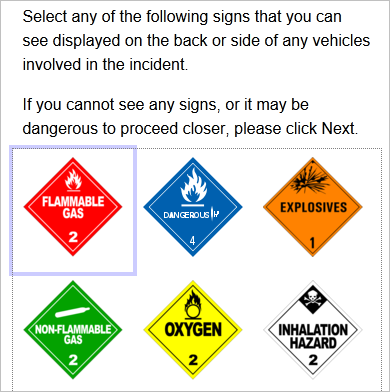

Here's an example. The following interview presents a set of image toggles. On a web page, the user can click the images that apply.

Description of the illustration ia-hazards-form.png

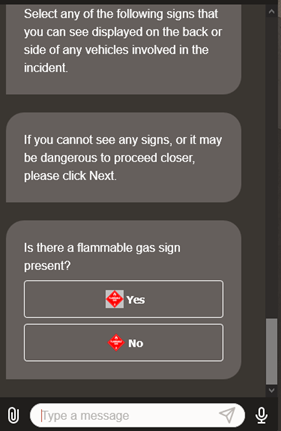

When conducted in a skill, the interview presents each image and asks the user if the sign is present. The user has to answer Yes or No for each sign, as shown in this example.

Description of the illustration ia-hazards-skill.png

Because the rules for this interview care only whether any sign is present, the interview can be optimized for a skill by displaying a single image with all of the signs and asking just one question: "Are any of these signs present?" The rules simply need to be modified to add a condition for "there is any sign present", as shown here.

dangerous goods need to be handled if

there is a combustibles sign present or

there is an explosives sign present or

there is a flammable gas sign present or

there is an inhalation sign present or

there is a nonflammable sign present or

there is an oxygen sign present or

there is a flammable solid sign present or

there is any sign present

Also note that the lead-in text doesn't apply to the skill conversation and, therefore, should be modified or hidden.
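Read as Boolean logic, the modified rule is a single disjunction: it holds if any individual sign is present or if the combined question is answered Yes. The Python sketch below mirrors that logic so you can see why the web form (individual toggles) and the skill (one combined question) reach the same conclusion. The attribute names are hypothetical stand-ins, and the sketch treats unanswered attributes as false, which is a simplification of how Intelligent Advisor handles unknown values.

```python
from typing import Optional

# Hypothetical names for the individual sign attributes in the rule above.
SIGNS = (
    "combustibles", "explosives", "flammable gas", "inhalation",
    "nonflammable", "oxygen", "flammable solid",
)


def dangerous_goods_need_handling(signs_present: dict, any_sign_present: Optional[bool] = None) -> bool:
    """True if any individual sign is present, or if the combined
    'any sign present' question was answered Yes. Unanswered values count as False."""
    individual = any(signs_present.get(sign, False) for sign in SIGNS)
    return individual or bool(any_sign_present)


# Web form: the user toggles individual images.
print(dangerous_goods_need_handling({"oxygen": True}))             # True
# Skill: the user answers only the single combined question.
print(dangerous_goods_need_handling({}, any_sign_present=True))    # True
print(dangerous_goods_need_handling({}, any_sign_present=False))   # False
```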

What Makes an Interview Conversational

The ideal interview for a skill would be one that uses the same concise natural language that a human would encounter in a person-to-person conversation. Instead of a cold, robotic, boring series of questions, strive for an interactive conversation that's welcoming, helpful, familiar, encouraging, and non-judgmental. Here are some ways to make an interview more conversational.

- Create a persona for your interview and have the interview convey a consistent personality that users can identify with. For example, you might create a persona who is professional and personable. Or you might want to create a persona who is professorial.

- Let the user know the goal and benefit for each set of related questions. For example: "Before we can approve your loan, we need to know about your assets and liabilities."

- Use active voice whenever possible. For example, instead of "The request has been submitted", say "The request is on its way."

- Use point-of-view terms such as "you", "your", "I", and "we". For example: "How much do you want to borrow?"

- Break up long series of yes/no questions with occasional interjections. For example: "OK. I have some more questions about this."

- If you have a long list of choices, break the list into smaller ones and ask a question that will help filter which list to display. Another option is to break up the list into smaller ones that each include "none." As soon as the user selects a choice, skip the remaining lists. Put the most common choices first and the least common last.

- Use encouraging words. For example: "We're almost done. I just need to get some references."

- Minimize repetition and avoid redundancy. For example, instead of "What's the asset type?" and "What's the asset value?", you can say "What type of asset is it?" and "What's its value?"

- Use contractions.

- Use familiar words. For example, instead of "Fulfillment Date", you could say "When did you receive your order?"

- Because a default value is displayed as a "suggested value", be judicious in its use. Don't set a default value unless you really want to suggest it as the optimum input. For example, if you gave "What is your employment status?" a default of "employed", the conversational output would be: What is your employment status? Suggested value "employed". That might be a bit jarring for someone who is out of work or retired.

- Try to reduce interview questions by using user variables, profile variables, and composite bag entities to gather as many answers as possible, and then pass the answers to the interview through the Intelligent Advisor component's Seed Data property, as explained in Pass Attribute Values and Connection Parameters.
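The suggestion to split a long choice list into smaller lists that each include "none", skipping the rest as soon as the user picks something, can be sketched as a simple loop. In this Python sketch, `ask_in_chunks` and the scripted user are hypothetical; in a real interview, the lists would be separate questions whose visibility depends on the earlier answers.

```python
from typing import Callable, Optional, Sequence

NONE_CHOICE = "none"


def ask_in_chunks(chunks: Sequence[Sequence[str]], ask: Callable[[list], str]) -> Optional[str]:
    """Offer each smaller list, with "none" appended, in order. Stop at the first
    real choice so the remaining lists are skipped. Returns None if the user
    answered "none" to every list."""
    for chunk in chunks:
        answer = ask(list(chunk) + [NONE_CHOICE])
        if answer != NONE_CHOICE:
            return answer
    return None


# Most common choices first, least common last (hypothetical asset types).
asset_lists = [["checking", "savings"], ["vehicle", "home"], ["stocks", "bonds"]]

shown = []


def scripted_user(options: list) -> str:
    # Stand-in for a chat turn: records what was shown and picks "home" if offered.
    shown.append(options)
    return "home" if "home" in options else NONE_CHOICE


print(ask_in_chunks(asset_lists, scripted_user))  # home
print(len(shown))                                  # 2 (the third list was skipped)
```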

After you complete your initial draft of the interview, you can quickly create a test skill and test the skill as described in Create and Test Skills From Intelligent Advisor Service Page. You might want to test it out on several people to get their feedback. After you complete the production skill, you can use the skill's analytics to help re-evaluate and refine the interview.

If you haven't designed a skill conversation before, you might want to read Conversational Design to learn about best practices.

How Artifacts Display in a Conversation

A skill conversation can't display some UI affordances, such as drop-down lists, checkboxes, and radio buttons. Instead, the affordances are converted to buttons.

- Drop-down lists and non-Boolean radio button sets are output as button sets

- For a check box group, each check box in the group is output with its prompt followed by Yes and No buttons

- A Boolean radio button is output as a prompt followed by Yes and No buttons (and an Uncertain button if the radio button is optional)
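The three conversion rules above can be summarized as a function that turns a form field into one or more (prompt, buttons) messages. This Python sketch is only a model of the documented behavior; the `Field` class and its `kind` values are hypothetical and don't correspond to Intelligent Advisor's data structures.

```python
from dataclasses import dataclass, field


@dataclass
class Field:
    kind: str    # hypothetical: "dropdown", "radio", "boolean_radio", or "checkbox_group"
    prompt: str
    options: list = field(default_factory=list)  # choices, or the prompts of each checkbox
    optional: bool = False


def to_button_prompts(f: Field) -> list:
    """Return (prompt, buttons) pairs, one per chat message."""
    if f.kind in ("dropdown", "radio"):
        # Drop-down lists and non-Boolean radio button sets become one button set.
        return [(f.prompt, list(f.options))]
    if f.kind == "checkbox_group":
        # Each check box becomes its own prompt with Yes and No buttons.
        return [(item, ["Yes", "No"]) for item in f.options]
    if f.kind == "boolean_radio":
        # Optional Boolean radio buttons also get an Uncertain button.
        return [(f.prompt, ["Yes", "No"] + (["Uncertain"] if f.optional else []))]
    raise ValueError(f"unsupported field kind: {f.kind}")


print(to_button_prompts(Field("checkbox_group", "Which apply?", ["Pets", "Kids"])))
```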

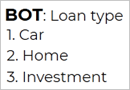

In text-only channels, the buttons are displayed as text, and the user has to type the answer.

Some affordances, such as dates and sliders, don't display if they don't have prompts. Some of the affordances aren't supported, such as signatures, captchas, and custom properties.

Before you design your interview, you should understand how the artifacts are handled differently between forms on web pages and skill conversations in the various channels.

As an example, this table shows how an image button group appears on a web form as compared to a skill conversation in a rich-UI channel, Oracle B2C Service default chat, and a text-only channel. Note that for the Oracle B2C Service default chat and the text-only channel, auto-numbering was enabled for the example.

| Intelligent Advisor Web Form | Rich-UI Channel | Oracle B2C Service Default Chat | Text-Only Channel |
|---|---|---|---|
| Description of the illustration ia-image-button-group-form.png | Description of the illustration ia-image-button-group-rich.png | Description of the illustration ia-image-button-group-da-agent.png | Description of the illustration ia-image-button-group-text-only.png |

This table describes how each interview artifact appears in a conversation.

| Intelligent Advisor Artifact | Rich-UI Channel | Oracle B2C Service Default Chat | Text-Only Channel |
|---|---|---|---|
| Button group: text, image, and text and image | Displays the prompt and the buttons, which are labeled using the item values. For text-and-image button groups and for image button groups, both the image and the item value are displayed. For image button groups, the buttons are displayed either horizontally or vertically, depending on whether Horizontal or Vertical is selected for the input control in Policy Modeler. Slack channels don't display the images. | Displays the prompt and the buttons, which are labeled using the item values. For image button groups, the buttons are displayed either horizontally or vertically, depending on whether Horizontal or Vertical is selected for the input control in Policy Modeler. | Displays the prompt and a list of the item values. The user types the text for the entry that they want. |
| Calendar (date, time, and date time) | Displays the prompt and accepts a date, date and time, or time, depending on the input data's Attribute. Accepts any format that's valid for the Digital Assistant DATE and TIME entities, respectively, such as today, 5/16/1953 11:00pm, or 13:00. Non-formatted values resolve to the UTC time zone. The valid date format depends on the locale settings for the DATE entity and the Intelligent Advisor component's Interview Locale property. See the property description at Intelligent Advisor Properties. | Displays the prompt and accepts a date, date and time, or time, depending on the input data's Attribute. Accepts any format that's valid for the Digital Assistant DATE and TIME entities, respectively, such as today, 5/16/1953 11:00pm, or 13:00. Non-formatted values resolve to the UTC time zone. The valid date format depends on the locale settings for the DATE entity and the Intelligent Advisor component's Interview Locale property. See the property description at Intelligent Advisor Properties. | Displays the prompt and accepts a date, date and time, or time, depending on the input data's Attribute. Accepts any format that's valid for the Digital Assistant DATE and TIME entities, respectively, such as today, 5/16/1953 11:00pm, or 13:00. Non-formatted values resolve to the UTC time zone. The valid date format depends on the locale settings for the DATE entity and the Intelligent Advisor component's Interview Locale property. See the property description at Intelligent Advisor Properties. |
| Captcha | Not supported | Not supported | Not supported |
| Checkbox | Each checkbox is output with its prompt followed by Yes and No buttons. You can use the | Each checkbox is output with its prompt followed by Yes and No buttons. You can use the | Each checkbox is output with the prompt followed by You can use the |
| Currency | Displays the label and accepts a numeric response (no currency symbol). The valid number format depends on the locale settings for the NUMBER entity and the Intelligent Advisor component's Interview Locale property. See the property description at Intelligent Advisor Properties. | Displays the label and accepts a numeric response (no currency symbol). The valid number format depends on the locale settings for the NUMBER entity and the Intelligent Advisor component's Interview Locale property. See the property description at Intelligent Advisor Properties. | Displays the label and accepts a numeric response (no currency symbol). The valid number format depends on the locale settings for the NUMBER entity and the Intelligent Advisor component's Interview Locale property. See the property description at Intelligent Advisor Properties. |
| Custom property | Not supported | Not supported | Not supported |
| Drop-down, filtered drop-down, and fixed list | Displays buttons, which are labeled using the display values. | Displays a list of the display values. The user types the text for the entry that they want. | Displays a list of the display values. The user types the text for the entry that they want. |
| Explanation | You can use the Intelligent Advisor component's Show Explanation property to specify whether to display the explanation. | You can use the Intelligent Advisor component's Show Explanation property to specify whether to display the explanation. | You can use the Intelligent Advisor component's Show Explanation property to specify whether to display the explanation. |
| Form | Displays the label followed by a button with its label set to the file name plus the file type, such as (PDF). | Displays the label, file name, file type, such as (PDF), and a clickable link to open the form in a web browser. | Displays the label, file name, file type, such as (PDF), and the URL. |
| Image | Displays the image. Ignores custom properties. | Displays the image. Ignores custom properties. | Images aren't supported in text-only channels. |
| Image toggle | Displays the image in a Yes button and again in a No button. Slack channels don't display the images. You can use the Intelligent Advisor component's | Displays the image in a Yes button and again in a No button. You can use the Intelligent Advisor component's | Displays the label followed by You can use the Intelligent Advisor component's |
| Label | Ignores style and custom properties. | Ignores style and custom properties. | Ignores style and custom properties. |
| Masked text | The expected format is shown as Answer format: <mask>. | The expected format is shown as Answer format: <mask>. | The expected format is shown as Answer format: <mask>. |
| Number | Displays the label and accepts a numeric response. The valid number format depends on the locale settings for the NUMBER entity and the Intelligent Advisor component's Interview Locale property. See the property description at Intelligent Advisor Properties. | Displays the label and accepts a numeric response. The valid number format depends on the locale settings for the NUMBER entity and the Intelligent Advisor component's Interview Locale property. See the property description at Intelligent Advisor Properties. | Displays the label and accepts a numeric response. The valid number format depends on the locale settings for the NUMBER entity and the Intelligent Advisor component's Interview Locale property. See the property description at Intelligent Advisor Properties. |
| Password | Because the user's utterance is passed as clear text, you should not ask for passwords. | Because the user's utterance is passed as clear text, you should not ask for passwords. | Because the user's utterance is passed as clear text, you should not ask for passwords. |
| Radio button, Boolean | Displays the prompt and Yes and No buttons. If the field is optional, Uncertain is also displayed. You can use the | Displays the prompts, You can use the | Displays the prompts You can use the |
| Radio button set, non-Boolean | Outputs the prompt and buttons, which are labeled using the display values. | Outputs the prompt and the display values. The user types the text for the entry that they want. | Outputs the prompt and the display values. The user types the text for the entry that they want. |
| Screen | The title text is displayed. HTML formatting is supported except for Slack. | The title text is displayed. HTML formatting is supported. | The title text is displayed. The actual HTML markup is output. Ignores style and custom properties. |
| Signature | Not supported | Not supported | Not supported |
| Slider | Displays the label. Then it displays one of the following artifacts: | Displays the label. Then it displays one of the following text: | Displays the label. Then it displays one of the following text: |
| Switch | The label is output followed by two buttons: Yes and No. You can use the | Displays the label followed by You can use the | Displays the label followed by You can use the |
| Tabular and portrait entity collects | Displays the prompt, the label for the add button, and Yes and No buttons. If the user clicks Yes, the user is prompted to enter the fields for a table row for the next entity. Then the skill repeats the process until the user answers No. | The behavior is the same as for rich-UI channels except that the user has to type Yes or No. | The behavior is the same as for rich-UI channels except that the user has to type Yes or No. |
| Text box and text area | For read-only, displays the label and value. Otherwise, displays the label and waits for a response. Even if the field is optional, the user must provide text before the conversation continues to the next step. | For read-only, displays the label and value. Otherwise, displays the label and waits for a response. Even if the field is optional, the user must provide text before the conversation continues to the next step. | For read-only, displays the label and value. Otherwise, displays the label and waits for a response. Even if the field is optional, the user must provide text before the conversation continues to the next step. |
| Upload | Not supported by embedded chat inlay. | Not supported. | Supported. |

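The following resource bundle entries supply default text for some of these artifacts. Text in parentheses indicates the artifacts that an entry applies to, and {0} and {1} are placeholders for values that the skill fills in at run time.

- IntelligentAdvisor - answerNotValid: The answer is not in the correct format. Try again.

- IntelligentAdvisor - defaultValue: Suggested value is {0}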

- IntelligentAdvisor - doneHelp: (Upload) When you are done with the upload, say {0}.

- IntelligentAdvisor - maskLabel: (Masked) Answer format: {0}

- IntelligentAdvisor - numberMinMax: (Slider) Enter a number between {0} and {1}.

- IntelligentAdvisor - outOfOrderMessage: You have already answered this question. When you want to step backwards to change a previous answer, say {0}.

- IntelligentAdvisor - resumeSessionPrompt: Do you want to restart the interview from where you previously left?

- IntelligentAdvisor - yesNoMessage: (Boolean Radio Button, Checkbox, Switch, Collects) Enter either {0} or {1}
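The {0} and {1} markers in these entries are positional placeholders. The Python sketch below fills them with `str.format` to show what the rendered messages look like. The `MESSAGES` mapping and `render` function are hypothetical, and the texts are copied from the defaults listed above; how Digital Assistant performs the substitution internally isn't described here.

```python
# Default texts copied from the resource bundle entries above (annotations removed).
MESSAGES = {
    "defaultValue": "Suggested value is {0}",
    "maskLabel": "Answer format: {0}",
    "numberMinMax": "Enter a number between {0} and {1}.",
    "yesNoMessage": "Enter either {0} or {1}",
    "outOfOrderMessage": ("You have already answered this question. When you want "
                          "to step backwards to change a previous answer, say {0}."),
}


def render(key: str, *args: object) -> str:
    """Fill the positional {0}, {1} placeholders of a resource bundle entry."""
    return MESSAGES[key].format(*args)


print(render("numberMinMax", 1, 10))        # Enter a number between 1 and 10.
print(render("yesNoMessage", "yes", "no"))  # Enter either yes or no
print(render("outOfOrderMessage", "/back"))
```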

Tips for Conversational Design of Interviews

Here are some interview-design suggestions for the various field types.

| Field Type | Suggestion |
|---|---|
| Assessment, advice, conclusion | Each field is output as a separate utterance. If you have several fields, the advice might scroll off the screen. Consider combining the information into as few fields as possible. For example, instead of saying |
| All | All input fields must have prompts. If the form uses HTML and CSS markup to make the interview present as a form, you might end up with a lot of blank lines in the skill conversation. To prevent this, set the Intelligent Advisor component's Remove HTML Tags From Output property to |
| Button, radio | Make sure that you provide unambiguous, meaningful choices that the user can quickly scan to immediately know the appropriate response. For example, instead of asking For text-only channels, where the user types the response, don't put punctuation in the labels. For example, instead of saying Also see the suggestions for text buttons. |
| Button, text | Make sure that you provide unambiguous, meaningful choices that the user can quickly scan to immediately know the appropriate response. For example, instead of asking For text-only channels, where the user types the response, keep the labels short and don't put punctuation in the labels. |

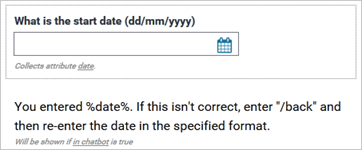

| Field Type | Suggestion |
|---|---|
| Calendar (date, date and time, time) | To enable locale-based formatting for the input date, ensure that Consider End User Locale is switched to On for the DATE entity as described in Locale-Based Date Resolution and ensure that The user can enter words that imply a date or time, such as today, now, Wednesday, noon, or 1:00. The natural language parser tries to make a reasonable guess for the specific date and/or time. It uses the current UTC time for its calculations. For example: Depending on where the user is in relation to the UTC time zone, the date or time might not resolve as the user intended. For this reason, the prompt should indicate an explicit format, and the interview should echo what the input actually resolved to. You might also want to output a string such as Here's an example of an interview design that shows the desired format and echoes the resolved date: Here's what the conversation looks like: |
| Checkbox | For each item in the checkbox set, the skill outputs the checkbox label and Yes and No buttons (or text for text-only channels). Because the label for the full checkbox set will quickly scroll off the screen, consider using questions for the checkbox labels. For example, instead of saying If the set is too long, the user might tire of clicking a multitude of Yes and No buttons and might not carefully read the prompts. Instead of creating a dozen or more checkboxes in a group, think of ways you can help the user narrow down the number of checkboxes, such as by using categories. Say, for example, the checkboxes fall into three groups. Ask if the first group is applicable and, if so, present the checkboxes for that group, and so on. Alternatively, break up the checkboxes into logical groupings. |
| Drop-down | Avoid long choice lists that require the user to scroll on a mobile phone or in a chat widget. If you have a long list of choices, think of ways to help the user narrow down their request so that the skill can provide a more concise list. Alternatively, break the list up into multiple drop-downs, each of which includes "none". As soon as the user selects a choice, skip the remaining drop-down lists. For text-only channels, consider that the user's input has to exactly match the button label, so keep the label as short as possible. |
| Image buttons | For text-only channels where the images aren't shown, make sure that the item value, which is used for the button text, clearly describes the choice. |
| Image toggle | The label should be in the form of a yes/no question. |
| Label, input | Always provide a prompt for an input field. Consider phrasing it as a question. For example, instead of saying |
| Label, output | Output the information in complete sentences. For example, instead of saying Don't put related hints, helpful information, or suggestions after an input field. In a conversation, this information is output after the user answers the question. Instead, put it in the label. For example, instead of saying say |
| Read-only | Output the information in a complete sentence. For example, instead of saying |
| Switch | Because switches display as Yes/No choices, consider using a question for the label. For example, instead of saying say |
| Tabular entity collect | The field label should explain that the user will have the opportunity to add multiple items. For example: Tell us about each of your assets? The label for the add button should be a question, such as |
| Text | In a conversation, the user must enter a value before moving to the next question. Therefore, if the field is optional, provide some value that the user can enter to skip to the next question. For example, you can say You'll have to modify your interview to properly handle that value. For text fields that are associated with currency and numeric attributes, the valid format depends on the skill's settings for the NUMBER and CURRENCY entities. When the entity's Consider End User Locale is switched to On, then the valid format depends on |
| Title | Omit or use as a conversational cue about what to expect next. For example, instead of saying |
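The Calendar tip says that relative words such as today are resolved against the current UTC time, so a user who is far from UTC can get a different date than they meant. This Python sketch reproduces that arithmetic for a hypothetical user at UTC-8 late in the evening and shows the kind of echo message the tip recommends. The time zone, timestamp, and `echo_resolved` wording are illustrative assumptions.

```python
from datetime import date, datetime, timedelta, timezone

# Hypothetical user in a UTC-8 zone (for example, U.S. Pacific Standard Time)
# who types "today" at 9:30 PM local time.
user_tz = timezone(timedelta(hours=-8))
user_now = datetime(2024, 1, 15, 21, 30, tzinfo=user_tz)

# Relative words are resolved against the current UTC time.
utc_now = user_now.astimezone(timezone.utc)

intended = user_now.date()  # what the user means by "today"
resolved = utc_now.date()   # what "today" resolves to in UTC


def echo_resolved(d: date) -> str:
    """Echo the resolved date back so that the user can catch a mismatch."""
    return f"Got it: {d:%A, %B} {d.day}, {d.year}."


print(intended)                 # 2024-01-15
print(resolved)                 # 2024-01-16
print(echo_resolved(resolved))  # Got it: Tuesday, January 16, 2024.
```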

Designing Interviews for Text-Only Channels

Text-only channels can't display buttons or images. If your skill that uses the Intelligent Advisor component will be accessible through text-only channels, here are some suggestions for designing interviews that work with both rich-UI channels and text-only channels.

- Turn on auto-numbering for the skill (via the skill's Enable Auto Numbering on Postback Actions in Task Flows configuration setting) and/or the digital assistant (using the digital assistant's Enable Auto Numbering on Postback Actions setting). When auto-numbering is turned on, the user can type the number instead of the full text.

- Consider using all lowercase for lists and buttons to make it easier for the user to type.

- Set the `systemComponent_IntelligentAdvisor_yesLabel`, `systemComponent_IntelligentAdvisor_noLabel`, and `systemComponent_IntelligentAdvisor_uncertainLabel` resource bundle entries to lowercase text to make it easier for the user to type those responses.

- Don't set the `systemComponent_IntelligentAdvisor_yesLabel` and `systemComponent_IntelligentAdvisor_noLabel` resource bundle entries to values that the natural language parser (NLP) won't recognize as a variation of Yes and No, respectively. For switches, check boxes, and Boolean radio buttons, the skill displays the values from the `systemComponent_IntelligentAdvisor_yesLabel` and `systemComponent_IntelligentAdvisor_noLabel` resource bundle entries (and `systemComponent_IntelligentAdvisor_uncertainLabel` for optional Boolean radio buttons). For text responses, the skill passes the user response through the NLP and converts any variations of yes and no to true and false, respectively. If you set the labels to strings that the NLP can't convert to true or false, the skill returns a message that the answer isn't in the correct format. For example, you can set `systemComponent_IntelligentAdvisor_yesLabel` to `ok` or `yeah`, and the NLP will convert the utterance to true. However, if you set `systemComponent_IntelligentAdvisor_yesLabel` to `Please`, the skill won't accept `Please` as a valid response.
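The yes/no handling described above can be modeled roughly as follows. This is a minimal Python sketch, not the skill's actual NLP: the `YES_VARIANTS` and `NO_VARIANTS` sets and the `to_boolean` and `label_is_safe` functions are hypothetical stand-ins for whatever the real parser recognizes. It shows why a label such as `ok` still works when the user types it back, while `Please` is rejected.

```python
from typing import Optional

# Hypothetical stand-ins for the yes/no variations the skill's NLP recognizes.
# The real parser's vocabulary isn't documented here and is likely larger.
YES_VARIANTS = {"yes", "y", "yeah", "yep", "ok", "okay", "sure"}
NO_VARIANTS = {"no", "n", "nope", "nah"}


def to_boolean(response: str) -> Optional[bool]:
    """Map a typed response to True or False, or None if it isn't recognized."""
    normalized = response.strip().lower()
    if normalized in YES_VARIANTS:
        return True
    if normalized in NO_VARIANTS:
        return False
    # The skill would reply that the answer isn't in the correct format.
    return None


def label_is_safe(label: str, expected: bool) -> bool:
    """A yes/no label is safe only if typing it back converts to the expected Boolean."""
    return to_boolean(label) == expected


for label in ["ok", "yeah", "Please"]:
    print(label, "->", "accepted" if label_is_safe(label, expected=True) else "rejected")
```

Checking `expected` rather than "any Boolean" also catches a mistake like setting the yes label to a word that the parser reads as no.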

Use the Intelligent Advisor Component in Your Skill

Before you can access an anonymous interview from a skill, ask a Digital Assistant administrator to add an Intelligent Advisor service to your instance, and ask a Hub Manager to deploy your interview to the Intelligent Advisor Hub and activate it for chat service. After those tasks are completed, you can access the interview from your skill by adding the Intelligent Advisor component to your dialog flow.

Tip:

You can quickly create the skill from the Intelligent Advisor service page.

The following steps walk you through using the component in a skill that was created for Visual dialog mode.

- In your skill, click Entities to view the Entities page, and then select the DATE entity.


- Switch Consider End User Locale to On, and select Nearest from the Resolve Date as drop-down list.

The interview uses these settings to determine the input date format and to interpret ambiguous dates as described in Ambiguity Resolution Rules for Time and Date Matches and Locale-Based Date Resolution.

- Verify that Consider End User Locale is switched to On for the CURRENCY and NUMBER entities.

- Click Flows, and then select the flow into which you want to insert the Intelligent Advisor component.


- Mouse over the line connecting the two components where you want to insert the new component, and click the + icon that appears.

- In the Search field of the Add State dialog, type `intell`, and then select the Intelligent Advisor component that is displayed.

- Fill in the Name and Description fields, and click Insert.

- In the property inspector for the newly added component, set the component's Intelligent Advisor Service Name property to the name of the service that was added on the Settings > Additional Services > Intelligent Advisor page.

- Set Deployment Project Name to the name of the anonymous interview that was deployed on the Intelligent Advisor Hub.

Tip:

If you're not sure about the exact spelling of the name, go to Additional Services, click Intelligent Advisor, select the Hub's service, and click Deployments to see a list of the deployment names.

- If you want the component to hide screen titles, set Hide All Screen Titles to `true`. By default, the interview displays screen titles.

- By default, the interview doesn't display the explanation. You can set the Show Explanation property to `always` to display the explanation every time, or to `ask` if you want the user to choose whether to see the explanation.

- If the skill has already obtained values for any of the interview's attributes, then you can use the Seed Data property to pass the values. Otherwise, remove the property.

See Pass Attribute Values and Connection Parameters to learn how to pass seed data and how to use the data in an interview.

- By default, the skill outputs the interview's HTML and CSS markup. If the interview contains HTML and CSS markup that causes the conversation to contain unnecessary blank lines, consider setting Remove HTML Tags from Output to `true`.

- If the interview expects a certain currency, then set Interview Expected Currency to the ISO 4217 code for that currency. When a code is specified, the user can input currency values only in the formats that are allowed for that currency.

- Add a state for handling the error that is thrown if there's a problem with the Intelligent Advisor integration.

- In the property inspector, select the Transitions tab.

- Create a new action and name it `error`.

- In the Transition to field, select Add State.

- Select the Send Message template or one of the User Messaging templates, fill in a Name and Description, and then click Insert.

A new component is inserted in the flow diagram, with an error transition connecting it to the Intelligent Advisor component.

- Select the newly inserted component and enter the text that you'd like users to see when an error occurs. For example:

We are having a problem with a connection. Can you please send email to contact@example.com to let them know that the loan advisor isn't working? Thank you.

- (Optional) Fine-tune the strings that the component uses for labels and standard messages. See Resource Bundle Entries for Intelligent Advisor.

- If the skill can be accessed by text-only channels, make sure the skill's Enable Auto Numbering on Postback Actions in Task Flows configuration setting is set to `true` or to an expression that resolves to `true` for text-only channels, such as the following expression: `${(system.channelType=='twilio'||system.channelType=='osvc')?then('true','false')}`. This lets the user select an option by typing a number instead of the full text of the option.

You can access this setting by selecting Settings in the left navigation for the skill and then selecting the Configuration tab.

- (Optional) Click Preview and test the interview for all the channels that will be able to access the skill.


For details about each of the component properties, see Intelligent Advisor Properties.

For details about each of the properties of the YAML version of the component and a simple example of their use, see System.IntelligentAdvisor.
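To make the auto-numbering expression from the steps above easier to reason about, here is a rough Python equivalent of that FreeMarker expression, plus a sketch of how a typed number could be resolved to an option. Both are illustrative only: `auto_numbering_setting` and `resolve_choice` are hypothetical helpers, the text-only channel types (`twilio`, `osvc`) come from the example expression rather than an exhaustive list, and whether the skill resolves numbers exactly this way is an assumption.

```python
from typing import Optional, Sequence

# Channel types treated as text-only in the example expression; adjust for your skill.
TEXT_ONLY_CHANNELS = {"twilio", "osvc"}


def auto_numbering_setting(channel_type: str) -> str:
    """Python equivalent of
    ${(system.channelType=='twilio'||system.channelType=='osvc')?then('true','false')}
    """
    return "true" if channel_type in TEXT_ONLY_CHANNELS else "false"


def resolve_choice(user_text: str, options: Sequence[str]) -> Optional[str]:
    """Accept either an option's 1-based number or its full text (case-insensitive)."""
    text = user_text.strip()
    if text.isdecimal():
        index = int(text) - 1
        return options[index] if 0 <= index < len(options) else None
    for option in options:
        if option.lower() == text.lower():
            return option
    return None


options = ["pay a bill", "report an outage", "view usage"]
print(auto_numbering_setting("twilio"))       # true
print(auto_numbering_setting("web"))          # false
print(resolve_choice("2", options))           # report an outage
print(resolve_choice("View Usage", options))  # view usage
```

Typing "2" is much easier than typing the full option text on an SMS-style channel, which is the point of enabling auto-numbering only where buttons can't be shown.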

Pass Attribute Values and Connection Parameters

If your skill has already obtained values for an interview's attributes, you can use the Seed Data property in the Intelligent Advisor component to pass the values to the interview. If your interview requires parameters for web service connectors, you can use the Connection Parameters property to pass the values.

Use the Seed Data property to define key-value pairs to pass values for any interview attribute that has the Seed from URL parameter option enabled. You can find this option in the Edit Attribute dialog in Oracle Policy Modeling.

The interview uses the Seed Data values to set default values, which are displayed as suggested values.
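The following Python sketch models the seed-data behavior described above: only attributes with the Seed from URL parameter option enabled pick up a value, and that value is just a suggestion that the user's own answer overrides. The attribute names (`household_size`, `postcode`, `monthly_bill`) and the `apply_seed_data` and `final_answer` helpers are hypothetical. The sketch also assumes that keys for non-seedable attributes are simply ignored.

```python
from typing import Any, Dict, Optional, Set


def apply_seed_data(seed_data: Dict[str, Any], seedable_attributes: Set[str]) -> Dict[str, Any]:
    """Return suggested defaults, keeping only attributes that allow seeding.

    Assumption: keys for attributes without Seed from URL parameter are ignored.
    """
    return {name: value for name, value in seed_data.items() if name in seedable_attributes}


def final_answer(attribute: str, user_input: Optional[Any], defaults: Dict[str, Any]) -> Any:
    """Keep the user's answer if they gave one; otherwise accept the suggested default."""
    return user_input if user_input is not None else defaults.get(attribute)


seed_data = {"household_size": 4, "postcode": "94065", "monthly_bill": 120}
seedable = {"household_size", "postcode"}  # monthly_bill isn't enabled for seeding
defaults = apply_seed_data(seed_data, seedable)
print(defaults)                                       # {'household_size': 4, 'postcode': '94065'}
print(final_answer("household_size", None, defaults))  # 4
print(final_answer("household_size", 2, defaults))     # 2
```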

If you want the interview to skip a screen or step when seed data is provided for it, follow these steps in Policy Modeling:

-

Add a rule to determine whether the seed data was provided:

-

For a Boolean attribute, use this rule:

The <screen name> screen should be shown if <attribute name> is uncertain or <attribute name> is currently unknown -

For a non-Boolean attribute, use this rule:

The <screen name> screen should be shown if <attribute name> is currently unknown

-

-

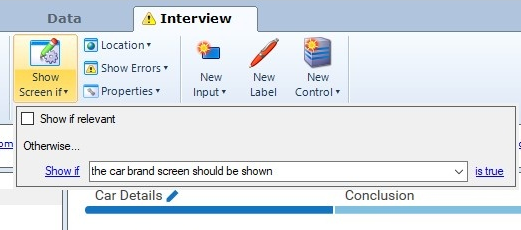

In the Interview tab, select the screen, click Show Screen if, and select the rule from the Show if drop-down list.

Description of the illustration ia-show-screen-if.pngIf you have more than one question on the screen and you want to hide just the question, then instead of using the Show Screen if button, select the question, click Mandatory, and select the rule from the Show if drop-down list.
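The two rules above differ only in how they treat Boolean attributes, which can also be uncertain. This Python sketch expresses the same decision. `State.UNKNOWN` and `State.UNCERTAIN` are hypothetical sentinels standing in for Intelligent Advisor's "currently unknown" and "uncertain" states, and `should_show_screen` is an illustrative name, not part of Policy Modeling.

```python
from enum import Enum
from typing import Any


class State(Enum):
    """Hypothetical sentinels for Intelligent Advisor's special attribute states."""
    UNKNOWN = "currently unknown"  # no value yet, for example because nothing was seeded
    UNCERTAIN = "uncertain"        # value is known to be uncertain (Boolean attributes)


def should_show_screen(value: Any, is_boolean: bool) -> bool:
    """Mirror the two 'screen should be shown' rules."""
    if value is State.UNKNOWN:
        return True  # both rules: show the screen when the attribute is currently unknown
    # Boolean rule only: also show the screen when the value is uncertain.
    return is_boolean and value is State.UNCERTAIN


print(should_show_screen(State.UNKNOWN, is_boolean=False))   # True
print(should_show_screen("94065", is_boolean=False))         # False: seeded, so skip
print(should_show_screen(State.UNCERTAIN, is_boolean=True))  # True
print(should_show_screen(True, is_boolean=True))             # False: seeded, so skip
```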

To learn more about seed data and how you can use it in an interview, see Intelligent Advisor Documentation.

If your interview uses a web service connector that contains data mappings to application data, you can use the Connection Parameters property to define key-value pairs for passing data to the connector.

To learn about parameters, see Data integration in Intelligent Advisor Documentation.

Access Interview Attributes

If you need to access the values of named attributes that were set during the interview, you can use the Variable for Interview Results property to pass in the name of a list variable. The named-attribute values will be stored in that variable as an array of key/value pairs.

If the user exits the interview before completion, then the variable that is named by Variable for Interview Results won't be created.
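If downstream logic needs the results as a lookup table, the array can be flattened into a dictionary. This Python sketch assumes each array element is an object with hypothetical `key` and `value` fields, so check the actual shape of the variable in your skill before relying on it. It also handles the case where the user exited early and the variable was never created. The attribute names in the sample data are made up.

```python
from typing import Any, Dict, List, Optional


def results_to_dict(results: Optional[List[Dict[str, Any]]]) -> Optional[Dict[str, Any]]:
    """Convert an array of key/value pairs into a dict.

    Returns None when the results variable doesn't exist, which is the case
    when the user exits the interview before completing it.
    """
    if results is None:
        return None
    # Assumed element shape: {"key": <attribute name>, "value": <attribute value>}.
    return {item["key"]: item["value"] for item in results}


completed = [
    {"key": "annual_savings", "value": 240},
    {"key": "recommend_solar", "value": True},
]
print(results_to_dict(completed))  # {'annual_savings': 240, 'recommend_solar': True}
print(results_to_dict(None))       # None: the user exited early
```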