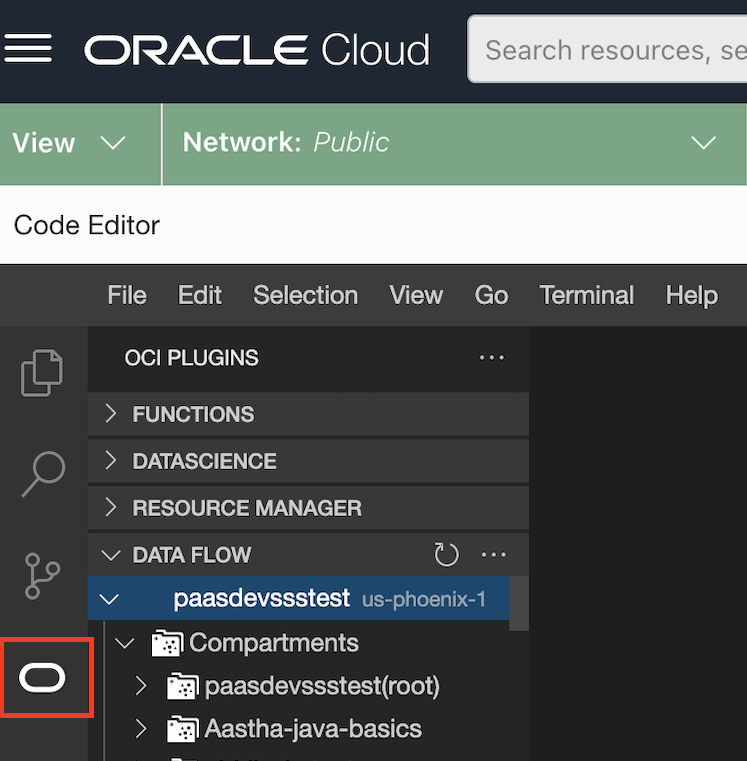

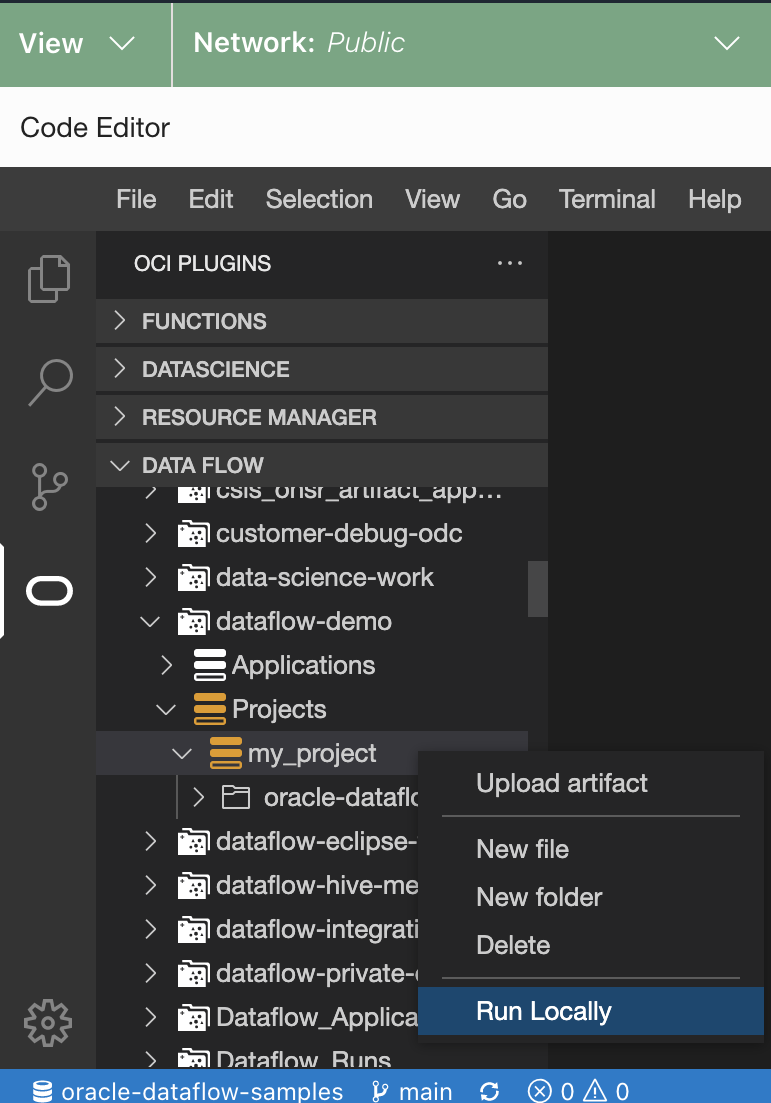

Running an Application with the Code Editor

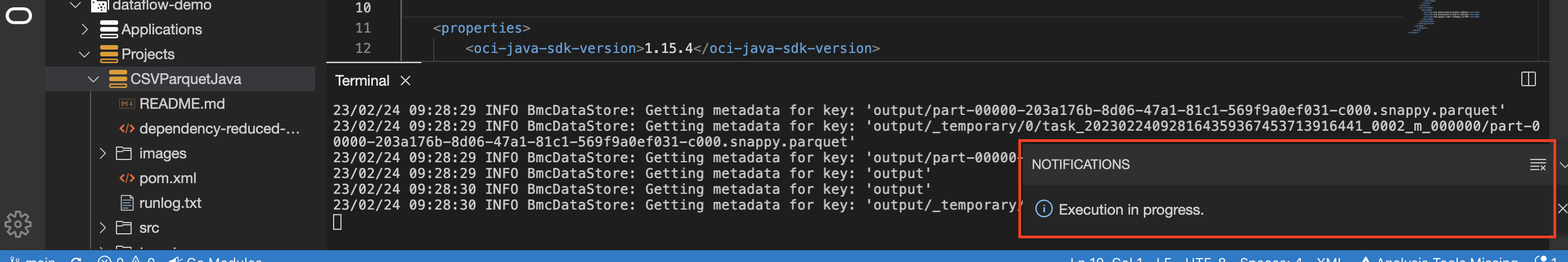

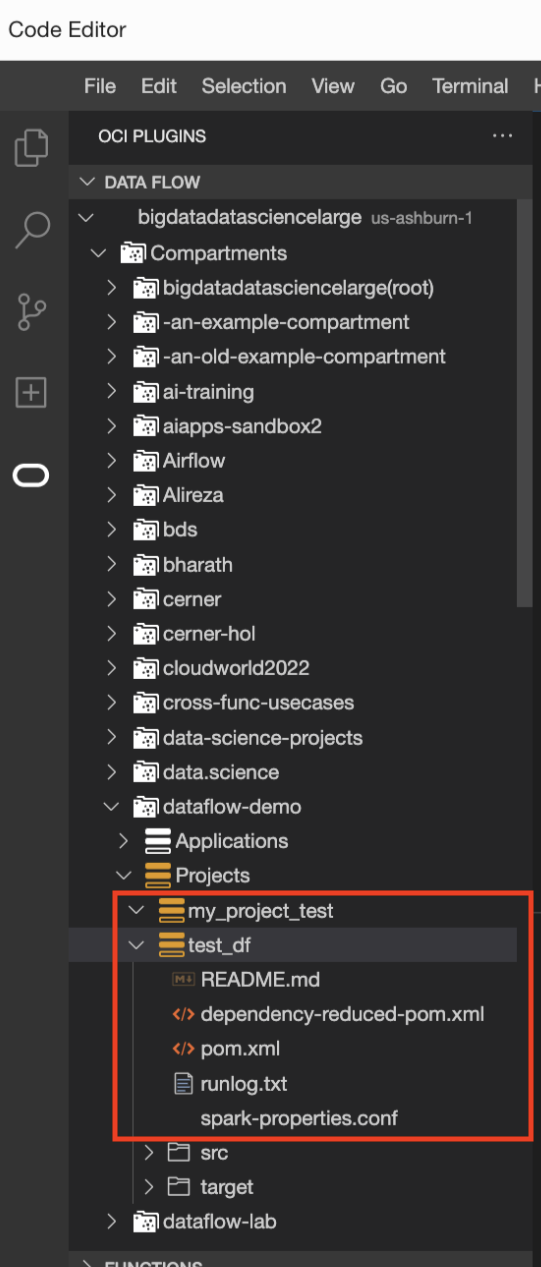

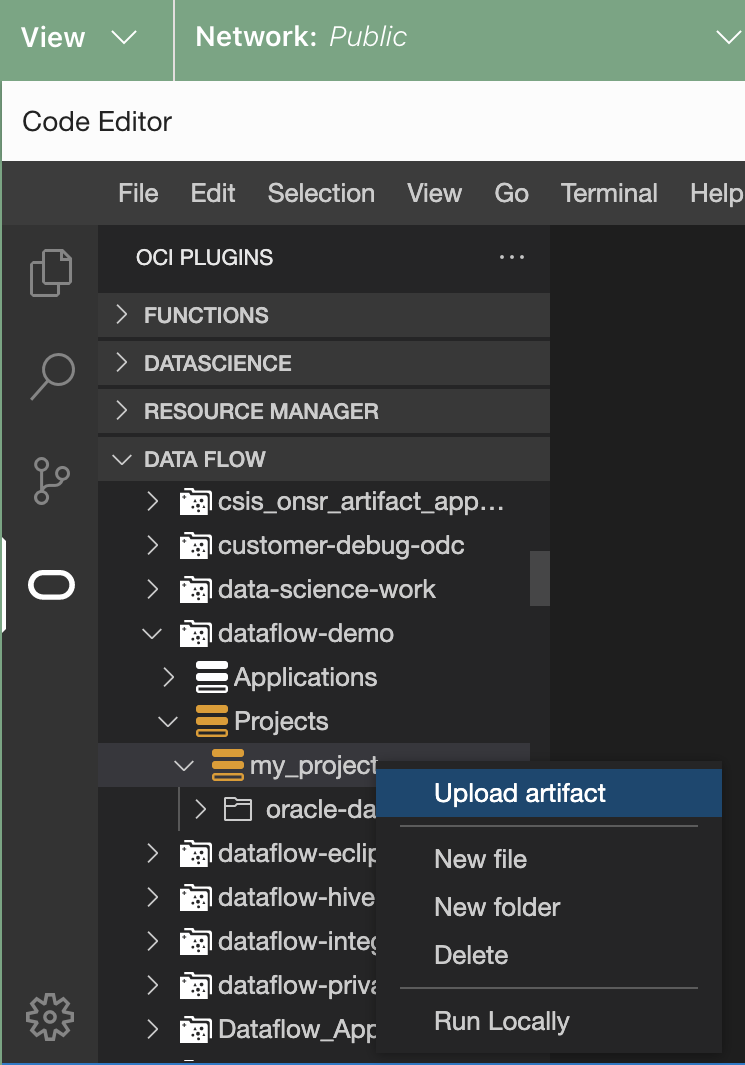

In the Console, you can use the Code Editor to run a Data Flow Application.

You must have created the config for user authentication as described in Using the Code Editor.

This task can't be performed using the CLI.

This task can't be performed using the API.

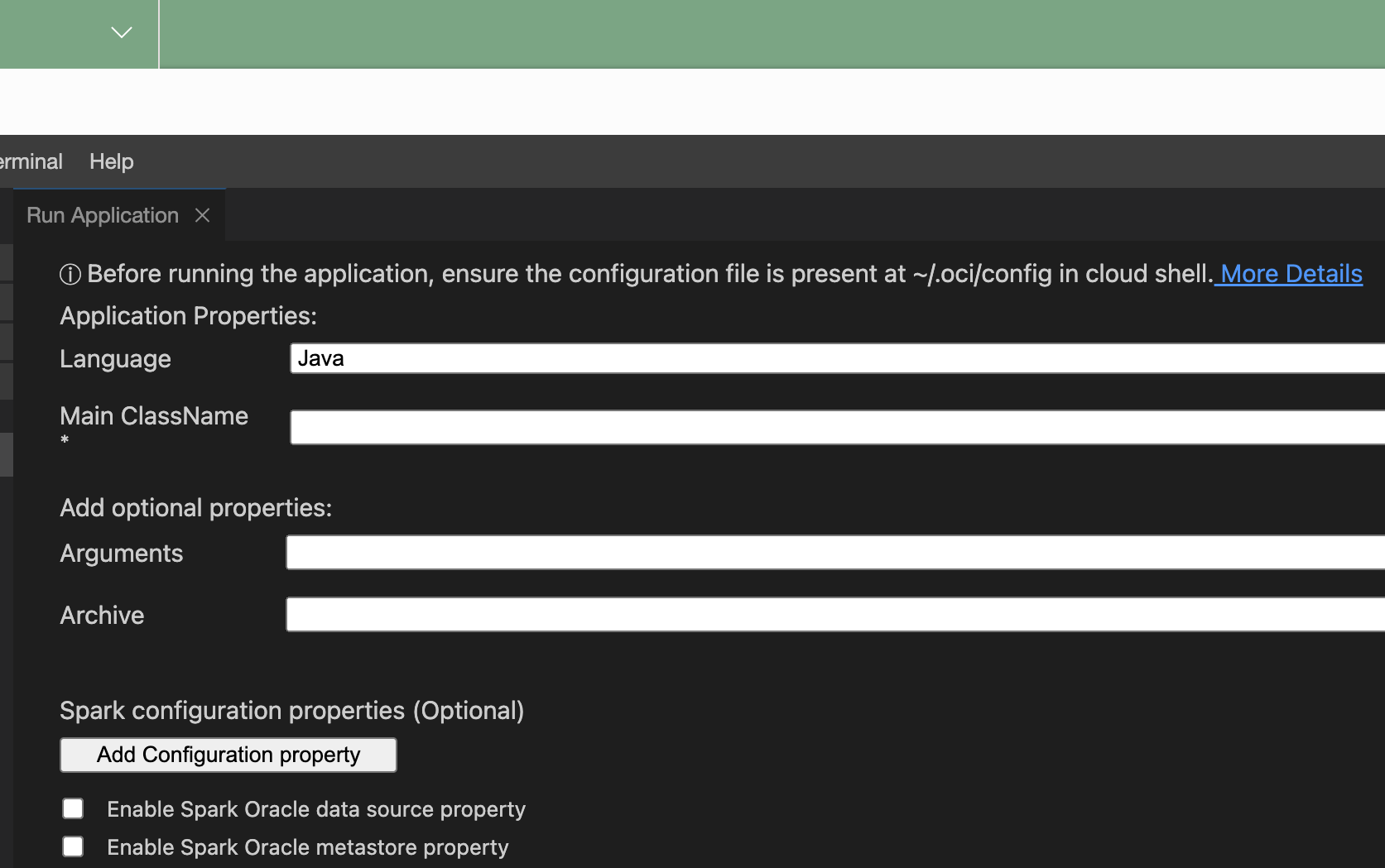

The Run Application window opens.