Skill Quality Reports

The Skill Quality reports enable you to quickly take stock of the intents and utterances across all versions of the skill.

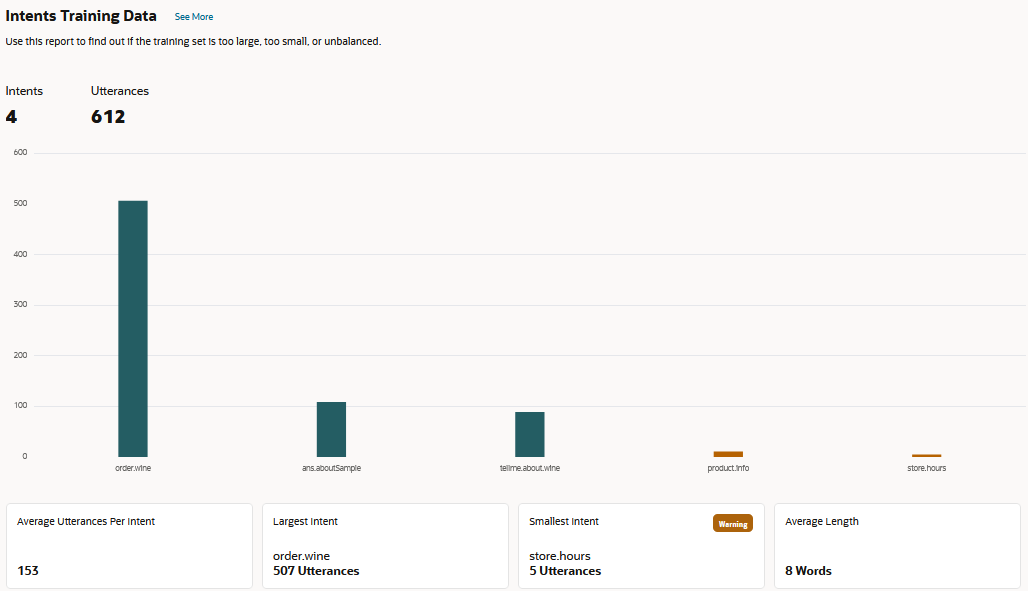

- The Overview Report – This report's metrics and graphs allow you to quickly assess the size and shape of your training data by breaking down each of the skill's intents by the number of utterances that it has. You can use this report as your data set grows throughout the development process to make sure that the number of intents, the number of utterances per intent, and the word count of each utterance always comply with our guidelines.

- The Anomalies Report – Breaks down the intents by anomalies: utterances that might be inappropriate for their intent because they are out of scope or mislabeled, or that are potentially applicable but difficult to classify because they are unusual.

Skill Quality Overview Report

Use this report to check that your training data meets the following guidelines:

- Your skill has at least the minimum of two intents but does not exceed the recommended maximum of 300 intents.

- Your training data does not exceed 25,000 utterances.

- Each intent has at least two utterances (12 or more are recommended for predictable training).

- The average utterance length is between three and 30 words.

This report tracks the intents that have been enabled.
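The guidelines above reduce to a handful of threshold checks. The following is a minimal sketch, not the report's implementation: it assumes the training data is available as a Python dict mapping each intent name to its list of utterances (a hypothetical structure), and it assumes a word is a whitespace-separated token.

```python
from statistics import mean

# Thresholds taken from the guidelines above.
MIN_INTENTS = 2
MAX_INTENTS = 300
MAX_UTTERANCES = 25_000
MIN_UTTERANCES_PER_INTENT = 2
RECOMMENDED_UTTERANCES_PER_INTENT = 12
MIN_AVG_WORDS = 3
MAX_AVG_WORDS = 30


def word_count(utterance: str) -> int:
    # Assumption: words are whitespace-separated tokens.
    return len(utterance.split())


def check_guidelines(training: dict[str, list[str]]) -> list[str]:
    """Return warnings for a hypothetical intent -> utterances mapping."""
    warnings = []
    n_intents = len(training)
    if n_intents < MIN_INTENTS:
        warnings.append(f"Only {n_intents} intent(s); at least {MIN_INTENTS} required.")
    if n_intents > MAX_INTENTS:
        warnings.append(f"{n_intents} intents exceeds the recommended maximum of {MAX_INTENTS}.")

    all_utterances = [u for utterances in training.values() for u in utterances]
    if len(all_utterances) > MAX_UTTERANCES:
        warnings.append(f"{len(all_utterances)} utterances exceeds the limit of {MAX_UTTERANCES}.")

    for intent, utterances in training.items():
        count = len(utterances)
        if count < MIN_UTTERANCES_PER_INTENT:
            warnings.append(f"{intent}: {count} utterance(s); at least {MIN_UTTERANCES_PER_INTENT} required.")
        elif count < RECOMMENDED_UTTERANCES_PER_INTENT:
            warnings.append(f"{intent}: {count} utterances; {RECOMMENDED_UTTERANCES_PER_INTENT} or more recommended.")

    if all_utterances:
        avg = mean(word_count(u) for u in all_utterances)
        if not MIN_AVG_WORDS <= avg <= MAX_AVG_WORDS:
            warnings.append(f"Average utterance length {avg:.1f} words is outside {MIN_AVG_WORDS}-{MAX_AVG_WORDS}.")
    return warnings


if __name__ == "__main__":
    # Hypothetical sample data for illustration only.
    training = {
        "CheckBalance": ["what is my balance", "how much money do I have"],
        "AgentTransfer": ["I need to talk to a live agent"],
    }
    for warning in check_guidelines(training):
        print(warning)
```

The checks run in the same order as the guideline list so that each warning maps back to one bullet.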

How to Use the Overview Report

The Overview report provides:

- A high-level view of the training data through its intents-by-utterance-count bar chart and metrics, which include the total number of intents and utterances across all versions of the skill. Using the bar chart and the metrics, you can find out how balanced or unbalanced your training set has become. For example, the report's graph, along with a wide disparity between the Largest intent and Smallest intent totals, might indicate a lopsided training set, one where you need to add data to close the gap between the largest and smallest intents.

| Metric | Description and Use |
|---|---|
| Intents | The total number of intents across all versions of the skill. Use this metric to find out if the skill has exceeded the recommended maximum of 300 intents. |
| Utterances | The total number of utterances across all of the intents. Use this metric to find out the actual size of the training set. |
| Largest intent | The intent with the most utterances. Compare this total against the Average utterances per intent metric. |
| Smallest intent | The intent with the fewest utterances. You may need to add utterances if this total is far below Average utterances per intent. |
| Average utterances per intent | The number of utterances averaged across all intents. Use this metric to find out if you're supplying enough data to your training set. |
| Average length | The average utterance length, in words, across the entire training set. Compare this metric against an intent's Average Utterance Length to find out if the word count of its utterances is too high or too low, which can negatively impact the model. |
| Min length | The shortest utterance in the training set. Use this metric to find out if any utterances in the training set have too few words (fewer than three), which might negatively impact model performance. |
| Max length | The longest utterance in the training set. Use this metric to find any long utterances (30 words or more), which may negatively impact model performance. |
| Answer Intent | The number of answer intents in the training set. Use this metric to compare the number of answer intents to regular (or transactional) intents. |

Note:

The Min length, Max length, and Average length metrics and their related warnings apply to native language skills, not to skills that use translation services.

The report flags problem areas with warnings. Hovering over a warning opens a diagnostic message, which puts the problematic utterance or intent in the context of our intent and utterance guidelines and suggests corrective actions. These messages also display in the Intents Issues pane.

Tip:

The warning messages accessed from the metrics provide you with a link to the Intent page.

- A breakdown of the training set on a per-intent basis. The report includes a table listing intents by language (for multilingual skills), utterance count, and average, minimum, and maximum utterance length in words. To see the latter three totals in the context of the overall training set, compare them to the Average length, Min length, and Max length metrics.

As with the high-level metrics, the report flags problematic intents with warning messages. A warning in the Issues column indicates that the intent is deficient in some aspect of utterance length. Clicking the corresponding warning in the Average Utterance Length, Max Utterance Length in Words, or Min Utterance Length in Words column opens a diagnostic message. You can apply the corrective action suggested by the message directly to the intent by clicking the link in the Intent column.


Description of the illustration intent-issues-table.png
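The skill-wide metrics and the per-intent table are straightforward aggregates. As a rough sketch under the same assumptions as before (a hypothetical dict of intent name to utterances, whitespace-separated word counts), they could be reproduced as follows. This is not how the report computes them internally. The length flags use the thresholds from the Min length and Max length descriptions: fewer than three words, and 30 words or more.

```python
from dataclasses import dataclass
from statistics import mean


def word_count(utterance: str) -> int:
    # Assumption: words are whitespace-separated tokens.
    return len(utterance.split())


@dataclass
class IntentStats:
    """One row of a per-intent table (hypothetical structure)."""
    intent: str
    utterance_count: int
    avg_words: float
    min_words: int
    max_words: int


def overview_metrics(training: dict[str, list[str]]) -> dict:
    """Skill-wide totals. Assumes at least one intent with at least one utterance."""
    sizes = {intent: len(utterances) for intent, utterances in training.items()}
    lengths = [word_count(u) for utterances in training.values() for u in utterances]
    largest = max(sizes, key=sizes.get)
    smallest = min(sizes, key=sizes.get)
    return {
        "intents": len(sizes),
        "utterances": sum(sizes.values()),
        "largest_intent": (largest, sizes[largest]),
        "smallest_intent": (smallest, sizes[smallest]),
        "avg_utterances_per_intent": sum(sizes.values()) / len(sizes),
        "avg_length": mean(lengths),
        "min_length": min(lengths),
        "max_length": max(lengths),
    }


def per_intent_stats(training: dict[str, list[str]]) -> list[IntentStats]:
    """Per-intent word-length statistics; intents with no utterances are skipped."""
    rows = []
    for intent, utterances in training.items():
        lengths = [word_count(u) for u in utterances]
        if lengths:
            rows.append(IntentStats(intent, len(lengths), mean(lengths), min(lengths), max(lengths)))
    return rows


def length_warnings(rows: list[IntentStats], too_short: int = 3, too_long: int = 30) -> list[str]:
    """Flag intents whose shortest utterance has fewer than `too_short` words
    or whose longest utterance has `too_long` words or more."""
    warnings = []
    for row in rows:
        if row.min_words < too_short:
            warnings.append(f"{row.intent}: shortest utterance has {row.min_words} word(s)")
        if row.max_words >= too_long:
            warnings.append(f"{row.intent}: longest utterance has {row.max_words} words")
    return warnings
```

Comparing each `IntentStats` row with `overview_metrics` mirrors the advice to read per-intent totals against the overall Average length, Min length, and Max length metrics.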

The Skill Quality Anomalies Report

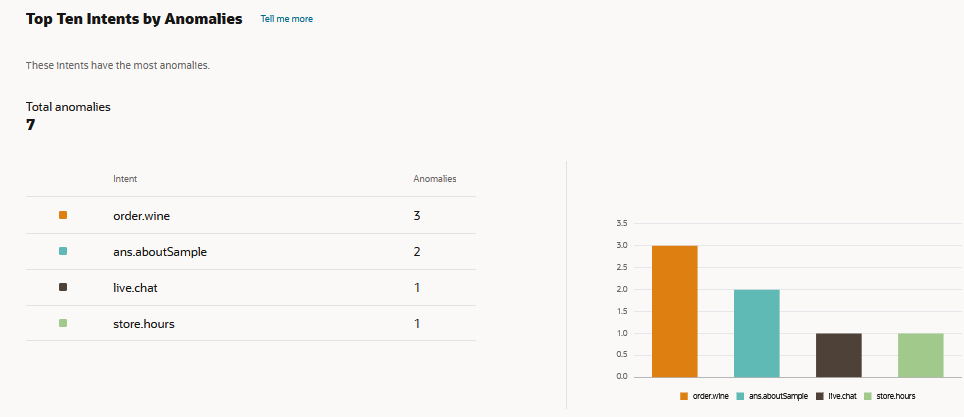

Like the Overview report, this report breaks down intents by utterances. The utterances tallied in this report, however, are anomalies, utterances that might be inappropriate for their intent because they are out of scope or mislabeled, or are potentially applicable but unique. Anomalies can cause the boundaries between intents to become blurred, particularly if you've extended your skill to cover new domains or have partitioned a large intent into smaller intents.

How to Use the Anomalies Report

This report not only ranks the intents by anomalies, but also ranks the anomalies themselves by how different they are from other utterances in the training set.

Description of the illustration anomalies-intent-breakdown.png
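This section doesn't describe the model the report uses to detect anomalies. Purely to illustrate the idea of ranking utterances by how different they are from the rest of their intent, the sketch below substitutes a simple bag-of-words cosine similarity: an utterance's anomaly score is one minus its average similarity to the other utterances in the same intent. The scoring method, the `threshold` cut-off, and the dict-based training data are all assumptions.

```python
import math
from collections import Counter


def vectorize(utterance: str) -> Counter:
    # Bag-of-words vector: lowercase, whitespace-separated tokens.
    return Counter(utterance.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Counter returns 0 for missing words, so absent terms contribute nothing.
    dot = sum(count * b[word] for word, count in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_anomalies(training: dict[str, list[str]], threshold: float = 0.9):
    """Score each utterance by 1 - its mean similarity to the other utterances
    in its own intent, then rank. `threshold` is a hypothetical cut-off."""
    scored = []
    for intent, utterances in training.items():
        vectors = [vectorize(u) for u in utterances]
        for i, utterance in enumerate(utterances):
            others = [v for j, v in enumerate(vectors) if j != i]
            if not others:
                continue  # nothing to compare a single-utterance intent against
            mean_sim = sum(cosine(vectors[i], v) for v in others) / len(others)
            scored.append((1.0 - mean_sim, intent, utterance))
    # Most anomalous utterances first.
    scored.sort(key=lambda s: s[0], reverse=True)
    anomalies = [s for s in scored if s[0] >= threshold]
    # Rank intents by how many anomalies they contain.
    intents_by_anomalies = Counter(intent for _, intent, _ in anomalies).most_common()
    return anomalies, intents_by_anomalies
```

Scoring within an intent keeps the example close to the document's framing: an anomaly is unusual relative to its own intent's training data, not to the skill as a whole.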

You can sort the anomalies in this list both by severity and by intent. You can filter the anomalies by intent, by words or phrases in utterances, and, for a multilingual skill, by language.

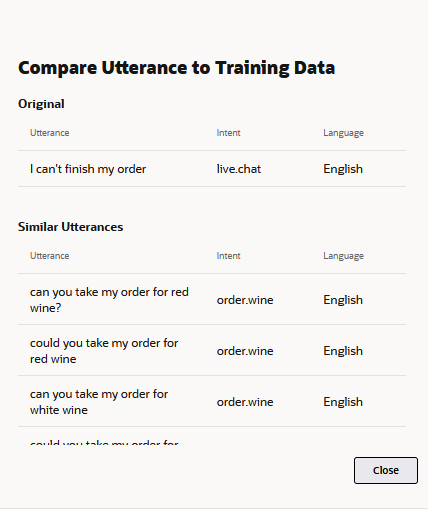

To find out where (or if) an utterance belongs in your training set, click View Similar in the list to view it in the Similar Utterances report.

Description of the illustration anomalies-list.png

Like the Similar Utterances report used in the Utterance Tester, this report ranks the other utterances in the training set relative to the selected utterance. In this case, however, the utterance isn't a test phrase, but an anomalous utterance that's already in the training set.

Description of the illustration similar-utterance-report-anomalies.png
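As an illustration only, ranking every other training utterance against a selected anomaly might look like the sketch below. It assumes Jaccard word-set similarity rather than whatever model the Similar Utterances report actually uses, and the sample data and `top_n` parameter are hypothetical. The intents of the top matches hint at where, if anywhere, the anomaly belongs.

```python
def jaccard(a: str, b: str) -> float:
    """Word-set overlap between two utterances (0.0 = none, 1.0 = identical sets)."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 0.0


def similar_utterances(selected: str, training: dict[str, list[str]], top_n: int = 5):
    """Rank every other training utterance by similarity to `selected`."""
    candidates = [
        (jaccard(selected, utterance), intent, utterance)
        for intent, utterances in training.items()
        for utterance in utterances
        if utterance != selected  # skip the anomaly itself
    ]
    candidates.sort(key=lambda c: c[0], reverse=True)
    return candidates[:top_n]


if __name__ == "__main__":
    # Hypothetical training data for illustration only.
    training = {
        "AgentTransfer": ["I need to talk to a live agent", "I can't log into my account"],
        "AccountHelp": ["I can't log into my online account", "reset my password"],
    }
    for score, intent, utterance in similar_utterances("I can't log into my account", training):
        print(f"{score:.2f}  {intent:<14} {utterance}")
```

In this toy data the closest match sits in a different intent than the anomaly, which is the kind of signal that suggests a misclassified utterance.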

Based on the anomaly's relationship to the other utterances in the report, you may find that the utterance is simply misclassified or not pertinent to the skill at all. Other anomalies, however, may be anomalous only in that they are implicit requests: they ask the same questions as the other training data, but in a different way. For example, "I can't log into my account" might be evaluated as an anomaly for an agent transfer intent whose training utterances cluster around more straightforward requests like "I need to talk to a live agent." Adding more utterances like these implicit requests adds depth to the model.

To resolve anomalies:

- Run the report.

- Select an utterance, then click View Similar.

- In the View Similar dialog, evaluate how well the utterance fits the intent:

  - If the utterance belongs to the intent, add similar utterances.

  - If the utterance is misclassified, assign it to another intent.

  - If the utterance does not belong in the training set, delete it.

- Retrain the skill.